Agentic AI is not merely another assistant that autocompletes code. It is a class of systems that can make decisions, plan, and take action. Give it a goal, such as fixing flaky tests on the main branch, moving logging to OpenTelemetry, or patching a security bug and opening a pull request, and it will break the work into steps, run the right tools, check the results, and loop until the job is done, with only light human oversight.

This shift affects everything: team roles, daily workflows, release habits, and the way success is measured. The upside is faster cycles, fewer routine headaches, and more consistent results. The downside is also real: hidden regressions, gaps in governance, and new security risks.

This article outlines what agentic AI is, how it’s changing the software lifecycle, and what engineers can do now to succeed in this new world.

Understanding Agentic AI and Why It Matters

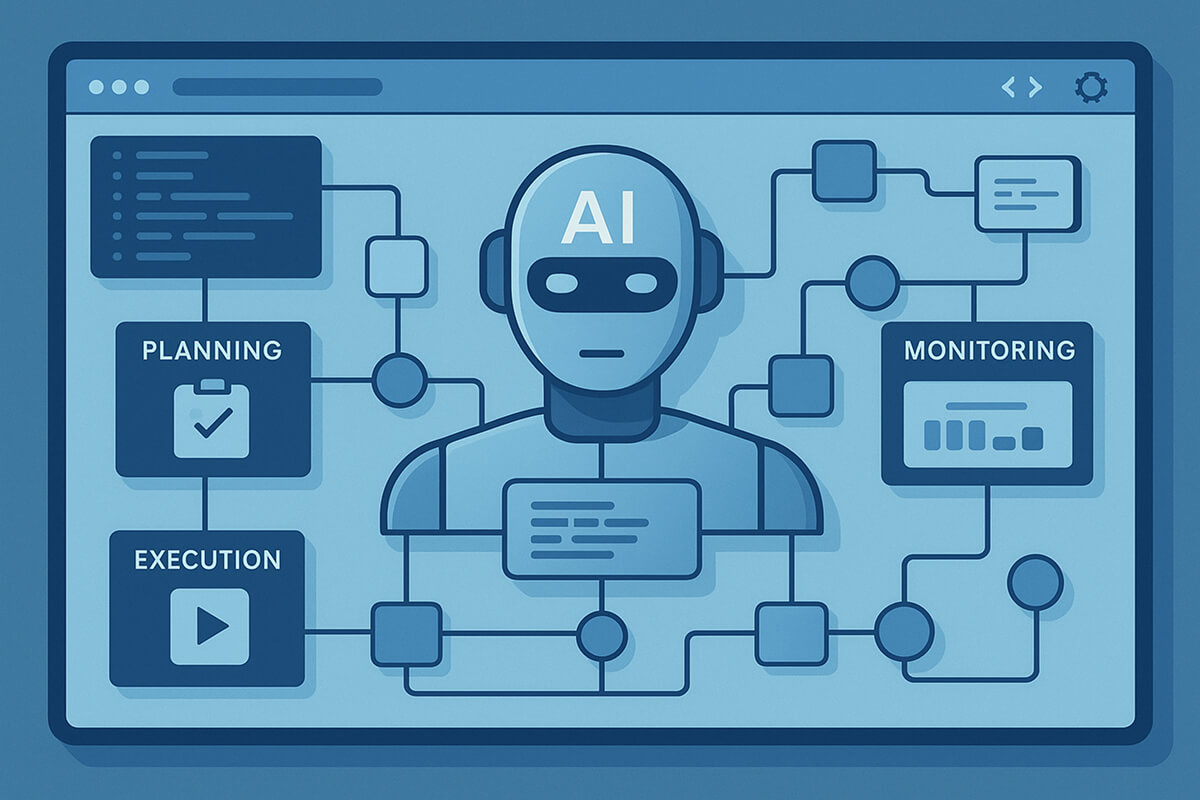

Agentic AI refers to AI systems that can decide, plan, and execute multi-step work with limited supervision, using tools and context to move toward a goal. That capability differs from “plain” generative AI (which produces content on request) and from autocomplete-style code assistants (which primarily respond to prompts line by line).

How is this different from traditional AI or “plain” GenAI?

- Traditional ML: predictions inside a bounded task (classify, rank, forecast).

- Generative AI: produce content on demand (text, code, images).

- Agentic AI: decide what to do next, then do it, using tools and evidence to move toward a goal.

The crucial difference is autonomy. A code assistant suggests a function; an agent files a ticket, drafts the function, runs tests, opens a PR, and then reworks the patch if the build fails.
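The draft, test, and rework loop described above can be sketched in a few lines. This is a minimal illustration rather than any particular framework's API: `run_agent`, `draft_patch`, and `run_tests` are hypothetical names, and the stand-in functions at the bottom take the place of real model and CI calls.

```python
from dataclasses import dataclass, field
from typing import Callable, List


@dataclass
class RunResult:
    succeeded: bool
    attempts: int
    history: List[str] = field(default_factory=list)


def run_agent(
    goal: str,
    draft_patch: Callable[[str, List[str]], str],
    run_tests: Callable[[str], bool],
    max_attempts: int = 3,
) -> RunResult:
    """Draft a patch, check it, and rework it until tests pass or attempts run out."""
    history: List[str] = []
    for attempt in range(1, max_attempts + 1):
        patch = draft_patch(goal, history)  # plan and act (hypothetical model call)
        passed = run_tests(patch)           # check the evidence
        history.append(f"attempt {attempt}: {'pass' if passed else 'fail'}")
        if passed:
            return RunResult(True, attempt, history)
    # Budget exhausted: stop and hand back to a human instead of looping forever.
    return RunResult(False, max_attempts, history)


# Stand-ins for illustration: the second draft "fixes" the build.
def fake_draft(goal: str, history: List[str]) -> str:
    return f"patch-v{len(history) + 1}"


def fake_tests(patch: str) -> bool:
    return patch == "patch-v2"


result = run_agent("fix flaky test", fake_draft, fake_tests)
print(result.succeeded, result.attempts, result.history)
```

The attempt cap is the important design choice here: an agent that cannot produce passing evidence within its budget should escalate rather than keep retrying.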

How is Agentic AI Evolving?

- Reactive → Proactive: from answering a prompt to noticing a problem and proposing a fix.

- Local → End-to-end: from single-file changes to multi-repo, multi-step workflows.

- Opaque → Observable: execution traces, inputs/outputs, and artifacts become first-class.

- Single agent → Multi-agent: specialized roles pass artifacts along a pipeline.

Core Capabilities at a Glance

- Reasoning: plans, dependencies, backoff/retry heuristics.

- Tool use: structured calls with least-privilege credentials.

- Memory/context: long-lived state, RAG over docs and code.

- Orchestration: planner-executor patterns; sub-agent handoffs with acceptance criteria.

How Agentic AI Is Disrupting Software Engineering

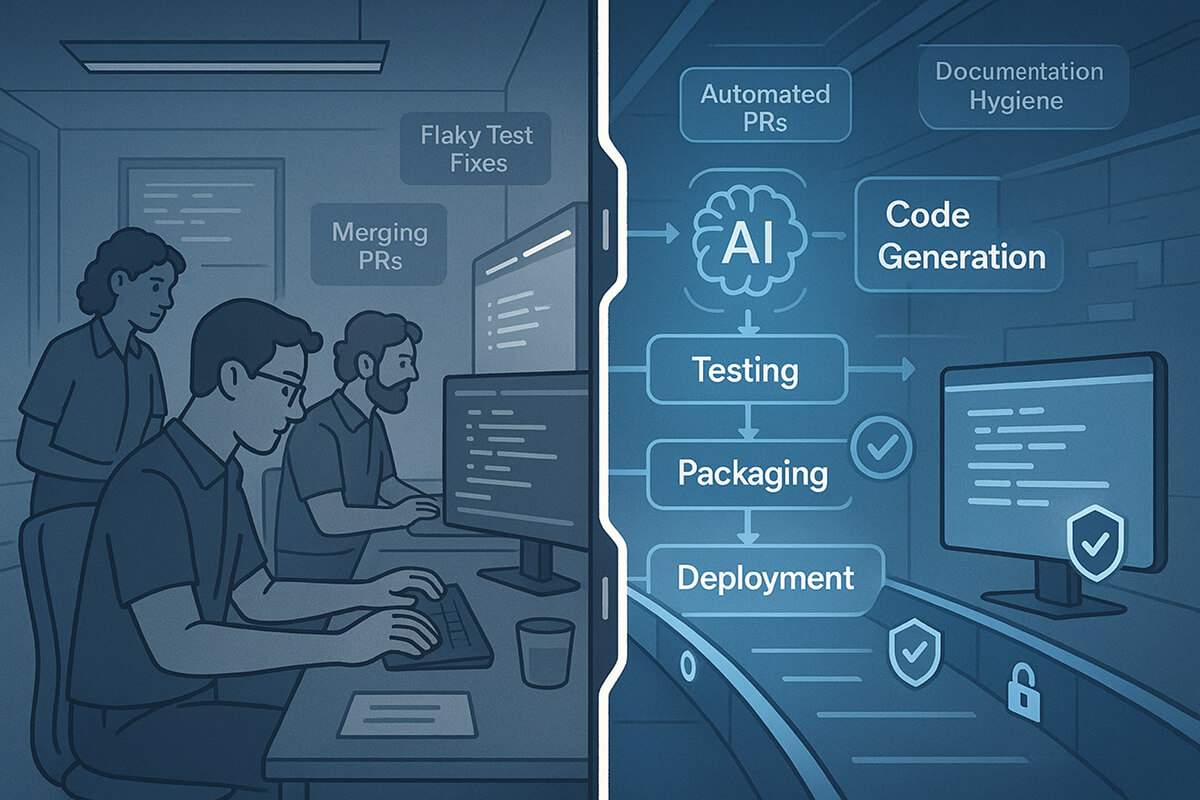

We’ve moved from assistants that help humans write lines of code to agents that automate entire workflows, including generation, testing, packaging, and even rollout, all under guardrails.

Early automation targets

- Boilerplate scaffolds, client stubs, and repetitive refactors.

- Dependency bumps with changelog checks and smoke tests.

- Flaky test quarantine and minimal-fix proposals.

- Documentation hygiene for READMEs, API references, and code comments.

- Ticket triage for clustering, deduplication, and first-draft reproduction steps.

Changing the Software Engineering Lifecycle

But agents don’t stop at those early targets. They can be integrated into every stage of the SDLC: they help write specs, suggest designs, add tests, and even ship code. The stages below show how one small change leads to many more.

Requirements & Discovery

- Agents summarize user feedback, classify bug reports by signature, and draft acceptance criteria.

- They extract business rules from specifications and create lists of non-functional requirements, such as error budgets, latency budgets, and guidelines for handling personal information.

Design

- They verify interfaces against current contracts and create UML/Mermaid diagrams for review.

Coding

- Beyond generating snippets, agents navigate call graphs, update dependent modules, and preserve invariants across layers.

- They suggest small changes with clear reasons and links to tests that show the change works.

Testing

- Property-based tests for edge cases, fuzz inputs for parsers, and snapshot tests for UI changes.

- Flake hunters bisect failures, quarantine unstable tests, and open targeted PRs.
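Bisecting a failure is easy to picture with a small sketch. The version below assumes a single "polluter" test whose earlier execution makes a victim test fail, and a deterministic check; `find_polluter` and the test names are hypothetical, and real flakes are often noisier than this.

```python
from typing import Callable, List, Sequence


def find_polluter(
    candidates: Sequence[str],
    victim_fails_after: Callable[[List[str]], bool],
) -> str:
    """Bisect `candidates` down to the one test that makes the victim fail.

    Assumes exactly one polluter and a deterministic check.
    """
    if not victim_fails_after(list(candidates)):
        raise ValueError("victim passes after all candidates; nothing to bisect")
    suspects = list(candidates)
    while len(suspects) > 1:
        mid = len(suspects) // 2
        first_half = suspects[:mid]
        # Keep whichever half still reproduces the failure.
        suspects = first_half if victim_fails_after(first_half) else suspects[mid:]
    return suspects[0]


# Stand-in for "run these tests, then run the victim and report whether it failed".
def check(subset: List[str]) -> bool:
    return "test_sets_global_tz" in subset


tests = ["test_a", "test_b", "test_sets_global_tz", "test_c", "test_d"]
print(find_polluter(tests, check))  # prints test_sets_global_tz
```

With n candidate tests this needs roughly log2(n) reruns, which is what makes it practical for an agent to do on every quarantined test.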

Operations and Maintenance

- Automate runbooks for low-risk events, such as rotating a key, restarting a stateless service, or clearing a cache.

- IaC proposals that address detected configuration drift, along with canary rollout suggestions.

- Security hygiene: known-vulnerability upgrades during maintenance windows, along with SBOM updates and SBOM diffs.

Impact on Roles & Skills

With these capabilities, AI agents are reshaping roles across software engineering. Most traditional roles are shifting in the following ways:

- Engineer → Supervisor/Integrator: fewer keystrokes, more intent writing, review, and integration.

- Tech lead → Orchestrator: define agent lanes, guardrails, and success metrics; manage the interplay of human and automated work.

- SRE/Platform → Policy owners: shape least-privilege tools, observability, audit, and change windows for agents.

- QA → Oracle designers: build the tests and policies that agents must satisfy; treat test health as a product.

Opportunities & Challenges

Agents can speed up work, cut bugs, and make life easier for developers. However, they also introduce new risks related to trust, rules, and security.

Opportunities

- Throughput: more PRs with smaller scopes and faster turnaround times.

- Quality: more varied tests, consistent style, and fewer long-tail defects.

- Developer experience: less toil, fewer yak-shaves, faster onboarding.

Challenges

- Trust: How can we check agent changes at scale without slowing down the review process?

- Governance: tagging code that agents write, keeping track of who did what, and making sure everyone is accountable.

- Security: prompt injection, tool misuse, and logs that leak secrets.

- Ownership: licensing, provenance, and IP policies for generated code.

- Debt: Little fixes can add up to big problems without explicit ownership of the design.

What Engineers Need to Do to Thrive

Agentic AI changes the daily rhythm of software work. You’ll write less code by hand and spend more time setting goals, checking evidence, and drawing safe boundaries for fast AI teammates. The list below outlines the steps to transition into that new role.

1. Embrace the Mindset Shift

Move from “I must write every line” to “I must define intent, evidence, and boundaries.” Your leverage comes from:

- Writing goals as testable contracts (preconditions, postconditions, acceptance tests).

- Designing seams (APIs and modules) that are easy for agents to change safely.

- Expecting to curate and integrate more than you author.

Treat agents like teammates who are fast but literal. Provide clear objectives, robust guidelines, and tightly scoped permissions.

2. Upskill for the Agentic Era

Agentic work requires fresh skills: crafting concise prompts, chaining small tasks, utilizing tools effectively, and monitoring for security gaps.

1. Intent and prompt design

- Describe goals, constraints, and evidence required for success.

- Prefer small objectives chained together over a single, comprehensive “do everything” instruction.

- Make oracles explicit: which tests must pass, and which metrics must not regress.
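One way to make goals, constraints, and oracles concrete is to write them as data that a pipeline can evaluate. The sketch below is an assumption about how that might look: `Task`, `Oracle`, the billing-service goal, the 5% latency budget, and the evidence keys are all hypothetical.

```python
from dataclasses import dataclass
from typing import Callable, Dict, List


@dataclass(frozen=True)
class Oracle:
    name: str
    check: Callable[[Dict[str, float]], bool]


@dataclass(frozen=True)
class Task:
    goal: str
    constraints: List[str]
    oracles: List[Oracle]

    def evaluate(self, evidence: Dict[str, float]) -> List[str]:
        """Return the names of oracles that the evidence fails to satisfy."""
        return [o.name for o in self.oracles if not o.check(evidence)]


task = Task(
    goal="Migrate request logging in the billing service to OpenTelemetry",
    constraints=["touch only files under billing/", "add no new dependencies"],
    oracles=[
        Oracle("all tests pass", lambda e: e["tests_failed"] == 0),
        Oracle(
            "p95 latency within 5% of baseline",
            lambda e: e["p95_ms"] <= e["baseline_p95_ms"] * 1.05,
        ),
    ],
)

# Invented evidence values: tests are green, but latency regressed by more than 5%.
failed = task.evaluate({"tests_failed": 0, "p95_ms": 130.0, "baseline_p95_ms": 120.0})
print(failed)  # ['p95 latency within 5% of baseline']
```

Because each oracle is a named check, a rejected run reports exactly which condition failed instead of a vague "not good enough".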

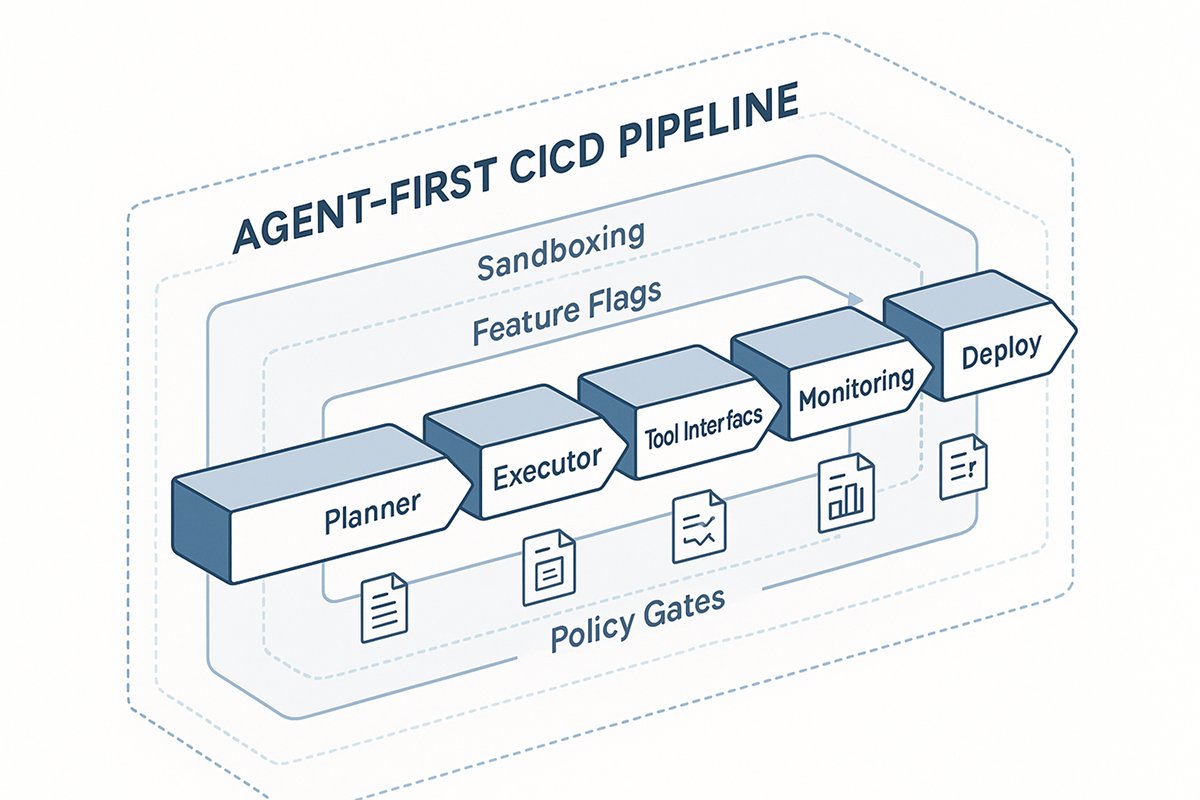

2. Agent orchestration

- Learn planner-executor patterns, sub-agent contracts, and failure modes (retry, backoff, circuit breakers).

- Understand how to structure handoffs, including artifact formats, acceptance criteria, and rollback paths.
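The failure modes named above (retry, backoff, circuit breakers) can be combined in a small sketch. It is deliberately simplified: the breaker counts consecutive failures and has no timed reset, and `CircuitBreaker`, `retry_with_backoff`, and their defaults are hypothetical names and values.

```python
import time
from typing import Callable, List, TypeVar

T = TypeVar("T")


class CircuitOpen(Exception):
    """Raised when the breaker refuses further calls."""


class CircuitBreaker:
    def __init__(self, failure_threshold: int = 3) -> None:
        self.failure_threshold = failure_threshold
        self.consecutive_failures = 0

    def call(self, fn: Callable[[], T]) -> T:
        if self.consecutive_failures >= self.failure_threshold:
            raise CircuitOpen("too many consecutive failures; escalate to a human")
        try:
            result = fn()
        except Exception:
            self.consecutive_failures += 1
            raise
        self.consecutive_failures = 0  # any success closes the breaker again
        return result


def retry_with_backoff(
    fn: Callable[[], T],
    attempts: int = 3,
    base_delay: float = 0.5,
    sleep: Callable[[float], None] = time.sleep,
) -> T:
    """Retry `fn`, doubling the delay after each failure."""
    for attempt in range(attempts):
        try:
            return fn()
        except CircuitOpen:
            raise  # never retry through an open breaker
        except Exception:
            if attempt == attempts - 1:
                raise
            sleep(base_delay * (2 ** attempt))
    raise RuntimeError("attempts must be at least 1")


# Stand-in step that fails once, then succeeds.
calls = {"n": 0}


def flaky_step() -> str:
    calls["n"] += 1
    if calls["n"] < 2:
        raise RuntimeError("transient failure")
    return "ok"


breaker = CircuitBreaker(failure_threshold=2)
delays: List[float] = []  # record delays instead of actually sleeping
outcome = retry_with_backoff(lambda: breaker.call(flaky_step), sleep=delays.append)
print(outcome, delays)  # ok [0.5]
```

Retries handle transient faults; the breaker handles persistent ones by stopping the agent from hammering a tool that keeps failing.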

3. Context/memory management

- Build compact, high-signal context packs, including ADRs, style guides, error budgets, and key interfaces.

- Use retrieval with guardrails: redaction, access controls, and version pinning.

4. Tool integration

- Wrap tools with least-privilege interfaces.

- Isolate credentials and rotate tokens.

- Log tool calls, excluding sensitive information.
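A least-privilege wrapper with redacted logging might look like the sketch below. `ToolBox`, `redact`, and the secret pattern are hypothetical and illustrative only; a real deployment would rely on a vetted secret scanner and a proper credential store.

```python
import logging
import re
from typing import Callable, Dict, FrozenSet

logger = logging.getLogger("agent.tools")

# Illustrative pattern only; it catches key=value style secrets and little else.
SECRET_PATTERN = re.compile(r"(?i)\b(token|password|api[_-]?key)=\S+")


def redact(text: str) -> str:
    """Mask the value of anything that looks like a key=value secret."""
    return SECRET_PATTERN.sub(lambda m: f"{m.group(1)}=[REDACTED]", text)


class ToolBox:
    """Expose only allowlisted tools and log each call with secrets masked."""

    def __init__(
        self,
        tools: Dict[str, Callable[[str], str]],
        allowed: FrozenSet[str],
    ) -> None:
        self._tools = tools
        self._allowed = allowed

    def call(self, name: str, arg: str) -> str:
        if name not in self._allowed:
            raise PermissionError(f"tool {name!r} is not permitted in this lane")
        logger.info("tool=%s arg=%s", name, redact(arg))
        return self._tools[name](arg)


# Stand-in tools: this lane may call "echo" but not "deploy".
tools = {"echo": lambda s: s.upper(), "deploy": lambda s: "deployed"}
box = ToolBox(tools, allowed=frozenset({"echo"}))

print(box.call("echo", "hi"))  # HI
print(redact("curl -H token=abc123 https://example.com"))
```

Keeping the allowlist outside the agent's control is the point: the agent can ask for any tool, but only the wrapper decides what actually runs.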

5. Monitoring & observability

- Emit traces for each agent run, including prompt/version, tool calls, artifacts, and outcomes.

- Track false-fix rate, PR rejection reasons, and post-merge defects.

- Build dashboards that compare agent vs. human change outcomes.

6. Security awareness

- Validate inputs to tools (sanitize file paths, commands, URLs).

- Defend against prompt injection when agents read untrusted content (docs, logs, tickets).

- Gate high-blast-radius actions with policy and human approvals.
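As one example of validating tool inputs, the sketch below confines file paths to a workspace directory. `resolve_inside` and the `agent-workspace` directory are hypothetical; commands and URLs need their own, separate checks.

```python
from pathlib import Path


def resolve_inside(workspace: Path, requested: str) -> Path:
    """Resolve `requested` and refuse anything that escapes `workspace`."""
    root = workspace.resolve()
    # Resolving first collapses ".." segments and follows symlinks that exist.
    target = (root / requested).resolve()
    if target != root and root not in target.parents:
        raise ValueError(f"path escapes workspace: {requested!r}")
    return target


workspace = Path("agent-workspace")
print(resolve_inside(workspace, "src/app.py"))

for attempt in ("../secrets.env", "/etc/passwd", "src/../../outside.txt"):
    try:
        resolve_inside(workspace, attempt)
    except ValueError as err:
        print("blocked:", err)
```

Checking the resolved path rather than the raw string is what defeats `..` tricks and absolute paths supplied by untrusted content.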

3. Architecting for Agent-First Workflows

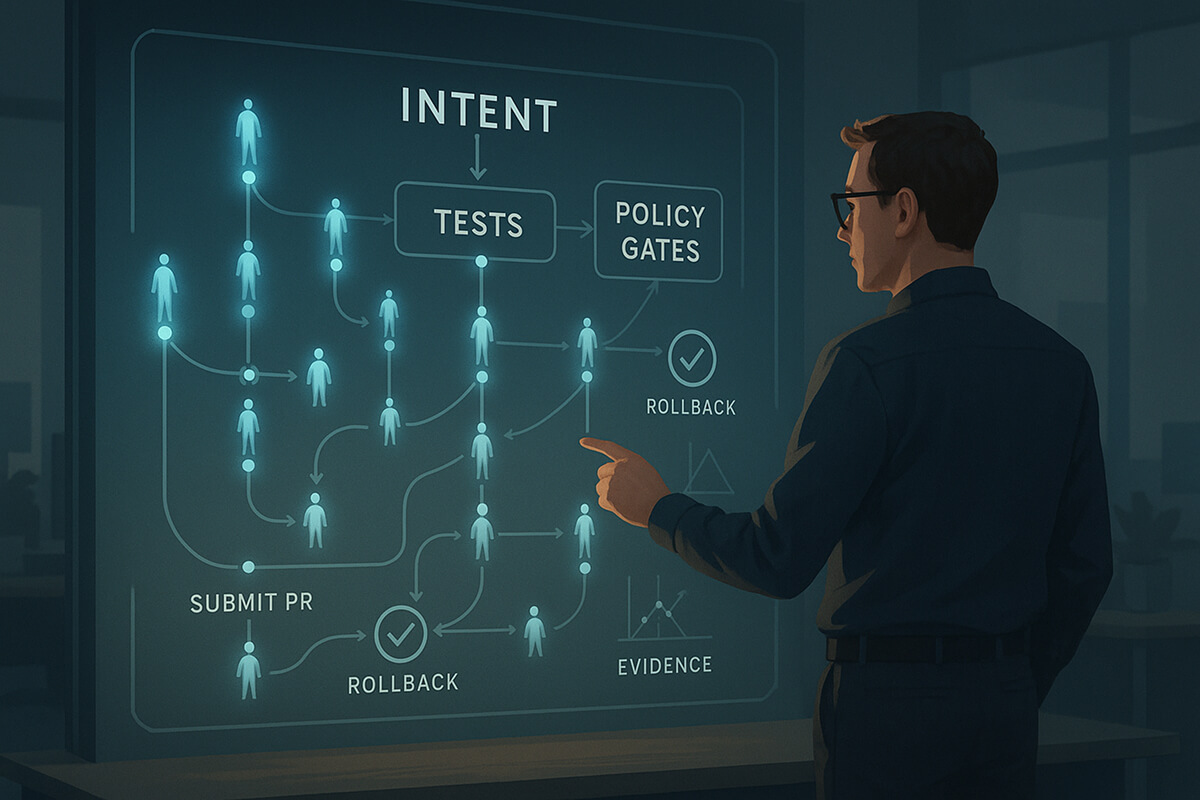

If your system isn’t built for agents, they’ll break it or you’ll throttle them. Start by writing verifiable contracts, limiting blast radius, and logging everything so agents can act safely and teams can trace every change.

1. Design for contracts, not vibes

- Stabilize interfaces and schemas; publish invariants as machine-checkable contracts.

- Adopt behavioral checks (pre- and postconditions) that agents can automatically assert.
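Pre- and postconditions can be attached to a function so that any caller, human or agent, trips them automatically. This is a minimal sketch; the `contract` decorator and `apply_discount` are hypothetical examples, not an existing library.

```python
from functools import wraps
from typing import Any, Callable


def contract(pre: Callable[..., bool], post: Callable[[Any], bool]) -> Callable:
    """Wrap a function so its pre- and postconditions are checked on every call."""

    def decorate(fn: Callable) -> Callable:
        @wraps(fn)
        def wrapper(*args: Any, **kwargs: Any) -> Any:
            if not pre(*args, **kwargs):
                raise ValueError(f"precondition failed for {fn.__name__}")
            result = fn(*args, **kwargs)
            if not post(result):
                raise AssertionError(f"postcondition failed for {fn.__name__}")
            return result

        return wrapper

    return decorate


@contract(
    pre=lambda cents, percent: cents >= 0 and 0 <= percent <= 100,
    post=lambda result: result >= 0,
)
def apply_discount(cents: int, percent: int) -> int:
    """Return the price in cents after an integer percentage discount."""
    return cents - (cents * percent) // 100


print(apply_discount(1000, 15))  # 850
```

If an agent rewrites the body of `apply_discount`, the contract stays in place and fails loudly when the new code violates it.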

2. Make “done” verifiable

- For each agent lane, define oracles, including unit/integration tests, lint rules, policy checks, and performance budgets.

- If success can’t be checked automatically, bring a human into the loop earlier.

3. Separate plans from permissions

- Agents may propose high-impact actions (schema change, migration plan).

- Execution requires HITL approval, canarying, and change windows.
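The split between proposing and executing can be enforced in code. In the sketch below, `Proposal`, `execute`, and the reviewer name are hypothetical, and canarying is left out for brevity.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class Proposal:
    action: str
    high_impact: bool
    approved_by: Optional[str] = None  # set by a human reviewer, never by the agent


def execute(proposal: Proposal, in_change_window: bool) -> str:
    """Run a proposal only if its approval and timing requirements are met."""
    if proposal.high_impact:
        if proposal.approved_by is None:
            raise PermissionError("high-impact action needs human approval")
        if not in_change_window:
            raise PermissionError("high-impact action is outside the change window")
    return f"executed: {proposal.action}"


migration = Proposal(action="add column orders.currency", high_impact=True)
try:
    execute(migration, in_change_window=True)
except PermissionError as err:
    print("blocked:", err)

migration.approved_by = "reviewer@example.com"
print(execute(migration, in_change_window=True))
```

The agent is free to draft the migration plan; what it cannot do is supply its own approval.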

4. Observability from day one

- Log inputs/outputs for every tool call, with redaction.

- Pin model versions and prompts; store artifacts for replay.

5. Limit blast radius

- Run in sandboxes, forks, or feature branches.

- Use feature flags and canary rollouts for runtime changes.

- Enforce diff-size caps and “one-concern-per-PR” rules for agent submissions.

6. Integrate deeply with existing pipelines

- Version control: dedicated agent branches and labels.

- Code review: required reviewers, evidence templates, provenance tags.

- Testing frameworks: per-PR gates plus post-merge monitors.

- CI/CD: distinct lanes for agent-authored PRs with stricter checks.

4. Establish Governance, Trust, and Quality Guardrails

Speed without guardrails is risky. Before scaling agents, put policies, logs, and review gates in place so you can track who (or what) changed what and roll back quickly if it goes wrong.

1. Human-in-the-loop (HITL)

- Human attention is required for schema changes, infrastructure updates, and merges to protected branches.

- Even when using agents, humans should ask for evidence in PRs about:

  - Why the changes were made

  - What files were changed

  - Results of the tests

  - Differences in performance

  - Steps to roll back the changes

2. Policy as code

- Encode merge gates, risk thresholds, allowed tool actions, and change windows.

- Auto-block when: diff too large, tests insufficient, ownership unknown, or protected files touched.
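The auto-block conditions above translate almost directly into a merge gate. The threshold, the protected paths, and the `PullRequest` fields in this sketch are assumptions chosen for illustration, not recommended values.

```python
from dataclasses import dataclass
from fnmatch import fnmatch
from typing import List, Optional

MAX_CHANGED_LINES = 400  # assumed diff-size cap
PROTECTED_GLOBS = ["migrations/*", ".github/workflows/*"]  # assumed protected paths


@dataclass
class PullRequest:
    changed_lines: int
    files: List[str]
    tests_added: int
    owner: Optional[str]  # owning team, if one could be determined


def block_reasons(pr: PullRequest) -> List[str]:
    """Return every reason this PR must be blocked; an empty list means it may proceed."""
    reasons: List[str] = []
    if pr.changed_lines > MAX_CHANGED_LINES:
        reasons.append("diff too large")
    if pr.tests_added == 0:
        reasons.append("tests insufficient")
    if pr.owner is None:
        reasons.append("ownership unknown")
    if any(fnmatch(path, glob) for path in pr.files for glob in PROTECTED_GLOBS):
        reasons.append("protected files touched")
    return reasons


risky = PullRequest(
    changed_lines=520,
    files=["migrations/0007_add_column.sql"],
    tests_added=0,
    owner=None,
)
print(block_reasons(risky))
```

Returning all reasons at once, rather than stopping at the first, gives the agent (or its supervisor) a complete list to fix in one pass.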

3. Audit & provenance

- Immutable logs: prompts, model versions, tool calls, artifact hashes.

- PR labels for agent contributions (e.g., provenance: agentic).

- SBOM updates and license checks for any third-party code touched.
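For the immutable-log item, one common approximation is a hash chain, which makes tampering detectable rather than impossible. The sketch below keeps the log in memory; `append_entry`, `verify`, and the event fields are hypothetical, and a production system would also need write-once storage.

```python
import hashlib
import json
from typing import Any, Dict, List

GENESIS = "0" * 64  # placeholder "previous hash" for the first entry


def _digest(prev_hash: str, event: Dict[str, Any]) -> str:
    payload = json.dumps({"prev": prev_hash, "event": event}, sort_keys=True)
    return hashlib.sha256(payload.encode("utf-8")).hexdigest()


def append_entry(log: List[Dict[str, Any]], event: Dict[str, Any]) -> None:
    """Append `event`, chaining it to the previous entry's hash."""
    prev_hash = log[-1]["hash"] if log else GENESIS
    log.append({"prev": prev_hash, "event": event, "hash": _digest(prev_hash, event)})


def verify(log: List[Dict[str, Any]]) -> bool:
    """Recompute every hash; an edited, removed, or reordered entry breaks the chain."""
    prev_hash = GENESIS
    for entry in log:
        if entry["prev"] != prev_hash or _digest(prev_hash, entry["event"]) != entry["hash"]:
            return False
        prev_hash = entry["hash"]
    return True


audit_log: List[Dict[str, Any]] = []
append_entry(audit_log, {"model": "model-v1", "tool": "run_tests", "artifact": "abc123"})
append_entry(audit_log, {"model": "model-v1", "tool": "open_pr", "artifact": "def456"})
print(verify(audit_log))  # True

audit_log[0]["event"]["tool"] = "deploy"  # simulate tampering
print(verify(audit_log))  # False
```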

4. Risk management

- Sort agent lanes by blast radius: low (docs), medium (internal libs), and high (prod code, schemas).

- Do red-team exercises to test for prompt injection and tool abuse.

- Ensure disaster recovery by maintaining backups, conducting dry runs, and testing rollbacks.

Metrics that Matter (Outcomes, Not Hype)

Track agent lanes the same way you track services:

- Throughput: PRs/week from agents; time-to-merge vs. human PRs.

- Quality: post-merge defect rate; reverts within 7/14 days; escaped defects per KLOC.

- Reliability: false-fix rate; flake rate after quarantine; MTTR deltas for incidents touched by agents.

- Safety: policy-block counts by reason; % of agent PRs requiring escalation.

- Developer experience: review time distribution; context-switch reduction; time spent on toil.
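Two of these metrics, revert rate within a window and time-to-merge, are straightforward to compute once merged PRs are tagged by author type. The record shape and the sample values below are invented purely for illustration.

```python
import statistics
from dataclasses import dataclass
from typing import List, Optional


@dataclass
class MergedPR:
    author_kind: str                     # "agent" or "human"
    hours_to_merge: float
    reverted_after_days: Optional[int]   # None if never reverted


def revert_rate(prs: List[MergedPR], kind: str, window_days: int = 14) -> float:
    """Fraction of `kind` PRs reverted within `window_days` of merging."""
    cohort = [p for p in prs if p.author_kind == kind]
    if not cohort:
        return 0.0
    reverted = [
        p for p in cohort
        if p.reverted_after_days is not None and p.reverted_after_days <= window_days
    ]
    return len(reverted) / len(cohort)


def median_hours_to_merge(prs: List[MergedPR], kind: str) -> float:
    return statistics.median(p.hours_to_merge for p in prs if p.author_kind == kind)


# Invented sample data, not real measurements.
sample = [
    MergedPR("agent", 2.0, None),
    MergedPR("agent", 4.0, 3),
    MergedPR("agent", 6.0, 20),
    MergedPR("human", 10.0, None),
    MergedPR("human", 30.0, 7),
]

for kind in ("agent", "human"):
    print(kind, round(revert_rate(sample, kind), 2), median_hours_to_merge(sample, kind))
```

Comparing the same metric across agent and human cohorts is what turns a throughput number into evidence about whether a lane is safe to scale.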

If numbers improve and risk stays bounded, scale the lane. If not, tighten the oracle or reduce the scope.

FAQs

1. How does agentic AI change the relationship between software engineers and automation tools?

Engineers set intent and guardrails; agents execute the steps, produce evidence (tests, logs, diffs), and loop on failures. Humans review, approve, and control blast radius via policies, branches, and canaries.

2. What new roles or skills are emerging for engineers in agentic AI environments?

Supervisor/Integrator, Orchestrator (tech lead), QA-oracle, and Platform policy owner. Skills: intent writing, planner-executor orchestration, context/RAG curation, least-privilege tool wrapping, and security/metrics literacy.

3. How can organizations balance innovation and governance when adopting agentic AI?

Start with low-risk lanes and measurable “done” (tests, budgets, policy checks). Use policy-as-code, provenance tags, immutable logs, and require human approval for high-impact changes.

4. What ethical considerations arise when agentic AI systems make autonomous engineering decisions?

Accountability and transparency (who/what changed what and why), privacy and data minimization, safe tool use (no overreach), license/provenance tracking, and human oversight where judgment is needed.

5. How can traditional engineering teams transition smoothly to agentic AI-driven workflows?

Pilot one visible lane (e.g., dependency bumps + smoke tests), make success machine-checkable, separate proposals from execution, instrument runs end-to-end, train the team, and use canaries/rollbacks for safety.

Conclusion

Agentic AI won’t take engineers’ jobs. What it will do is eliminate tedious work and reward teams that provide the agent with clear goals, clean interfaces, and solid proof of success. Used well, it lets you ship faster, eliminate minor annoyances, and raise the overall quality bar; used carelessly, it can spread bad habits at machine speed.

How to stay on the winning side:

- Start small and visible. Pick one low-risk task and trace every step.

- Make “done” machine-checkable. Tests, budgets, and policy gates, not hunches.

- Keep humans in the loop where judgment matters. Reviews for high-impact changes.

- Treat agents like microservices. They must be observable, permission-scoped, and governed by code.

If you follow these steps, you won’t just keep pace with the agentic shift; you’ll set the bar for responsible, measurable, and developer-friendly automation.