Unit testing is crucial to software quality, yet it remains one of development’s most tedious bottlenecks.

Developers often face an impossible choice: maintain rigorous test coverage or meet looming deadlines. AI-generated unit tests are changing this equation.

By using machine learning to generate test suites automatically, these tools can save developers hours of manual test writing. As codebases grow more complex and delivery cycles shrink, AI testing tools are becoming a competitive necessity for teams that can’t afford to compromise on either speed or quality.

What are AI-generated unit tests?

AI-generated unit tests represent the intersection of artificial intelligence and software quality assurance. They are automated test cases created by AI systems rather than manually written by developers. These tests examine individual functions, methods, or classes to verify that they behave as expected across various inputs and conditions.

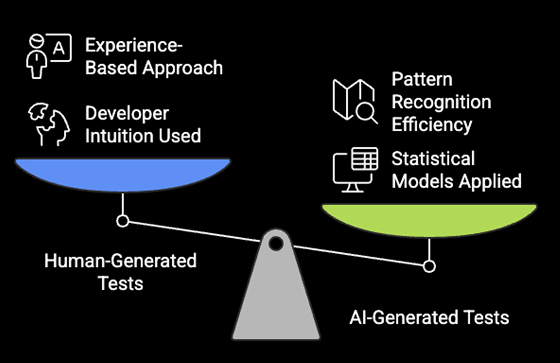

How do AI test cases differ from traditional unit tests?

Traditional unit tests typically follow this process:

- A developer writes code to implement a feature.

- The same developer (or another team member) manually creates test cases.

- Tests are run to verify the code’s functionality.

- Developers maintain tests as code evolves.

This manual approach has several drawbacks: it’s time-intensive, often subjective, and limited by the developer’s ability to anticipate edge cases.

In contrast, AI test generators:

- Analyze existing code to understand its structure and purpose.

- Automatically generate test cases covering various scenarios and edge cases.

- Create test code that can be run immediately or after developer review.

- Can continuously adapt as code changes.

How do AI-generated tests work?

AI-powered test generation employs several sophisticated techniques to create effective unit tests.

Code analysis

Most AI test generators begin with static code analysis—examining the code without executing it. This analysis includes:

- Function signature analysis: Examining parameters, return types, and method names to understand what the code should do.

- Control flow analysis: Tracing the paths code execution might take through conditionals, loops, and exception handling.

- Data flow analysis: Tracking how variables are used and modified to identify boundary conditions and edge cases.

- Type inference: Determining appropriate test inputs based on parameter types and constraints.
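The signature-analysis and type-inference steps above can be sketched with Python's standard `inspect`, `typing`, and `itertools` modules. This is a minimal illustration, not how any particular tool works. The `BOUNDARY_VALUES` table, the `candidate_inputs` helper, and the `truncate` function under analysis are all hypothetical. Lookups also match only exact, unparameterized annotations such as `int` or `str`.

```python
import inspect
import itertools
import sys
from typing import get_type_hints

# Hypothetical boundary values per annotated type. Real tools use their own,
# much richer heuristics; this table is only an illustration.
BOUNDARY_VALUES = {
    int: [0, 1, -1, sys.maxsize, -sys.maxsize - 1],
    str: ["", " ", "a" * 1000],
    list: [[], [None]],
}


def candidate_inputs(func):
    """Return every combination of boundary values for func's annotated parameters."""
    hints = get_type_hints(func)
    pools = []
    for name in inspect.signature(func).parameters:
        # Unannotated or unrecognized types fall back to a single None probe.
        pools.append(BOUNDARY_VALUES.get(hints.get(name), [None]))
    return list(itertools.product(*pools))


def truncate(text: str, limit: int) -> str:
    """Hypothetical function under analysis: keep at most `limit` characters."""
    return text[:limit]
```

Calling `candidate_inputs(truncate)` yields 15 argument pairs (3 strings × 5 integers). Running `truncate` on those pairs shows that a negative `limit` silently trims characters from the end instead of raising an error. Whether that behavior is intended is exactly the kind of question a human reviewer still has to answer.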

For example, when analyzing a function that validates email addresses, an AI tool should recognize that the tests need to cover valid emails, malformed addresses, empty strings, and excessively long inputs.
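A test suite along those lines might look like the following sketch, which uses Python's built-in `unittest`. The `is_valid_email` function and its simplified pattern are hypothetical stand-ins that are far looser than the full address grammar. The 254-character cap is an assumption based on the commonly cited maximum address length.

```python
import re
import unittest

# Hypothetical validator used only for illustration (assumed length cap).
MAX_EMAIL_LENGTH = 254
_EMAIL_PATTERN = re.compile(r"[^@\s]+@[^@\s]+\.[^@\s]+")


def is_valid_email(address: str) -> bool:
    """Return True if the address looks like local@domain.tld and is not too long."""
    if not address or len(address) > MAX_EMAIL_LENGTH:
        return False
    return _EMAIL_PATTERN.fullmatch(address) is not None


class TestIsValidEmail(unittest.TestCase):
    def test_accepts_well_formed_addresses(self):
        for address in ["user@example.com", "first.last@sub.example.org"]:
            with self.subTest(address=address):
                self.assertTrue(is_valid_email(address))

    def test_rejects_malformed_addresses(self):
        for address in ["plainaddress", "user@@example.com", "user@example",
                        "user name@example.com", "@example.com"]:
            with self.subTest(address=address):
                self.assertFalse(is_valid_email(address))

    def test_rejects_empty_string(self):
        self.assertFalse(is_valid_email(""))

    def test_length_boundary(self):
        # Exactly at the limit is accepted; one character over is rejected.
        domain = "@example.com"
        at_limit = "a" * (MAX_EMAIL_LENGTH - len(domain)) + domain
        self.assertTrue(is_valid_email(at_limit))
        self.assertFalse(is_valid_email("a" + at_limit))
```

Run it with `python -m unittest`. The four test methods map one-to-one onto the four input categories listed above.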

Natural language processing and large language models

Modern AI test generators increasingly use large language models (LLMs) trained on vast code repositories to infer what code is meant to do and generate appropriate tests:

- LLMs can “understand” code comments and function names to infer expected behavior.

- These models learn from millions of existing test cases to recognize effective testing patterns.

- Some tools allow developers to provide test descriptions in natural language, which the AI translates into executable test code.

- Context-aware models can understand the surrounding code and the application domain to create more relevant tests.

For instance, GitHub Copilot or similar AI coding assistants can generate test cases based on function names and docstrings, predicting what a developer would write as a test.
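To illustrate how a descriptive name and docstring constrain the tests, consider the hypothetical `slugify` function below. The tests are a hand-written sketch of the kind of suggestion an assistant might make, not actual GitHub Copilot output. Each test method corresponds to one clause of the docstring.

```python
import re
import unittest


def slugify(title: str) -> str:
    """Convert a title to a URL slug.

    Lowercases the text, collapses every run of non-alphanumeric
    characters into a single hyphen, and strips leading/trailing hyphens.
    """
    slug = re.sub(r"[^a-z0-9]+", "-", title.lower())
    return slug.strip("-")


class TestSlugify(unittest.TestCase):
    # "Lowercases the text"
    def test_lowercases_text(self):
        self.assertEqual(slugify("Hello"), "hello")

    # "collapses every run of non-alphanumeric characters into a single hyphen"
    def test_collapses_runs_of_separators(self):
        self.assertEqual(slugify("AI  --  generated tests"), "ai-generated-tests")

    # "strips leading/trailing hyphens"
    def test_strips_leading_and_trailing_hyphens(self):
        self.assertEqual(slugify("  !Unit Testing!  "), "unit-testing")
```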

Runtime behavior analysis

Some advanced tools incorporate dynamic analysis by:

- Executing code with various inputs to observe actual behavior.

- Performing fuzzing (providing random or unexpected inputs) to discover edge cases.

- Using symbolic execution to explore code paths systematically by solving the constraints that lead to each branch.

- Leveraging existing test cases to learn expected outputs for similar functions.

This approach is particularly valuable for complex functions where static analysis alone might miss subtle behaviors or dependencies.
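The fuzzing idea can be sketched in a few lines. This is a minimal random fuzzer, not the kind of coverage-guided engine that production tools use. The `fuzz` helper, its alphabet, and the buggy `parse_version` target are hypothetical. `ValueError` is treated as the documented failure mode, and any other exception is recorded as a finding.

```python
import random


def parse_version(text: str) -> tuple[int, int, int]:
    """Hypothetical target: parse 'MAJOR.MINOR.PATCH' into integers."""
    parts = text.split(".")
    # Planted bug: assumes exactly three parts, so "7" or "1.2" raise IndexError
    return int(parts[0]), int(parts[1]), int(parts[2])


def fuzz(func, expected_errors=(ValueError,), iterations=500, seed=0):
    """Call func on random strings; map each unexpected exception type to the first input that raised it."""
    rng = random.Random(seed)  # fixed seed keeps findings reproducible
    alphabet = "0123456789."
    findings = {}
    for _ in range(iterations):
        candidate = "".join(rng.choice(alphabet) for _ in range(rng.randint(0, 8)))
        try:
            func(candidate)
        except expected_errors:
            pass  # documented failure mode, not a finding
        except Exception as exc:  # anything else is a potential bug
            findings.setdefault(type(exc).__name__, candidate)
    return findings
```

`fuzz(parse_version)` reports an `IndexError` together with an input that has fewer than three dot-separated parts, such as a bare digit string. This is the planted bug, and the reported input can be turned directly into a regression test.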

Integration with development environments

Most AI test generators integrate directly with existing development workflows through:

- IDE plugins that suggest tests as developers write code.

- CI/CD pipeline integrations that automatically generate tests for new or modified code.

- Command-line tools that can be triggered during build processes.

- Git hooks that analyze code changes and propose appropriate tests.
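The Git-hook idea can be roughly sketched as follows. The script lists staged Python files and flags those without a matching test file, and a hook could then pass that list to a test generator. The `tests/test_<name>.py` layout and both helper names are assumptions. Only the `git diff --cached --name-only --diff-filter=ACM` command is standard Git.

```python
import subprocess
from pathlib import PurePosixPath


def staged_python_files() -> list[str]:
    """Return staged (added, copied, or modified) .py files, as reported by Git."""
    result = subprocess.run(
        ["git", "diff", "--cached", "--name-only", "--diff-filter=ACM"],
        capture_output=True,
        text=True,
        check=True,
    )
    return [line for line in result.stdout.splitlines() if line.endswith(".py")]


def missing_tests(changed: list[str], existing_tests: set[str]) -> list[str]:
    """Return changed source files with no tests/test_<name>.py counterpart (assumed layout)."""
    missing = []
    for path in changed:
        parts = PurePosixPath(path).parts
        if parts and parts[0] == "tests":
            continue  # test files themselves need no counterpart
        expected = f"tests/test_{PurePosixPath(path).name}"
        if expected not in existing_tests:
            missing.append(path)
    return missing
```

In a `pre-commit` hook you might call `missing_tests(staged_python_files(), {p.as_posix() for p in Path("tests").glob("test_*.py")})` (after `from pathlib import Path`) and print the result. What happens next, such as invoking a generator, depends on the tool.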

Tools like Diffblue Cover, TestCraft, or Testsigma offer various integration options, making AI-generated tests accessible within developers’ familiar environments.

Benefits of automated unit test generation

Adopting AI-generated unit tests offers numerous advantages that can dramatically improve development efficiency and code quality.

Faster test creation

Perhaps the most immediate benefit is the significant time savings.

- Tests that might take hours to write manually can be generated in seconds.

- Boilerplate test code (setup, teardown, common assertions) is created automatically.

- Test scaffolding for new projects can be established immediately.

- Updates to tests when code changes are handled with minimal developer intervention.
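To show what that boilerplate covers, here is the kind of `unittest` scaffold a generator might emit: per-test setup, matching teardown, and straightforward assertions. The `save_settings` function and the test names are hypothetical. They exist only to give the scaffold something to exercise.

```python
import json
import tempfile
import unittest
from pathlib import Path


def save_settings(path: Path, settings: dict) -> None:
    """Hypothetical function under test: write settings as JSON."""
    path.write_text(json.dumps(settings, sort_keys=True))


class TestSaveSettings(unittest.TestCase):
    def setUp(self):
        # Setup: an isolated temporary directory for every test
        self._tmp = tempfile.TemporaryDirectory()
        self.config_path = Path(self._tmp.name) / "settings.json"

    def tearDown(self):
        # Teardown: remove the directory and everything in it
        self._tmp.cleanup()

    def test_round_trip(self):
        save_settings(self.config_path, {"theme": "dark", "retries": 3})
        self.assertEqual(json.loads(self.config_path.read_text()),
                         {"theme": "dark", "retries": 3})

    def test_overwrites_existing_file(self):
        save_settings(self.config_path, {"theme": "light"})
        save_settings(self.config_path, {"theme": "dark"})
        self.assertEqual(json.loads(self.config_path.read_text()), {"theme": "dark"})
```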

A study by Diffblue found that developers spend approximately 35–50% of their time writing tests. AI test generation can potentially reclaim much of this time for more creative problem-solving.

Higher coverage

AI systems excel at identifying edge cases that human developers might overlook, such as:

- Comprehensive input boundary testing (null values, empty collections, maximum values).

- Tests for exception paths and error handling that developers often skip.

- Coverage of complex parameter interactions and branch combinations.
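The boundary categories above translate into tests like the following sketch. `average` is a hypothetical function. The four tests cover a `None` argument, an empty collection, the smallest valid input, and values at the float maximum.

```python
import sys
import unittest
from typing import Optional, Sequence


def average(values: Optional[Sequence[float]]) -> float:
    """Hypothetical function under test: arithmetic mean of a non-empty sequence."""
    if values is None or len(values) == 0:
        raise ValueError("average() needs at least one value")
    n = len(values)
    # Divide before summing so values near the float maximum do not overflow to inf.
    return sum(v / n for v in values)


class TestAverageBoundaries(unittest.TestCase):
    def test_none_is_rejected(self):
        with self.assertRaises(ValueError):
            average(None)

    def test_empty_collection_is_rejected(self):
        with self.assertRaises(ValueError):
            average([])

    def test_single_element(self):
        self.assertEqual(average([7.0]), 7.0)

    def test_maximum_float_values(self):
        big = sys.float_info.max
        self.assertEqual(average([big, big]), big)
```

The last test is the one developers are most likely to skip when writing tests by hand, and it matters. The naive `sum(values) / len(values)` overflows to `inf` for two values at `sys.float_info.max`, which is why this version divides before summing.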

Many organizations report coverage improvements of 15–30% after implementing AI-generated tests, particularly for complex legacy codebases where manual test writing was incomplete.

Better code quality

More thorough testing directly translates to improved quality through:

- Early detection of bugs before they reach production.

- Identification of design flaws through testing difficulties.

- Promotion of cleaner, more modular code that’s easier to test.

- Consistent validation of code against requirements.

According to Microsoft research, projects with comprehensive unit tests experience 40–80% fewer bugs in production than those with minimal testing.

Improved developer velocity

By offloading test creation, developers can focus on core development tasks, leading to:

- Less context switching between implementation and test writing.

- Reduced cognitive load from having to think about both feature logic and test scenarios.

- Faster iteration cycles as tests are generated immediately after code changes.

- More time for creative problem-solving and feature development.

Teams implementing AI test generation typically report 20–30% improvement in development velocity, particularly for feature-rich sprints where testing normally creates bottlenecks.

Standardization

AI-generated tests promote consistency across projects and teams through:

- Uniform testing patterns, regardless of developer experience.

- Consistent naming conventions and test organization.

- Standardized approaches to common testing scenarios.

This standardization makes tests more maintainable and easier for team members to understand, regardless of who originally wrote the code being tested.

Choosing the right test case generation tools

With numerous AI-powered testing tools available, selecting the right solution requires careful consideration of several factors.

Language and framework support

Different tools specialize in different programming environments:

- Diffblue Cover: Specializes in Java applications, particularly those using JUnit.

- CodeScene: Provides language-agnostic analysis with specific optimizations for popular languages.

- Testim: Excels with web applications using JavaScript frameworks.

- Symflower: Offers strong support for Go, Java, and C/C++.

Evaluate whether a tool supports not just your core language but also your specific frameworks and libraries. Some tools perform better with certain patterns (e.g., object-oriented vs. functional) or architectural styles.

Integration capabilities

Consider how seamlessly the tool fits into your existing workflow:

- IDE integration: Tools like Tabnine and GitHub Copilot offer inline test suggestions.

- CI/CD compatibility: Look for tools that integrate with Jenkins, GitHub Actions, or your preferred pipeline.

- Code repository hooks: Some solutions can automatically analyze pull requests.

- Test runner compatibility: Ensure generated tests work with your existing test execution environment.

The most effective tools require minimal changes to your development process while providing maximum value.

Customizability and control

Different projects require different levels of test customization:

- Assertion styles: Can you configure the assertion library or style used?

- Naming conventions: Does the tool follow your preferred test naming patterns?

- Test structure: Can you control how tests are organized and grouped?

- Configuration options: Look for tools that allow fine-tuning of test generation parameters.

For example, Diffblue allows customization of test style and coverage goals, while Symflower offers configuration of assertion depth and mock behavior.

Coverage reporting and analysis

Good tools provide insight into how well your tests actually work:

- Coverage visualization: Graphical representation of tested code paths.

- Gap analysis: Identification of untested or poorly tested areas.

- Quality metrics: Indicators of test strength beyond simple line coverage.

- Trend tracking: Historical data showing testing improvements over time.

Tools like Codacy and SonarQube integrate well with AI test generators to provide comprehensive quality reporting.
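Mutation testing is one widely used “quality metric beyond line coverage.” Small deliberate bugs (mutants) are injected into the code, and a strong suite should fail on each one. The toy sketch below uses hand-written mutants of a hypothetical `is_adult` function. Real tools such as PIT (Java) or mutmut (Python) generate mutants automatically. Both suites execute every line of `is_adult`, so line coverage cannot tell them apart, but their mutation scores differ.

```python
from typing import Callable, Dict


def is_adult(age: int) -> bool:
    """Hypothetical function under test."""
    return age >= 18


# Hand-written mutants: each changes one operator or constant, as a mutation tool would.
MUTANTS: Dict[str, Callable[[int], bool]] = {
    ">= becomes >": lambda age: age > 18,
    ">= becomes <=": lambda age: age <= 18,
    "18 becomes 19": lambda age: age >= 19,
}


def weak_suite(fn) -> bool:
    """Passes if fn is right for two ages far from the boundary."""
    return fn(30) and not fn(5)


def strong_suite(fn) -> bool:
    """Adds the boundary ages 17 and 18."""
    return weak_suite(fn) and not fn(17) and fn(18)


def mutation_score(suite) -> float:
    """Fraction of mutants the suite kills (that is, fails on)."""
    if not suite(is_adult):
        raise ValueError("suite must pass on the original code")
    killed = sum(1 for mutant in MUTANTS.values() if not suite(mutant))
    return killed / len(MUTANTS)
```

Here `mutation_score(weak_suite)` is 1/3 and `mutation_score(strong_suite)` is 1.0. Only the suite that probes ages 17 and 18 catches the off-by-one mutants.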

Cost and licensing

Consider the financial aspects:

- Open-source vs. commercial: Some solutions, like Randoop, offer free alternatives with more limited capabilities.

- Pricing models: Per-developer, per-repo, or enterprise licensing options are available.

- ROI calculations: Compare cost against projected time savings and quality improvements. Tools such as Milestone can help with this calculation.

- Trial periods: Most reputable tools offer evaluation periods to assess their effectiveness.

Tools like Parasoft or Diffblue often provide the most comprehensive solutions for enterprise deployments but at higher price points than lighter-weight alternatives.
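The ROI comparison itself is simple arithmetic. The sketch below nets out review time, because generated tests still need human review. Every input is a placeholder to replace with your own figures. The default of 46 working weeks per year is an assumption, not a benchmark.

```python
def tool_roi(
    seats: int,
    cost_per_seat_per_year: float,
    hours_saved_per_dev_per_week: float,
    review_hours_per_dev_per_week: float,
    loaded_hourly_rate: float,
    working_weeks_per_year: int = 46,  # assumption; adjust for your organization
) -> float:
    """Return first-year ROI as a ratio: (value of net time saved - license cost) / license cost."""
    cost = seats * cost_per_seat_per_year
    if cost <= 0:
        raise ValueError("license cost must be positive")
    # Time saved on writing tests, minus time spent reviewing generated tests
    net_hours = (hours_saved_per_dev_per_week - review_hours_per_dev_per_week) * seats * working_weeks_per_year
    value = net_hours * loaded_hourly_rate
    return (value - cost) / cost


# Hypothetical inputs: 10 seats at $400/year, 2 h saved and 0.5 h review per dev per week, $80/h
print(f"First-year ROI: {tool_roi(10, 400, 2, 0.5, 80):.0%}")  # prints: First-year ROI: 1280%
```

With these placeholder inputs, the value of the net time saved is 1.5 h × 10 × 46 × $80 = $55,200 against a $4,000 license cost. The ROI is therefore (55,200 − 4,000) / 4,000 = 12.8.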

Challenges and considerations

While AI-generated tests offer significant benefits, they also come with limitations and considerations that teams should acknowledge.

Understanding test intent

AI-generated tests may execute perfectly but can sometimes miss the “why” behind testing:

- Generated tests validate what the code does, not necessarily what it should do.

- Business logic validation often requires a human understanding of requirements.

- Some edge cases might be technically valid but not relevant to actual usage.

To address this, many teams use AI generation as a starting point, then review and refine the tests to align with business requirements.

Dependency on high-quality data and models

The effectiveness of AI-generated tests depends heavily on:

- The quality and diversity of code repositories used to train the models.

- Availability of domain-specific examples in the training data.

- Continuous updates to AI models as programming practices evolve.

- Access to sufficient computing resources for sophisticated analysis.

Teams should evaluate the training methodology behind any AI testing tool they consider adopting.

Human oversight remains critical

Perhaps most importantly, AI-generated tests should complement rather than replace human testing expertise:

- Developers should review generated tests for relevance and completeness.

- Some test scenarios still require human creativity to conceive.

- Critical systems may need additional manual validation beyond AI-generated tests.

- The “big picture” of system behavior requires human understanding.

The most successful implementations use AI to handle routine test creation while focusing human effort on complex scenarios and integration testing.

Concluding thoughts

AI-generated unit tests mark a major advancement in software quality assurance, enhancing test coverage while reducing developer workload.

These tools can generate tests with broad coverage in seconds and help standardize testing patterns across teams. When selecting an AI testing tool, consider factors such as language compatibility, workflow integration, and customization options.

While powerful, AI tests work best with human guidance. They can tell you if the code works as written, but you still need humans to ensure it meets real-world needs.