Artificial intelligence has been assisting engineers for decades: optimizing compilers, suggesting code snippets, and flagging anomalies. However, several breakthroughs in recent years (GPT-3 in 2020 and GPT-4 in 2023) have reshaped the engineering landscape.

- Large-context language models, such as GPT-4 Turbo (128k tokens), Claude 3.5 Sonnet (200k tokens), and Gemini 1.5 Pro (up to 1M tokens in preview), can now hold hundreds of files or detailed subsystems in context. This enables coherent multi-step reasoning across much larger scopes than before.

- On-demand GPU clusters and expanded cloud access make it feasible to run large numbers of parallel simulations, bringing autonomous exploration within reach economically.

- Open-source orchestration frameworks, such as LangGraph and AutoGen, enable teams to chain specialized models into agile, goal-seeking swarms with a relatively lightweight setup.

Together, these breakthroughs give rise to Agentic AI in software engineering: software that chooses its own objectives, critiques its output, and adapts on the fly.

What Is Agentic AI?

In simple terms, Agentic AI in software engineering refers to software that decides what to do next without being told each step. It watches the results, learns, and changes course, much like a junior engineer who grows with experience.
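
The loop described here (decide, act, observe, adjust) can be sketched in a few lines. This is a minimal illustration, not a real agent: `run_agent`, `toy_policy`, and the integer state are hypothetical stand-ins. In practice the policy would be a model call and the state a repository or environment.

```python
from dataclasses import dataclass, field
from typing import Callable, List


@dataclass
class AgentRun:
    """What the agent did and whether it reached the goal."""
    steps: List[str] = field(default_factory=list)
    succeeded: bool = False


def run_agent(
    start_state: int,
    goal_met: Callable[[int], bool],
    propose_action: Callable[[int, List[str]], int],
    max_steps: int = 10,
) -> AgentRun:
    """Repeat decide -> act -> observe until the goal is met or the budget runs out."""
    run = AgentRun()
    state = start_state
    for _ in range(max_steps):
        if goal_met(state):
            break
        delta = propose_action(state, run.steps)  # decide (a model call in practice)
        state += delta                            # act
        run.steps.append(f"applied {delta:+d}, state is now {state}")  # observe
    run.succeeded = goal_met(state)
    return run


def toy_policy(state: int, history: List[str]) -> int:
    """Hypothetical stand-in for a model: big steps when far from 7, small when close."""
    return 3 if 7 - state >= 3 else 1


result = run_agent(start_state=0, goal_met=lambda s: s == 7, propose_action=toy_policy)
```

The `max_steps` budget is the design choice worth noting: software that picks its own next step also needs a hard stop.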

Why is this suddenly possible?

- Modern large-context language models, such as GPT, Claude, and Gemini, can hold entire codebases or factory layouts in memory, allowing them to reason across hundreds of files at once.

- Cloud GPU prices have fallen enough to run thousands of parallel simulations without blowing the budget.

- Open-source orchestration libraries (LangGraph, AutoGen, CrewAI) make it easy to chain specialized bots into a goal-seeking swarm.

Real-world examples

- Code agent: In experimental setups, drafts, tests, and sometimes merges pull requests with minimal oversight, posting a summary in Slack by morning.

- Quality bot: Spins up staging environments, runs regressions, files defects, and even suggests the hot-fix. This is an early glimpse into how agentic AI in quality engineering can take over repetitive validation tasks.

- Data-pipeline guardian: Detects a failed ETL job, rewrites the SQL to handle a new schema, and backfills missed records. This represents a growing role for AI agents in data engineering.

- Design optimizer: Runs thousands of CAD simulations to shave grams off a drone frame without weakening it.

- SRE sentinel: Reroutes traffic, scales pods, and triggers rollbacks the moment latency spikes.

How Agentic AI Impacts the Engineering Landscape

Agentic AI applications in software engineering are shifting engineering from command-driven efficiency to goal-driven autonomy. You can almost feel the changes everywhere, from agents that write, test, and merge code while you sleep to data centers that fix themselves before an on-call alert even fires.

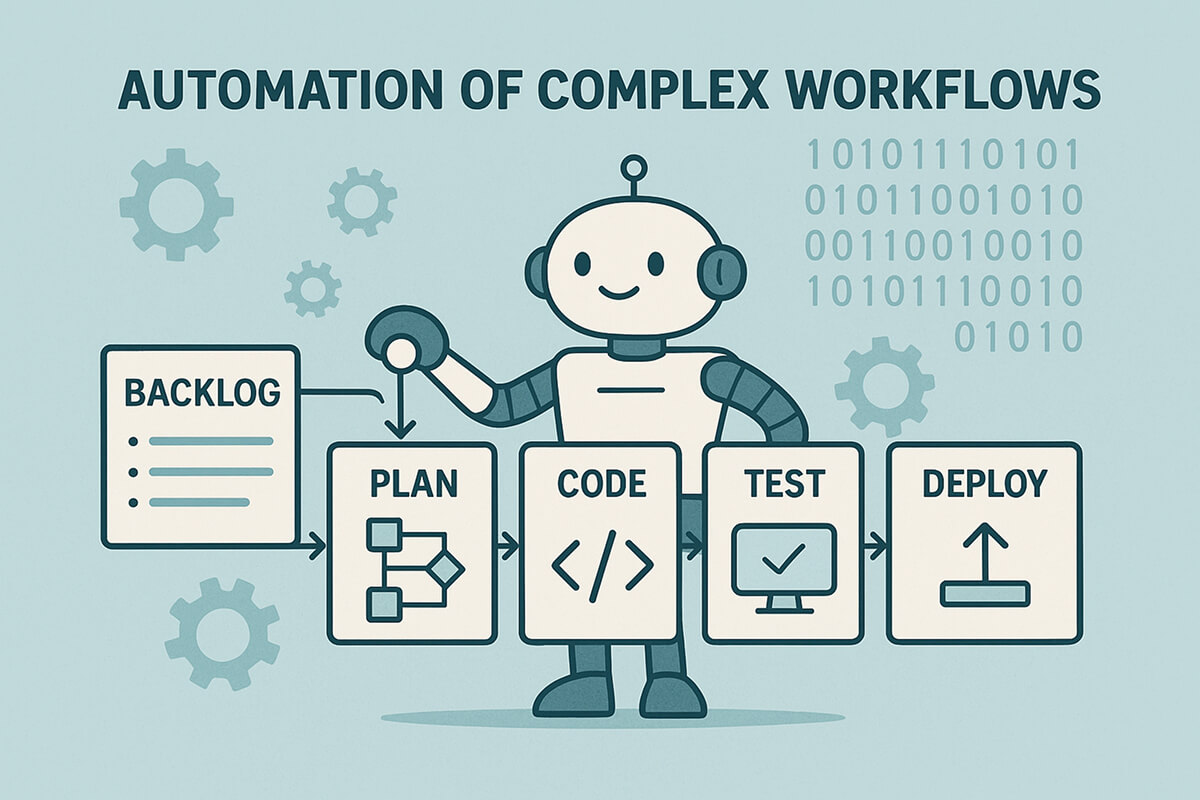

1. Automation of Complex Workflows

The first generation of developer bots focused mostly on narrow tasks such as linting. Today’s agentic systems can run the whole software-delivery pipeline, from selecting backlog items to shipping code, without needing a human at every step:

- Faster feedback loops: Merging a pull request can trigger build, integration, and test stages instantly. This helps identify bugs within minutes, allowing teams to iterate while context is fresh.

- Repeatable quality gates: CI/CD pipelines execute identical security scans, lint rules, and performance checks on every commit. With agentic AI in quality engineering, these gates can expand beyond static checks into dynamic test generation, fuzzing, and defect triage.

- Higher-value engineering time: Developers spend less effort on environment setup, dependency juggling, and manual deploys, and more on architecture decisions, code review, and optimizing critical paths.

- Simplified onboarding: Fewer hidden steps mean faster ramp-up and lower cognitive load for new developers.

2. Enhanced Problem-Solving Capabilities

AI agents that can solve open-ended problems with little guidance are reshaping how engineers design, build, and run software systems.

- Fewer hard rules, more learning loops: Instead of encoding every edge case, teams feed agents logs, metrics, and domain docs. The model derives heuristics on the fly, cutting down brittle if-else logic.

- Continuous optimization at scale: Agents explore parameter spaces faster than grid or random searches. In backend services, this yields tighter latency budgets, lower cloud cost, and auto-tuned caching layers.

- Real-time decision loops: In production, the same agent can watch telemetry, predict drift, and trigger canary rollbacks or resource reallocation within seconds, well before a human could respond.

- New skill mix: Engineers now curate datasets, define reward functions, and audit decisions, blending ML-ops with classic software craft.

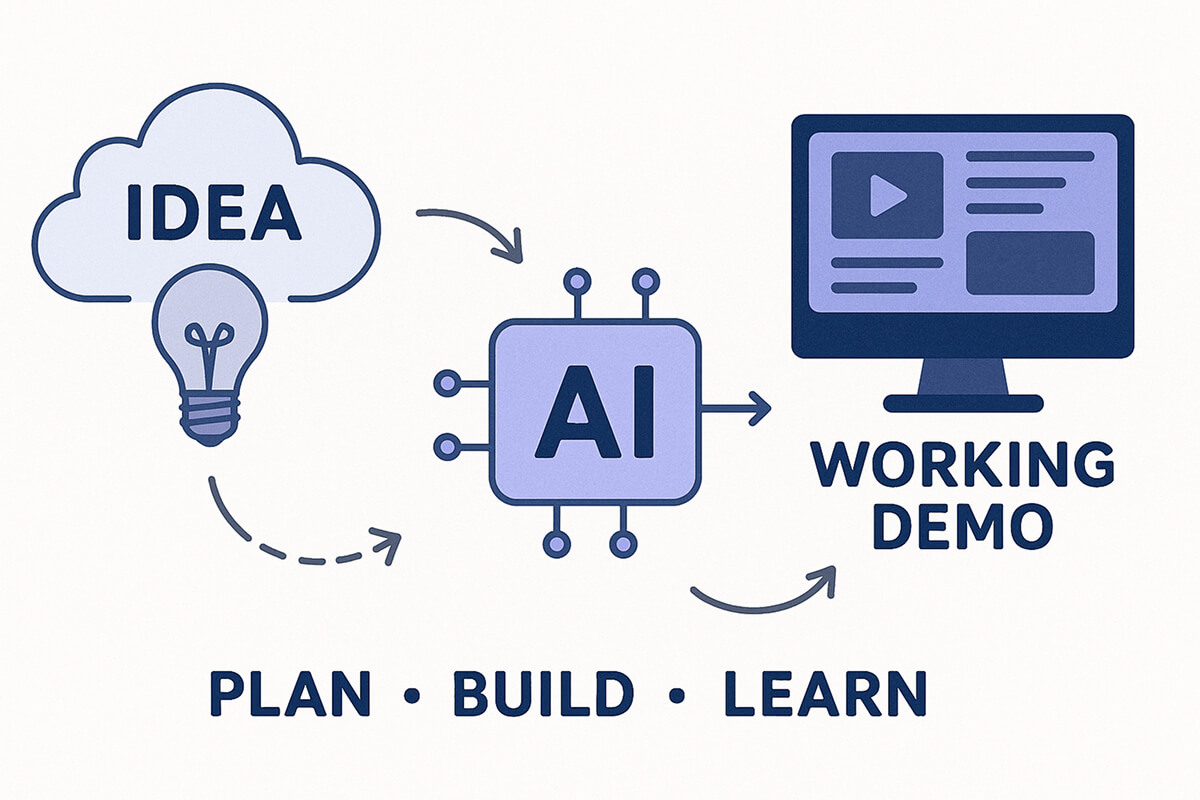

3. Acceleration of Innovation

AI-powered tooling shortens the distance between a rough idea and a working demo. It often uncovers design paths that humans would overlook. This speed-up changes how software teams plan, build, and learn.

- Prototype in a morning, not a month: Large-language-model copilots scaffold REST services, seed test data, and spin up container configs on command. Engineers can run an end-to-end spike by lunch and decide early if the concept warrants a full sprint.

- Cheap exploration of the “weird” branches: Instead of engineers hand-picking a few safe design tweaks, an AI agent can create hundreds of wild code or config variations in a throw-away test environment.

- Auto-generated benchmarks guide pivots: Every experiment the agent runs is measured, and the numbers auto-publish to a shared dashboard. When an agent discovers an unexpected optimization, the team can quickly replay, validate, and integrate it into the main code.

- Culture of rapid hypotheses: Because the cost of failure drops, product managers and architects feel freer to propose bold features. Sprint plans shift from “build X” to “test hypotheses A-D and double down on the winner.”

4. Collaboration Between Engineers and AI Agents

AI agents now handle much of the hands-on coding and testing. This shift is giving rise to “AI-augmented engineering teams” that blend human judgment with machine speed and efficiency.

- From coder to coach: Engineers frame the problem, set acceptance tests, and review agent-generated pull requests rather than writing every line. Code reviews focus on architecture, security, and edge-case coverage.

- Rapid iteration loops: An agent can refactor, run unit tests, and push a staging build in minutes. Developers use the saved time to shape product roadmaps, define service-level objectives (SLOs), and design cross-service contracts.

- Shared CI/CD pipelines: Pipelines now include steps where agents suggest fixes, auto-tune configs, or roll back faulty deploys. Engineers supervise these steps and add guardrails, rate limits, policy checks, and explainability hooks to ensure accountability and transparency.

- New skill stack: Teams now need prompt engineering, data curation, and model-drift monitoring, alongside classic Git, API, and infra skills.

5. New Engineering Roles and Skills

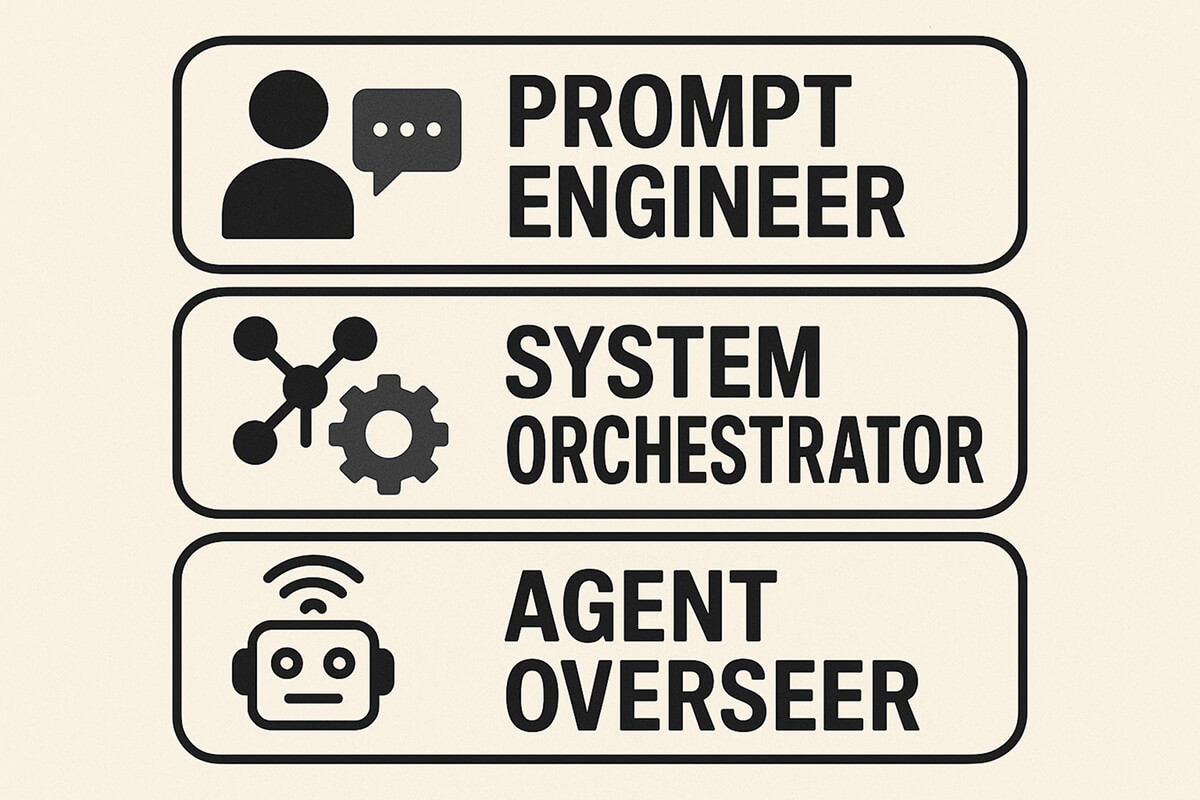

AI-first tooling is spawning job titles that have only emerged in the last couple of years, and it is reshaping the skills every coder needs.

What’s changing

- Prompt engineer: Treats model inputs like a new type of API. They design structured prompts, test edge cases, and version them in Git, just as we once tracked SQL migrations.

- System orchestrator: Glues together many small agents. They write workflow graphs, manage retries, and tune latency budgets so that chatbots, vector stores, and legacy microservices function as a single, coherent system.

- Agent overseer (AIOps): Watches model behavior in production. Dashboards track drift, cost, bias, and token spikes; overseers set guardrails and can hot-swap a faulty policy without a full redeploy.
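
As one way to picture prompts treated like a versioned API, the sketch below keeps a template, a version string, and edge-case tests in a single file that could live in Git. The names `REVIEW_PROMPT`, `PROMPT_VERSION`, and `render_review_prompt` are hypothetical, and the prompt wording is only an example.

```python
from string import Template

# The version travels with the prompt so logs can say which wording produced an output.
PROMPT_VERSION = "1.2.0"

REVIEW_PROMPT = Template(
    "You are reviewing a pull request.\n"
    "Repository: $repo\n"
    "Diff:\n$diff\n"
    'Reply with JSON containing the keys "verdict" and "reasons".'
)


def render_review_prompt(repo: str, diff: str, max_diff_chars: int = 4000) -> str:
    """Fill the template, rejecting an empty repo name and clipping oversized diffs."""
    if not repo.strip():
        raise ValueError("repo must not be empty")
    return REVIEW_PROMPT.substitute(repo=repo, diff=diff[:max_diff_chars])


def test_dollar_signs_in_diff_survive() -> None:
    # Edge case: shell variables inside a diff must not be treated as placeholders.
    assert "echo $HOME" in render_review_prompt("billing", "+ echo $HOME")


def test_long_diff_is_clipped() -> None:
    prompt = render_review_prompt("billing", "x" * 10, max_diff_chars=3)
    assert "Diff:\nxxx\n" in prompt
```

`string.Template` is used here instead of `str.format` so that the braces in JSON instructions and code diffs need no escaping.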

Skills that rise in value

- AI literacy: Understanding model limits, vector search, and fine-tuning beats memorizing yet another web framework.

- Observability & governance: Engineers must add tracing for prompts, capture model outputs for audit, and enforce data-retention rules.

- Ethics and policy: Knowing privacy law, fairness principles, and open-source licenses becomes part of the design review, just like threat modeling is for security.

- Cross-domain fluency: Teams mix software, data science, UX, and legal expertise. Clear communication and shared vocabulary are now core competencies.

Challenges and Risks

Autonomous AI systems give teams new power, but they also introduce failure modes that classic software did not have to face.

Reliability ≠ determinism

- Large models can return different answers to the same prompt.

- Engineers must wrap them with retry logic, confidence thresholds, and circuit breakers, treating each call like an “eventually correct” microservice rather than a pure function.
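
A minimal sketch of such a wrapper follows, combining retries, a confidence threshold, and a simple circuit breaker. It assumes a hypothetical `call_model` function that returns an `(answer, confidence)` pair. Real model APIs do not necessarily expose a confidence score, so that value might come from a separate validator or scoring step.

```python
from typing import Callable, Tuple


class LowConfidenceError(RuntimeError):
    """No attempt cleared the confidence threshold."""


class CircuitOpenError(RuntimeError):
    """Too many consecutive failures; callers should use a fallback."""


class GuardedModel:
    def __init__(
        self,
        call_model: Callable[[str], Tuple[str, float]],  # hypothetical signature
        min_confidence: float = 0.8,
        max_retries: int = 3,
        failure_limit: int = 2,
    ) -> None:
        self.call_model = call_model
        self.min_confidence = min_confidence
        self.max_retries = max_retries
        self.failure_limit = failure_limit
        self.consecutive_failures = 0

    def ask(self, prompt: str) -> str:
        # Circuit breaker: stop calling the model after repeated failed requests.
        if self.consecutive_failures >= self.failure_limit:
            raise CircuitOpenError("circuit open: skip the model and use the fallback")
        # Retry: the same prompt may yield a better answer on a later attempt.
        for _ in range(self.max_retries):
            answer, confidence = self.call_model(prompt)
            if confidence >= self.min_confidence:
                self.consecutive_failures = 0  # a good answer resets the breaker
                return answer
        self.consecutive_failures += 1
        raise LowConfidenceError(f"no answer reached confidence {self.min_confidence}")


# Usage with a scripted stand-in for a model that improves on the third try.
replies = iter([("maybe", 0.3), ("probably", 0.6), ("yes", 0.95)])
guarded = GuardedModel(lambda prompt: next(replies))
answer = guarded.ask("Is the build green?")
```

A production breaker would typically also close again after a cool-down period and add back-off between retries; both are left out here to keep the sketch short.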

Explain-before-trust

- Logs now need to capture prompts, model versions, and intermediate reasoning so reviewers can trace why an agent patched code or scaled replicas.

- This “decision lineage” becomes part of the incident post-mortem.
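
One possible shape for a decision-lineage record is sketched below as a JSON log line. The field names, the `DecisionRecord` class, and the sample values (including the model version string) are illustrative assumptions rather than an established schema.

```python
import hashlib
import json
from dataclasses import asdict, dataclass, field
from datetime import datetime, timezone
from typing import List


@dataclass
class DecisionRecord:
    agent: str
    model_version: str
    prompt: str
    reasoning_steps: List[str]
    action: str
    timestamp: str = field(
        default_factory=lambda: datetime.now(timezone.utc).isoformat()
    )

    def to_log_line(self) -> str:
        """Serialize to one JSON line, adding a prompt hash for quick matching."""
        payload = asdict(self)
        payload["prompt_sha256"] = hashlib.sha256(
            self.prompt.encode("utf-8")
        ).hexdigest()
        return json.dumps(payload, sort_keys=True)


record = DecisionRecord(
    agent="sre-sentinel",
    model_version="example-model-v1",  # hypothetical identifier
    prompt="p95 latency is 900 ms on checkout; what should we do?",
    reasoning_steps=["latency above SLO", "last deploy 4 minutes ago"],
    action="rollback checkout to previous release",
)
log_line = record.to_log_line()
```

Keeping the record as a single JSON line lets existing log tooling index it, and a post-mortem can then filter by `agent`, `model_version`, or `prompt_sha256`.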

Security by design

- Prompt injection, jailbreaks, and data exfiltration through generated text are real threats.

- Pipelines should sanitize inputs, scope permissions per task, and scan outputs for policy violations just as we lint code for secrets today.
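
Two of these controls, per-task permission scoping and output scanning, can be sketched as follows. The task names, tool names, and the two patterns are illustrative assumptions. A real pipeline would rely on a maintained secret scanner with a far larger rule set.

```python
import re
from typing import Dict, List, Set

# Illustrative patterns only: an AWS-style access key ID and a PEM private-key header.
SECRET_PATTERNS: Dict[str, "re.Pattern[str]"] = {
    "aws_access_key_id": re.compile(r"\bAKIA[0-9A-Z]{16}\b"),
    "private_key_block": re.compile(r"-----BEGIN (?:[A-Z]+ )?PRIVATE KEY-----"),
}

# Hypothetical task-to-tool scopes: each task may use only the tools listed for it.
TASK_SCOPES: Dict[str, Set[str]] = {
    "triage_defect": {"read_logs", "file_ticket"},
    "hotfix": {"read_logs", "open_pull_request"},
}


def scan_output(text: str) -> List[str]:
    """Return the names of every secret pattern found in model output."""
    return sorted(name for name, pat in SECRET_PATTERNS.items() if pat.search(text))


def is_permitted(task: str, tool: str) -> bool:
    """Allow a tool call only if the task's scope lists it; unknown tasks get nothing."""
    return tool in TASK_SCOPES.get(task, set())


findings = scan_output("export AWS_KEY=AKIAIOSFODNN7EXAMPLE")
```

Denying by default for unknown tasks means a newly added agent has no permissions until someone grants them explicitly.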

Safety and ethics gates

- Agents may recommend options that clash with privacy laws, bias rules, or user consent.

- Teams embed policy checks and human sign-off steps, much like change control boards in regulated industries.
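
A human sign-off step can be as small as a gate function in the pipeline. The sketch below assumes two hypothetical risk flags on a `ProposedChange`. Which flags matter, and who may approve, would depend on the team's own policies.

```python
from dataclasses import dataclass
from typing import Optional, Tuple


@dataclass(frozen=True)
class ProposedChange:
    summary: str
    touches_personal_data: bool
    affects_production: bool
    approved_by: Optional[str] = None  # name of the human reviewer, if any


def gate(change: ProposedChange) -> Tuple[bool, str]:
    """Let low-risk changes through; require a named human for risky ones."""
    needs_human = change.touches_personal_data or change.affects_production
    if needs_human and not change.approved_by:
        return False, "blocked: human sign-off required"
    if needs_human:
        return True, f"allowed: approved by {change.approved_by}"
    return True, "allowed: low-risk change"


decision = gate(ProposedChange("add email column to export", True, False))
```

Returning the reason alongside the verdict gives the audit trail something to record for each blocked or approved change.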

Dependency risk

- Over-automation can erode human skills and situational awareness.

- Run regular “agent-down” drills, keep manual run-books updated, and ensure critical paths have a fallback mode that skips the model entirely.
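
A fallback mode can be sketched as a decision function that uses the model's suggestion only when one is available and sane, and otherwise applies a fixed run-book rule. The scaling rule, the limits, and the `ask_model` callable are hypothetical.

```python
from typing import Callable, Optional, Tuple


def replicas_from_runbook(current: int, p95_ms: float, target_ms: float = 200.0) -> int:
    """Manual run-book rule: add one replica whenever latency exceeds the target."""
    return current + 1 if p95_ms > target_ms else current


def choose_replicas(
    current: int,
    p95_ms: float,
    ask_model: Optional[Callable[[int, float], int]] = None,
    max_replicas: int = 20,
) -> Tuple[int, str]:
    """Return (replica count, source); the run-book path never touches the model."""
    if ask_model is not None:
        try:
            proposed = ask_model(current, p95_ms)
            if 1 <= proposed <= max_replicas:
                return proposed, "model"
        except Exception:
            pass  # model unavailable or failing: fall through to the run-book
    return min(replicas_from_runbook(current, p95_ms), max_replicas), "runbook"


def broken_model(current: int, p95_ms: float) -> int:
    raise TimeoutError("model endpoint unreachable")


outcome = choose_replicas(current=4, p95_ms=350.0, ask_model=broken_model)
```

Passing `ask_model=None` is the "agent-down" drill in miniature: the same call site keeps working with the model switched off.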

FAQ - Agentic AI in Engineering

1. What challenges can companies face when adopting agentic AI?

If the data that feeds the AI is messy, its results will be messy too. Teams also need new skills, such as prompt writing and model monitoring, which require dedicated training time. Extra checks for privacy, bias, and safety can slow work until good routines are in place. Even then, people still need a clear backup plan for the moments when the AI gets things wrong.

2. What infrastructure is needed to integrate agentic AI into engineering teams?

First, you need a stable platform to host and version the large models, much like a code repo. A shared prompt store keeps everyone using trusted instructions and safety rules. Isolated test servers allow the AI to run risky experiments without affecting production. Good monitoring and AI-aware CI/CD steps catch slow, costly, or unsafe outputs before they go live.

3. How does agentic AI for prototyping differ from enterprise-level systems?

In prototyping, the goal is speed: small data, throw-away code, and “fail fast” attitudes that let ideas ship in hours.

Enterprise systems handle real users, so they add strict uptime targets, audit logs, and rollback plans. Every change is slower and more controlled because security, cost, and reliability now matter as much as speed. In short, prototypes show what’s possible; enterprise setups prove it’s safe.

4. How can engineering leaders measure the success of agentic AI adoption?

Look first at velocity: new features should reach production faster. Defect rates and outage minutes should fall because the AI catches problems early. Cloud costs per feature often drop once the AI handles tuning and scaling. Finally, adoption is key: if more teams choose the AI for daily tasks, it’s delivering real value.

Conclusion

By combining long-context language models, cheap cloud GPUs, and open-source orchestration, software agents can now write code, tune systems, and run experiments with minimal human input.

This gives engineers more time for planning and big-picture thinking, and it creates new jobs in prompt writing and AI oversight. However, because agents can make unpredictable or unsafe moves, teams must feed them clean data, add strong safety checks, and keep backup plans ready. Teams that find the right mix of speed and caution will derive the most value from this new approach to software development.