Using AI is no longer optional – it’s core to every role and every level. – Julia Liuson, Developer Division President at Microsoft

Large Language Models (LLMs) are rapidly becoming a part of mainstream engineering practice. This surge in adoption is not surprising. Modern LLMs in software engineering go beyond simple code autocompletion to reshape the way engineers design, develop, test, and maintain software.

Considering how LLMs help engineering teams focus more on higher-level problem-solving rather than on boilerplate tasks, the question is no longer whether to use LLMs, but how to effectively integrate them into the development lifecycle.

Why LLMs Matter in Software Engineering

By early 2025, GitHub Copilot had more than 15 million users, roughly a fourfold increase year over year. GitHub has reported that Copilot writes nearly half of the code in files where it is enabled, and that developers in its controlled study completed a coding task about 55% faster.

So, why are software developers adopting LLMs at this rate?

- Productivity gains: LLMs can automate repetitive coding tasks, boilerplate generation, and documentation.

- Code quality improvement: They help increase test coverage and flag violations of coding best practices.

- Knowledge accessibility: They can answer domain- or context-specific queries, allowing junior developers and non-technical personnel to easily understand the application state.

- Problem-solving: They help uncover edge cases, suggest alternative designs, and identify potential pitfalls during early development.

- Rapid prototyping: They can quickly generate prototypes, letting teams compare multiple approaches and validate design ideas without spending weeks of effort.

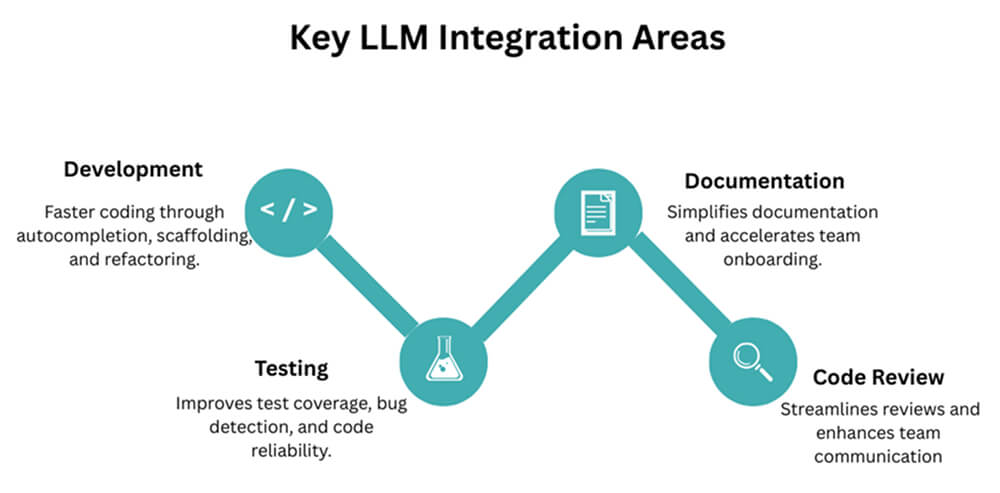

Key Integration Areas for LLMs

Within the software development life cycle, there are several key areas where you can practically embed LLMs.

1. Development

- Usage: Accelerated coding with intelligent autocompletions, scaffolding, and refactoring.

- Popular tools:

2. Debugging and Testing

- Usage: Improved code coverage with automated unit test generation and bug detection capabilities.

- Popular tools:

- Replit AI (test suggestion and robust debugging)

- Sourcegraph Cody (AI-powered unit testing)

- Claude 3 Sonnet (refactoring and debugging)

- Katalon Studio (AI-powered test co-authoring with GPT)

3. Collaboration and Code Review

- Usage: Enhancing pull requests, summarizing commits, identifying antipatterns, and standardizing the code review process.

- Popular tools:

- GitHub Copilot PR Summaries

- Gemini Code Assist (integrated into GitHub for AI-powered PR reviews)

- Greptile (context-aware review comments on PRs)

4. Knowledge Management and Onboarding

- Usage: Faster knowledge transfer (KT) to stakeholders and newly joined developers through auto-generated documentation, architecture overviews, and context-aware “ask-the-codebase” chat interactions.

- Popular tools:

- Claude 3 Opus (deep reasoning for system explanations)

- GPT-4.1/5, Gemini

Practical Strategies for Team Integration

Adopting a tool arbitrarily isn’t enough. You need a well-planned strategy to successfully integrate LLMs into software engineering teams and workflows.


Embedding LLMs into Daily Development Workflows

The most effective way to benefit from LLMs is to integrate them where developers already spend their time.

- Everyday coding: IDE plugins like Copilot, CodeWhisperer (now part of Amazon Q Developer), and Tabnine can handle boilerplate and other repetitive everyday tasks.

- More custom needs: API-driven integrations (for example, design-to-code automations) allow LLMs to generate project-specific code snippets or documentation on demand.

- Documentation: Tools like Cursor (IDE) go beyond code generation and also produce documentation and inline code explanations that make the code easier to understand.

- Collaboration: ChatOps bots can be embedded into daily communication channels like MS Teams and Slack for quick Q&As. Also, they help teams deploy, monitor, and manage incidents directly from the chat.
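As a rough illustration of the API-driven integration mentioned above, the sketch below builds a documentation prompt and passes it to a pluggable completion function. Everything here is an assumption made for illustration: `CompleteFn`, `build_doc_prompt`, `generate_docstring`, and `fake_complete` are hypothetical names, and no real vendor SDK is called. In practice, `complete` would wrap whichever LLM client your team has approved.

```python
from typing import Callable

# Hypothetical interface: any function that takes a prompt and returns model text.
CompleteFn = Callable[[str], str]

PROMPT_TEMPLATE = (
    "You are a documentation assistant.\n"
    "Write a concise docstring for the following {language} function.\n"
    "Return only the docstring text.\n\n"
    "{source}\n"
)


def build_doc_prompt(source: str, language: str = "Python") -> str:
    """Fill the template with the code snippet to be documented."""
    return PROMPT_TEMPLATE.format(language=language, source=source.strip())


def generate_docstring(source: str, complete: CompleteFn, language: str = "Python") -> str:
    """Send the prompt through the injected client and tidy the reply."""
    prompt = build_doc_prompt(source, language)
    return complete(prompt).strip()


def fake_complete(prompt: str) -> str:
    """Stand-in for a real LLM client so the sketch runs offline."""
    return "  Add two numbers and return the sum.  "


if __name__ == "__main__":
    snippet = "def add(a, b):\n    return a + b"
    print(generate_docstring(snippet, fake_complete))
```

Keeping the model call behind a plain function makes it easier to swap providers or to test the integration without network access.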

LLMs in the CI/CD Pipeline

To keep code quality and delivery speed consistent as code moves from commit to production, you can integrate LLMs into the CI/CD pipeline.

- Test automation: Once a developer commits code, LLM-based tools can generate test cases to broaden code coverage. This way, bugs are caught early, before they reach production.

- Static analysis: Tools like Sourcegraph Cody, Gemini Code Assist, and Claude Sonnet 4 provide AI-driven linting, style enforcement, and bug-detection. They can also be used to detect vulnerabilities and antipatterns.

- Release documentation: LLMs can generate concise, human-readable release notes and comprehensive comments from code changes, commit history, or Jira tickets.

- Continuous monitoring: LLM-backed dedicated tools can be integrated to monitor the codebase, flag anomalies, or suggest fixes to reduce production risks.
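The release documentation step above can be sketched as a small pre-processing stage that runs in the pipeline before any model call. This is a minimal sketch, assuming commit messages loosely follow a `type: subject` convention. The function names `group_commits` and `build_release_notes_prompt` are hypothetical, and the resulting prompt would be sent to whichever LLM your pipeline uses.

```python
from collections import defaultdict


def group_commits(messages):
    """Group commit subjects by their 'type:' prefix (assumed convention)."""
    groups = defaultdict(list)
    for msg in messages:
        prefix, sep, subject = msg.partition(":")
        if sep and prefix.strip().isalpha():
            groups[prefix.strip().lower()].append(subject.strip())
        else:
            # No recognizable prefix: keep the whole message under "other".
            groups["other"].append(msg.strip())
    return dict(groups)


def build_release_notes_prompt(version, messages):
    """Build a prompt asking the model to summarize only the listed changes."""
    groups = group_commits(messages)
    lines = [
        f"Write concise, human-readable release notes for version {version}.",
        "Use only the changes listed below; do not invent features.",
        "",
    ]
    for kind in sorted(groups):
        lines.append(f"[{kind}]")
        lines.extend(f"- {subject}" for subject in groups[kind])
    return "\n".join(lines)


if __name__ == "__main__":
    commits = ["feat: add CSV export", "fix: handle empty input", "Bump version"]
    print(build_release_notes_prompt("1.4.0", commits))
```

Grouping the commits deterministically first keeps the prompt short and gives reviewers something concrete to compare the generated notes against.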

Enhancing Code Review and Pair Programming

You can involve LLMs at a deeper level to make reviews and collaborative coding faster, more consistent, and less error-prone.

- Pair programming: Tools like Cursor and Replit can become a developer’s AI teammate, offering context-aware suggestions, debugging help, and quick refactoring.

- Review assistance: Immediately following development, LLMs can highlight obvious issues such as overlooked edge cases, mismatched naming conventions, and best practice violations. This way, human reviewers can spend more time on mission-critical code logic and design.

- Standardized reviews: Having prompt-based checklists ensures that every code change is checked against the same set of criteria. It brings more consistency into the workflow regardless of the reviewer.
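A prompt-based checklist like the one described above might look like the following sketch. The checklist items and the `build_review_prompt` helper are illustrative assumptions, not a standard. The point is that every diff is rendered into the same prompt structure regardless of who requests the review.

```python
from typing import Sequence

# Hypothetical team checklist; adapt the items to your own review standards.
REVIEW_CHECKLIST = (
    "Are edge cases (empty input, null values, boundaries) handled?",
    "Do names follow the project's naming conventions?",
    "Is new behavior covered by tests?",
    "Are errors handled rather than silently ignored?",
)


def build_review_prompt(diff: str, checklist: Sequence[str] = REVIEW_CHECKLIST) -> str:
    """Render a diff and a numbered checklist into one review prompt."""
    numbered = "\n".join(f"{i}. {item}" for i, item in enumerate(checklist, start=1))
    return (
        "Review the diff below against every checklist item.\n"
        "For each item, answer PASS, FAIL, or N/A with a one-line reason.\n\n"
        f"Checklist:\n{numbered}\n\n"
        f"Diff:\n{diff.strip()}\n"
    )


if __name__ == "__main__":
    print(build_review_prompt("+ return a / b"))
```

Asking for a fixed PASS/FAIL/N/A answer per item makes the model's output easier to scan and to compare across pull requests.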

Knowledge Management & Onboarding

One major pain point for development teams is keeping documentation up to date as development progresses. Current documentation is necessary both for tracking how the system evolves and for making new developer onboarding smoother. AI in engineering workflows can address both of these issues.

- Auto-synced documentation: LLM-based documentation tools like Mintlify and Swimm automatically generate or update documents from repos, PRs, or commits, which reduces stale docs.

- Conversational interfaces: Tools like CodeBuddy (VS Code, JetBrains) and Cursor (IDE) come with chat interfaces that engineers can use to ask questions about the system in natural language, saving hours of digging through files.

- Architecture overviews: The high-level diagrams and summaries of complex systems generated through LLMs can provide newly joined team members with the contextual knowledge needed to get started.

Security, Privacy, and Compliance Guardrails

Security is an integral concern that you cannot overlook when using AI software development tools.

- Data protection: Sensitive tokens, credentials, and PII (Personally Identifiable Information) need to be redacted before the code is sent to an LLM.

- Controlled environments: Self-host models (using tools like Ollama) to keep proprietary code more secure.

- Safe-usage policies: As a team, you can enforce a clear set of rules on what engineers can and cannot paste into prompts to reduce accidental data exposures.

- Compliance: LLMs can also be configured to scan for license conflicts, insecure dependencies, and known vulnerabilities, adding another layer of governance.
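The data protection guardrail above can be approximated with a redaction pass that runs before any text leaves the developer's machine. The sketch below is deliberately minimal and its three regular expressions are assumptions chosen for illustration: the documented `AKIA` prefix of AWS access key IDs, a simple email pattern, and quoted values assigned to names such as `password` or `token`. A production setup would normally rely on a dedicated secret scanner with a much broader rule set.

```python
import re

# Illustrative rules only: (compiled pattern, replacement) pairs.
REDACTION_RULES = [
    # AWS access key IDs start with "AKIA" followed by 16 uppercase letters or digits.
    (re.compile(r"\bAKIA[0-9A-Z]{16}\b"), "<REDACTED_AWS_KEY>"),
    # Simple email address pattern (treated here as PII).
    (re.compile(r"\b[A-Za-z0-9._%+-]+@[A-Za-z0-9.-]+\.[A-Za-z]{2,}\b"), "<REDACTED_EMAIL>"),
    # Quoted values assigned to secret-looking names, e.g. password = "...".
    (
        re.compile(r"\b(password|secret|token|api_key)(\s*[:=]\s*)(['\"])[^'\"]+\3", re.IGNORECASE),
        r"\1\2\3<REDACTED>\3",
    ),
]


def redact(text):
    """Return the redacted text and the number of replacements made."""
    total = 0
    for pattern, replacement in REDACTION_RULES:
        text, count = pattern.subn(replacement, text)
        total += count
    return text, total


if __name__ == "__main__":
    sample = (
        "aws_id = AKIAIOSFODNN7EXAMPLE\n"
        'password = "hunter2"\n'
        "owner: alice@example.com"
    )
    cleaned, replaced = redact(sample)
    print(cleaned)
    print(f"{replaced} value(s) redacted")
```

Returning the replacement count lets a pre-send hook log or block prompts that contained sensitive values, which supports the safe-usage policies described above.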

Scaling Across the Organization

Once pilot adoption of LLMs is successful, it needs to be responsibly scaled across the organization.

- Reusable prompts: For common tasks, having shared prompt libraries and templates within teams can prevent developers from reinventing the wheel.

- Fine-tuning: Fine-tuning models on your codebase or industry domain takes more effort and resources, but makes outputs more context-aware.

- Role-specific guides: Create “LLM playbooks” tailored for the use of different engineering roles within your team, such as backend, QA, and DevOps, to promote best practices.

- Metrics: Keep track of LLM usage patterns, developer satisfaction, and productivity gains.
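A shared prompt library, as suggested in the first bullet above, can start as a small versioned module that teams import instead of rewriting prompts by hand. The sketch below uses Python's standard `string.Template`. The template names and wording in `PROMPT_LIBRARY` are hypothetical examples.

```python
from string import Template

# Hypothetical shared templates; in practice these would live in a reviewed repo.
PROMPT_LIBRARY = {
    "unit_tests": Template(
        "Write $framework unit tests for the following $language code. "
        "Cover edge cases.\n\n$code"
    ),
    "explain": Template(
        "Explain what the following $language code does for a new team member.\n\n$code"
    ),
}


def render_prompt(name, **fields):
    """Look up a shared template and fill in its placeholders.

    Raises KeyError if the template name is unknown or a placeholder is missing.
    """
    if name not in PROMPT_LIBRARY:
        raise KeyError(f"Unknown prompt template: {name!r}")
    return PROMPT_LIBRARY[name].substitute(**fields)


if __name__ == "__main__":
    print(render_prompt("explain", language="Go", code="func main() {}"))
```

Using `substitute` rather than `safe_substitute` is a deliberate choice here: a missing field fails loudly instead of sending a half-filled prompt to the model.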

FAQs

1. How can teams evaluate which LLM tools truly fit their workflow?

Start small with pilot projects. Test various tools to determine how well they integrate with existing IDEs and CI/CD pipelines. Track metrics like task completion time, code quality, developer satisfaction, and ease of use against the same use cases.

2. What are the security concerns of using LLMs in development pipelines?

Data leakage, insecure third-party integrations, secret exposure, and prompt injection attacks can cause significant damage. To mitigate these risks, redact sensitive data, define strict usage policies, and use enterprise-secured models.

3. How do LLMs impact code review and QA processes?

Depending on the model, they act as “assistant code reviewers”, quickly flagging syntax and style issues, untested code, antipatterns, or missing documentation. They can also generate additional unit tests, uncover edge cases, and improve overall coverage without slowing down releases.

4. In what scenarios should a human override LLM-generated code?

LLMs can accelerate development and improve code quality, but humans remain accountable for correctness and risk trade-offs. Human judgment should take precedence in security-critical modules, compliance-heavy workflows, and novel architectural decisions.

5. How should teams measure the ROI of LLM integration over time?

Continuously monitor velocity (faster merges, reduced cycle time), quality (lower bug density), and satisfaction (developer feedback). Compare these before-and-after metrics across team projects to see where LLM adoption delivers tangible business impact.
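The before-and-after comparison described above comes down to simple percent-change arithmetic per metric. The sketch below is one way to express it. The metric names and all numbers are made up for illustration and are not measurements from any real team.

```python
def percent_change(before, after):
    """Relative change from the baseline, in percent."""
    if before == 0:
        raise ValueError("baseline must be non-zero")
    return (after - before) / before * 100.0


def compare_metrics(before, after):
    """Percent change for every metric present in both snapshots."""
    return {
        name: round(percent_change(before[name], after[name]), 1)
        for name in before
        if name in after
    }


if __name__ == "__main__":
    # Hypothetical snapshots taken before and after an LLM pilot.
    baseline = {"cycle_time_hours": 40.0, "bugs_per_kloc": 2.0}
    pilot = {"cycle_time_hours": 30.0, "bugs_per_kloc": 1.5}
    for metric, change in compare_metrics(baseline, pilot).items():
        print(f"{metric}: {change:+.1f}%")
```

For metrics such as cycle time and bug density, a negative change is the desired direction. Satisfaction scores would be read the other way around.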

Concluding Thoughts

There is no one-size-fits-all strategy for integrating LLMs into software engineering. Success depends on embedding them thoughtfully into everyday workflows and balancing automation with human oversight. The best teams don’t just adopt tools; they build practices around trust, security, and collaboration. LLMs are not here to replace engineers but to elevate them, enabling faster delivery and deeper problem-solving.