Maintaining software quality is an important step in the software development lifecycle. Low software quality can lead to high maintenance costs, unhappy users, and even system failures, ultimately eroding trust.

This is where quality metrics in software engineering become essential, as they provide a structured way to assess different aspects of software, from performance to maintainability. By tracking the right metrics, teams can make data-driven improvements that enhance both the development process and the final product.

In this article, we’ll explore essential software quality metrics, their importance, and how to track them effectively. Whether you’re wondering how to measure software quality or looking for practical ways to implement software quality metrics, understanding and applying the right metrics will provide you with a solid foundation to enhance your software quality strategy.

What Are Software Quality Metrics?

Software quality metrics are a specific type of software metrics that emphasize the quality of the product, process, and project. They are more directly linked to process and product metrics than to project metrics, and they cover quality attributes such as performance, security, and maintainability.

There are different categories of metrics, such as:

- Product metrics assess the quality of the final product by looking at factors like reliability, security, and ease of use.

- Process metrics focus on how effective the development process is, tracking aspects such as code change frequency, defect rates, and team productivity.

Keeping an eye on these metrics is essential for maintaining high software quality and ensuring that you meet both user expectations and regulatory standards.

Why Track Software Quality Metrics?

Monitoring software quality metrics offers several key advantages.

- Better user experience: Tracking usability and performance metrics helps ensure the software aligns with user expectations, which improves the user experience.

- Cost-effectiveness: Identifying and resolving issues early in development is more cost-effective than fixing bugs after the software has been released.

- Meeting compliance standards: Monitoring quality metrics is crucial for staying compliant with industry regulations.

Top Software Quality Metrics to Track

Here are some of the most important metrics in software engineering that every development team should prioritize:

1. Code Quality

Well-written code reduces the likelihood of introducing bugs into the codebase. It also makes it easier for new team members to understand and contribute to the codebase, and it helps minimize technical debt.

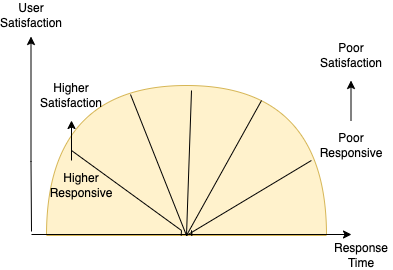

- Cyclomatic complexity: This metric indicates the code’s complexity, helping developers identify sections that may require simplification. It is widely used in code reviews, testing strategies, and assessing potential risks in codebases.

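Cyclomatic complexity M is defined on the program’s control-flow graph as

$$M = E - N + 2P$$

where E is the number of edges, N is the number of nodes, and P is the number of connected components (P = 1 for a single function or program).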

Image Reference: https://www.geeksforgeeks.org/cyclomatic-complexity/
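To make the formula concrete, the sketch below computes M for a control-flow graph given as node and edge lists. It is a minimal Python illustration, not a production analyzer: the graph representation and the example function are hypothetical, and real tools build the graph from source code for you.

```python
from collections import defaultdict


def cyclomatic_complexity(nodes, edges):
    """Return M = E - N + 2P for a control-flow graph.

    nodes: iterable of node labels; edges: iterable of (source, target) pairs.
    P (connected components) is counted with edge direction ignored.
    """
    nodes = set(nodes)
    edges = list(edges)
    neighbours = defaultdict(set)
    for src, dst in edges:
        neighbours[src].add(dst)
        neighbours[dst].add(src)

    # Count connected components with an iterative depth-first search.
    seen = set()
    components = 0
    for start in nodes:
        if start in seen:
            continue
        components += 1
        stack = [start]
        while stack:
            node = stack.pop()
            if node not in seen:
                seen.add(node)
                stack.extend(neighbours[node] - seen)

    return len(edges) - len(nodes) + 2 * components


# Hypothetical CFG for a function with a single if/else:
# entry -> cond -> (then | else) -> exit
nodes = ["entry", "cond", "then", "else", "exit"]
edges = [("entry", "cond"), ("cond", "then"), ("cond", "else"),
         ("then", "exit"), ("else", "exit")]
print(cyclomatic_complexity(nodes, edges))  # 2
```

Here E = 5, N = 5, and P = 1, so M = 2: one decision point gives two independent paths through the function.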

- Code churn: Code churn measures the frequency of code changes. While some churn is normal, excessive changes to specific code areas can signal instability, which may require refactoring.
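One way to approximate churn is to sum the lines added and deleted per file over a time window. The sketch below assumes input in the tab-separated format that `git log --numstat --format=` prints (added, deleted, path); the sample text and the 30-day window in the comment are illustrative assumptions.

```python
from collections import Counter


def churn_by_file(numstat_output):
    """Sum lines added + deleted per file from `git log --numstat --format=` output."""
    churn = Counter()
    for line in numstat_output.splitlines():
        parts = line.split("\t")
        if len(parts) != 3:
            continue  # skip blank separator lines between commits
        added, deleted, path = parts
        if added == "-" or deleted == "-":
            continue  # binary files report "-" instead of line counts
        churn[path] += int(added) + int(deleted)
    return churn


# Hypothetical sample; in practice you might capture real output with
# subprocess.run(["git", "log", "--since=30 days ago", "--numstat", "--format="],
#                capture_output=True, text=True).stdout
sample = "12\t3\tsrc/billing.py\n4\t0\tREADME.md\n\n30\t25\tsrc/billing.py\n-\t-\tlogo.png\n"
for path, lines_changed in churn_by_file(sample).most_common():
    print(path, lines_changed)
```

Files that stay at the top of this list across several windows are the usual candidates for a closer look or refactoring.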

2. Reliability

Reliability metrics evaluate the software’s ability to function consistently over time. Reliable software is less likely to fail, which is essential for user trust and system dependability. The following metrics are commonly used to measure software reliability.

- Defect density: This metric counts the number of defects relative to the size of the code. It’s often expressed as the number of defects per module or per thousand lines of code (KLOC). A lower defect density generally indicates better quality, and many teams use it to get a clearer picture of software quality across modules and releases.
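As a quick worked example of the per-KLOC form, the helper below divides the defect count by the code size in thousands of lines. The module and its numbers are hypothetical.

```python
def defect_density(defects, lines_of_code):
    """Defects per thousand lines of code (KLOC)."""
    if lines_of_code <= 0:
        raise ValueError("lines_of_code must be positive")
    return defects / (lines_of_code / 1000)


# Hypothetical module: 18 confirmed defects in 12,000 lines of code.
print(defect_density(18, 12_000))  # 1.5
```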

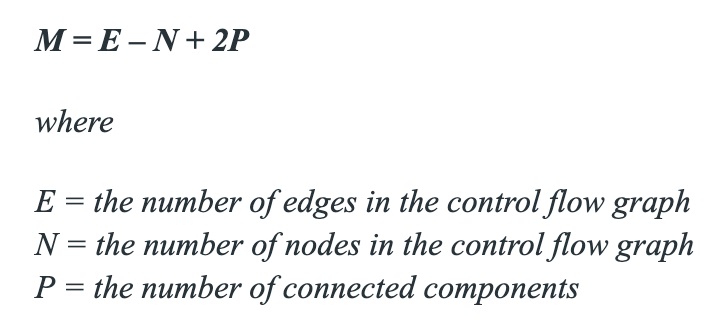

- Mean time between failures (MTBF): This measures the average operational time between system failures. Higher MTBF values indicate more reliable software.

Here is a conceptual diagram that visually represents MTBF.

Image Reference: created by the author
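MTBF is usually computed as total operational time divided by the number of failures. The sketch below assumes you already have the uptime that preceded each failure (for example, from incident records); the figures are hypothetical.

```python
def mtbf(uptime_hours):
    """Mean time between failures.

    uptime_hours: operating hours before each failure, measured from the
    start (or the previous restore) to the failure.
    """
    if not uptime_hours:
        raise ValueError("need at least one failure to compute MTBF")
    return sum(uptime_hours) / len(uptime_hours)


# Hypothetical service that failed three times after running
# 120, 200, and 160 hours respectively.
print(mtbf([120, 200, 160]))  # 160.0
```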

3. Performance Efficiency

Performance metrics assess how well the software performs under specific conditions. These metrics are crucial for applications where speed and responsiveness have a significant impact on user satisfaction, particularly in consumer-facing applications.

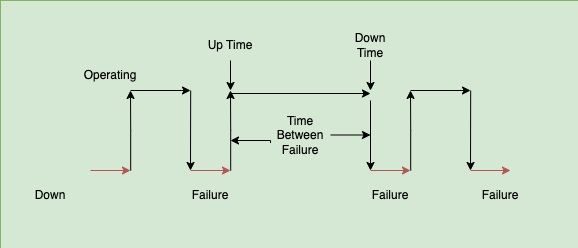

- Response time: This metric tracks how fast the system reacts to user input. Quicker response times lead to a smoother, more satisfying user experience, especially in web and mobile applications.

Here is a conceptual diagram that visually represents how response time impacts user experience.

Image Reference: created by the author

- Throughput: This tracks the number of transactions or operations the system processes within a given timeframe. This is essential for applications that handle a high volume of data or requests.
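Averages can hide slow outliers, so response time is often reported as percentiles such as p95, while throughput is a simple rate. The sketch below uses the nearest-rank percentile method and hypothetical load-test numbers; monitoring tools may use different percentile estimators.

```python
import math


def percentile(samples, pct):
    """Nearest-rank percentile: smallest value with at least pct% of samples at or below it."""
    if not samples:
        raise ValueError("need at least one sample")
    ordered = sorted(samples)
    rank = math.ceil(pct * len(ordered) / 100)  # 1-based rank
    return ordered[max(rank, 1) - 1]


def throughput(request_count, window_seconds):
    """Requests (or transactions) processed per second in a time window."""
    return request_count / window_seconds


# Hypothetical response times in milliseconds from a load test.
latencies_ms = [120, 95, 110, 480, 130, 105, 98, 102, 115, 900]
print("p50:", percentile(latencies_ms, 50))            # p50: 110
print("p95:", percentile(latencies_ms, 95))            # p95: 900
print("throughput:", throughput(12_000, 60), "req/s")  # throughput: 200.0 req/s
```

The median here looks healthy, but the p95 exposes the slow tail that some users actually experience.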

4. Security

Security metrics are critical for protecting user data and preventing security breaches. As security issues can severely impact both users and the organization, tracking these metrics is essential, especially for applications handling sensitive information.

- Vulnerability density: This measures how many security issues exist in the code compared to its size. It helps assess the overall security risk by showing how many weaknesses exist in a certain amount of code or across different parts of a system.

- Time to resolve security issues: This metric measures the average time taken to address security vulnerabilities. Lower resolution times reflect a proactive approach to security.
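Both security metrics reduce to simple arithmetic once findings are exported from a scanner or issue tracker. In this sketch, the data and the choice to skip still-open issues when averaging are assumptions.

```python
from datetime import datetime


def vulnerability_density(vulnerabilities, lines_of_code):
    """Security findings per thousand lines of code (KLOC)."""
    return vulnerabilities / (lines_of_code / 1000)


def mean_time_to_resolve_days(issues):
    """Average days from report to fix; unresolved issues (resolved is None) are skipped."""
    durations = [
        (resolved - reported).total_seconds() / 86_400
        for reported, resolved in issues
        if resolved is not None
    ]
    return sum(durations) / len(durations) if durations else None


# Hypothetical findings as (reported_at, resolved_at) pairs.
issues = [
    (datetime(2024, 3, 1), datetime(2024, 3, 4)),  # 3 days
    (datetime(2024, 3, 2), datetime(2024, 3, 9)),  # 7 days
    (datetime(2024, 3, 5), None),                  # still open
]
print(vulnerability_density(6, 40_000))   # 0.15
print(mean_time_to_resolve_days(issues))  # 5.0
```

Tracking open issues separately matters: skipping them keeps the average honest about fixed work, but a growing backlog of unresolved findings would not show up in this number alone.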

5. Maintainability

Well-maintained code helps control future costs and makes it easier to adapt the software to new needs. These metrics measure how easy it is for developers to update, fix, or improve the software.

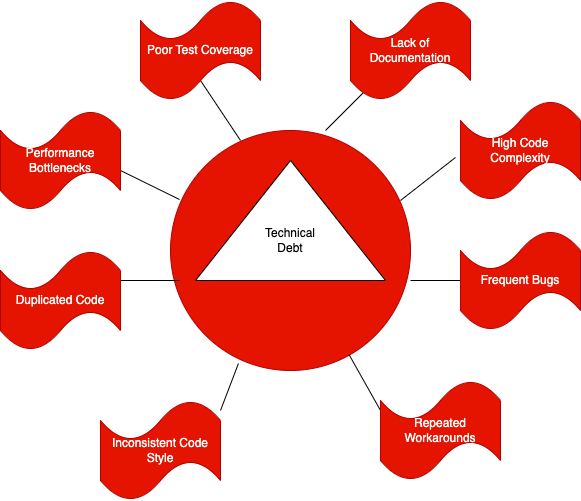

- Technical debt: Technical debt accumulates when development teams take shortcuts to deliver a feature or project quickly, leaving code that must be improved or fixed later. This metric estimates the time and resources needed to fix those problems. High technical debt can delay progress and make future updates harder, so it’s important to keep track of it.

The following diagram depicts technical debt red flags.

Image Reference: created by the author
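One common way to express technical debt is as a ratio of estimated remediation effort to the effort of writing the code, similar to the technical debt ratio reported by SonarQube. The sketch below assumes a tunable development cost of 30 minutes per line; treat that parameter and the sample numbers as assumptions to calibrate for your team.

```python
def technical_debt_ratio(remediation_minutes, lines_of_code, minutes_per_line=30):
    """Estimated remediation effort as a share of the effort to write the code.

    remediation_minutes: estimated time to fix all known maintainability issues
    minutes_per_line: assumed average cost to develop one line (tunable)
    """
    development_minutes = lines_of_code * minutes_per_line
    return remediation_minutes / development_minutes


# Hypothetical codebase: 20,000 lines with about 120 hours of estimated fixes.
ratio = technical_debt_ratio(remediation_minutes=120 * 60, lines_of_code=20_000)
print(f"{ratio:.1%}")  # 1.2%
```

Watching how this ratio trends release over release is usually more informative than its absolute value.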

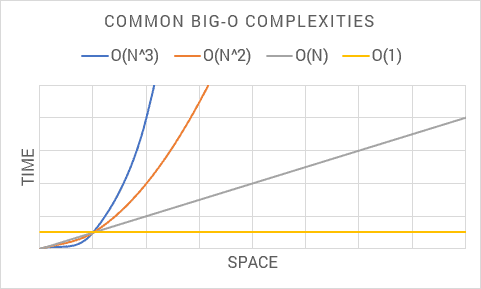

- Code complexity: Lower code complexity means the code is simpler to understand and modify, which makes it easier to manage and maintain over time. Less complex code is more adaptable to change and less error-prone, improving overall maintainability. In this context, code complexity is usually measured with structural metrics such as cyclomatic complexity. It is related to, but distinct from, algorithmic complexity, which is expressed in Big O notation and describes how the time or space an algorithm needs scales with the size of its input.

The following shows common Big O notations from an algorithmic analysis.
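In practice, structural code complexity is often estimated by counting decision points in the source: each branch or loop adds a path, and the count plus one approximates cyclomatic complexity. The sketch below does this with Python’s standard `ast` module; the set of counted node types is a simplified assumption, and dedicated tools may count some constructs differently.

```python
import ast

# Simplified assumption: each of these nodes adds one decision point.
BRANCH_NODES = (ast.If, ast.For, ast.AsyncFor, ast.While, ast.IfExp,
                ast.ExceptHandler, ast.comprehension)


def approx_cyclomatic_complexity(source):
    """Return 1 + the number of decision points found in the source code."""
    tree = ast.parse(source)
    decisions = 0
    for node in ast.walk(tree):
        if isinstance(node, BRANCH_NODES):
            decisions += 1
        elif isinstance(node, ast.BoolOp):
            # `a and b and c` short-circuits at two points, adding two paths
            decisions += len(node.values) - 1
    return decisions + 1


code = '''
def shipping_cost(order):
    if order.total > 100 and order.country == "US":
        return 0
    for item in order.items:
        if item.oversized:
            return 25
    return 10
'''
print(approx_cyclomatic_complexity(code))  # 5
```

The example counts two `if` statements, one `for` loop, and one `and`, giving four decision points and a complexity of 5.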

6. Usability

Software usability metrics are a way to measure how effective, efficient, and satisfying a product is to use. They can help designers quantify usability objectively rather than making assumptions. For consumer apps, a great user experience can determine the difference between users adopting or abandoning the product.

- User satisfaction score: This score is typically gathered through surveys or feedback forms, helping to measure how happy users are with the software.

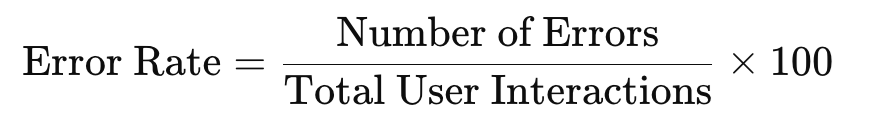

- Error rate: By tracking the errors users make, this metric helps pinpoint confusing or poorly designed features, providing valuable insights into where usability can be improved. It can be calculated using the formula below.

Image Reference: created by the author
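The sketch below shows one common way to compute both usability figures: error rate as the percentage of task attempts that contain at least one error, and a CSAT-style score as the percentage of 1-5 ratings that are 4 or 5. These formulations and the sample data are assumptions; adjust them to match how your usability tests and surveys are defined.

```python
def error_rate(attempts_with_errors, total_attempts):
    """Percentage of task attempts in which the user made at least one error."""
    return 100 * attempts_with_errors / total_attempts


def csat(ratings, satisfied_threshold=4):
    """Percentage of 1-5 survey ratings at or above the 'satisfied' threshold."""
    satisfied = sum(1 for r in ratings if r >= satisfied_threshold)
    return 100 * satisfied / len(ratings)


# Hypothetical usability test: 50 checkout attempts, 7 of which contained an error.
print(error_rate(7, 50))               # 14.0
print(csat([5, 4, 3, 5, 2, 4, 4, 5]))  # 75.0
```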

7. Test Coverage

Test coverage metrics are quantitative indicators of how thoroughly the software is tested for quality and reliability. They track how much of the application the test suite exercises during development. Tracked sprint by sprint, as is common in Agile teams, these metrics help development teams build a high-performing product that meets user expectations. Higher test coverage often correlates with fewer bugs reaching production.

- Unit test coverage: Measures the percentage of code covered by unit tests, helping to ensure that individual functions work as expected.

- Functional test coverage: Evaluates how well a software application’s functional requirements have been tested, ensuring all specified features are validated. This metric helps identify gaps or missed functionality before the software is deployed.
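Both coverage figures are ratios. In the sketch below, the line counts would normally come from a coverage tool such as coverage.py or JaCoCo, and the requirement IDs from a traceability matrix; all values shown are hypothetical.

```python
def unit_test_coverage(covered_lines, executable_lines):
    """Percentage of executable lines run by the unit test suite."""
    return 100 * covered_lines / executable_lines


def functional_coverage(requirements, tested):
    """Percentage of requirements with at least one passing test, plus the untested ones."""
    required = set(requirements)
    untested = sorted(required - set(tested))
    percent = 100 * (len(required) - len(untested)) / len(required)
    return percent, untested


# Hypothetical requirement IDs and the ones covered by passing tests.
requirements = ["REQ-1", "REQ-2", "REQ-3", "REQ-4"]
tested = ["REQ-1", "REQ-2", "REQ-4"]
print(unit_test_coverage(1_640, 2_000))           # 82.0
print(functional_coverage(requirements, tested))  # (75.0, ['REQ-3'])
```

Returning the untested IDs, not just the percentage, makes the gaps this metric is meant to reveal directly actionable.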

8. Customer Satisfaction

Customer satisfaction metrics indicate how users perceive the software’s quality and how satisfied they are with the product or service. Some examples of these metrics include:

- Net promoter score (NPS): This metric indicates how likely users are to recommend the software to others, and it is widely used as a proxy for user satisfaction. A higher NPS means more users are likely to promote the product.

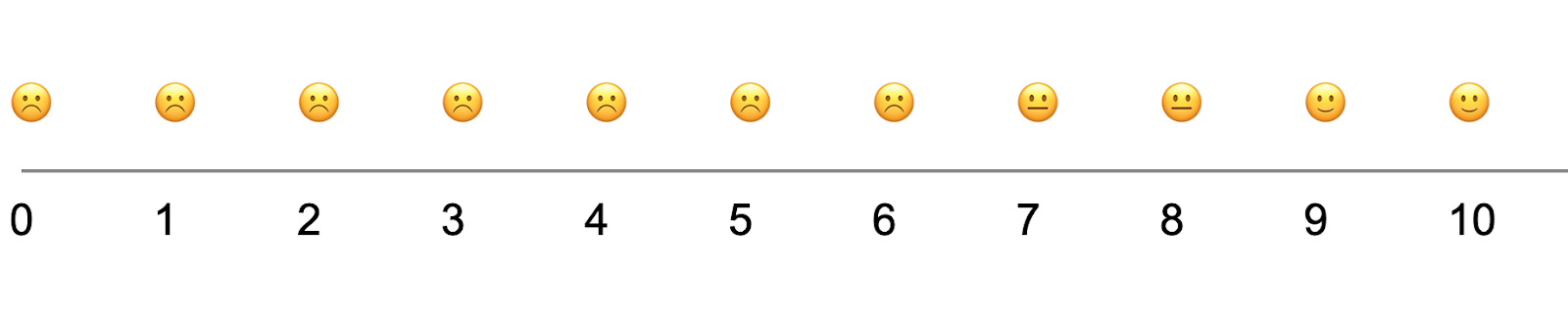

In NPS, customers are categorized into three groups based on their response to the question, “How likely are you to recommend our product or service to a friend?”

Respondents rate their answers on a scale from 0 to 10, and their score determines their category.

- 0-6: Sad faces represent detractors.

- 7-8: Neutral faces represent passives.

- 9-10: Happy faces represent promoters.

Image Reference: created by the author
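NPS is calculated as the percentage of promoters minus the percentage of detractors, so it ranges from -100 to +100; passives count toward the total number of responses but not toward the score. The survey responses below are hypothetical.

```python
def net_promoter_score(scores):
    """NPS = % promoters (9-10) minus % detractors (0-6)."""
    if not scores:
        raise ValueError("need at least one response")
    promoters = sum(1 for s in scores if s >= 9)
    detractors = sum(1 for s in scores if s <= 6)
    return 100 * (promoters - detractors) / len(scores)


# Hypothetical survey of 10 users: 4 promoters, 3 passives, 3 detractors.
responses = [10, 9, 9, 8, 7, 7, 6, 5, 10, 3]
print(net_promoter_score(responses))  # 10.0
```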

- Customer support tickets: Tracking support tickets helps identify common issues and provides insights into areas needing improvement.

9. Compliance

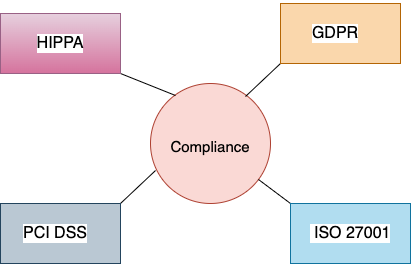

In industries with strict regulations, compliance metrics help verify that software meets the necessary standards and legal requirements. This reduces the risk of legal issues and helps ensure the software operates properly within regulated environments.

- Audit trail completeness: Measures whether every user action is logged and traceable, which is crucial for security and for compliance with legal regulations.

- Regulatory compliance: Tracks whether the software adheres to industry rules and legal requirements.
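Audit trail completeness can be expressed as the share of auditable actions that have a matching log entry. The sketch below assumes each action and its audit-log entry share an ID, which is a hypothetical design choice; it also returns the missing IDs so gaps can be investigated.

```python
def audit_trail_completeness(expected_event_ids, logged_event_ids):
    """Percentage of auditable actions with a matching audit-log entry, plus the missing IDs."""
    expected = set(expected_event_ids)
    missing = expected - set(logged_event_ids)
    completeness = 100 * (len(expected) - len(missing)) / len(expected)
    return completeness, sorted(missing)


# Hypothetical IDs: actions recorded by the application vs. entries in the audit log.
actions = ["a1", "a2", "a3", "a4", "a5"]
audit_log = ["a1", "a2", "a4", "a5"]
print(audit_trail_completeness(actions, audit_log))  # (80.0, ['a3'])
```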

The image below illustrates some of the key regulations and standards that certain industries must adhere to.

Image Reference: created by the author

GDPR: General Data Protection Regulation

HIPAA: Health Insurance Portability and Accountability Act

PCI DSS: Payment Card Industry Data Security Standard

ISO 27001: International standard for information security management

Best Practices for Tracking and Using Software Quality Metrics

Tracking quality metrics effectively requires a strategic approach.

- Use real-time monitoring: Real-time monitoring lets teams anticipate operational problems, supports faster decisions, and gives quick insight into important events. Watching software performance in real time helps teams spot and fix problems quickly, leading to more stable software and a smoother experience for users.

Below is an example Grafana dashboard. Grafana is one of several monitoring tools available.

Image Reference: https://grafana.com/oss/grafana

- Regularly review metrics: Continuously tracking metrics over time helps teams identify patterns or trends that may not be apparent in short-term data. This lets them make better decisions and changes to improve the software. Hold weekly trend reviews for defect density, deployment frequency, and rollbacks. Each quarter, inspect lagging outcomes like NPS, MTBF, and technical debt to guide roadmap investment.

- Combine metrics with feedback: Metrics provide useful numbers, but adding user feedback gives you a clearer picture of the software’s quality. User feedback helps make sense of the data and makes sure the software meets users’ needs.

- Prioritize what matters: Connect each metric to a real business or user goal. Pick one north-star metric and two or three leading indicators that affect it. Review the list every three months and remove any metrics that are no longer helpful.

- Align stakeholders: Create a shared glossary so everyone (technical and non-technical) understands each metric. Define thresholds together during roadmap planning. Share dashboard snapshots during sprint reviews so stakeholders stay aligned.

- Automate & integrate: Embed static-analysis tools (e.g., SonarQube, Semgrep) in pull requests. Stream logs, traces, and custom metrics (via OpenTelemetry) into your observability stack (e.g., Grafana, Datadog). Automation keeps quality checks consistent and cuts manual work.

- Iterate continuously: When a metric breaches its threshold, treat it as a learning opportunity rather than a reason to assign blame. Run blameless post-mortems, use feature flags for gradual rollouts, and verify data integrity after major refactors. Periodically clean out stale metrics to keep your dashboards sharp.
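The automation and threshold practices above can be combined into a small quality gate that runs in CI after analysis and tests. The sketch below is hypothetical: the metric names, limits, and the source of the current values are assumptions to replace with your own.

```python
# Hypothetical metric names and limits; agree on real values with stakeholders.
THRESHOLDS = {
    "defect_density_per_kloc": ("max", 1.0),
    "unit_test_coverage_pct": ("min", 80.0),
    "p95_latency_ms": ("max", 500.0),
}


def breached(metrics, thresholds=THRESHOLDS):
    """Return a message for every metric that is missing or outside its limit."""
    problems = []
    for name, (kind, limit) in thresholds.items():
        value = metrics.get(name)
        if value is None:
            problems.append(f"{name}: no data")
        elif kind == "max" and value > limit:
            problems.append(f"{name}: {value} > {limit}")
        elif kind == "min" and value < limit:
            problems.append(f"{name}: {value} < {limit}")
    return problems


# In CI these values would come from your analysis, test, and monitoring tools.
current = {"defect_density_per_kloc": 1.5,
           "unit_test_coverage_pct": 82.0,
           "p95_latency_ms": 420.0}
problems = breached(current)
for message in problems:
    print("threshold breached:", message)
```

In a CI job you would typically end the script with `sys.exit(1 if problems else 0)` so the pipeline step fails when any threshold is breached. Reporting missing data as a breach is a deliberate choice here, so that a broken metrics feed does not silently pass the gate.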

Choosing the Right Metrics for Your Project

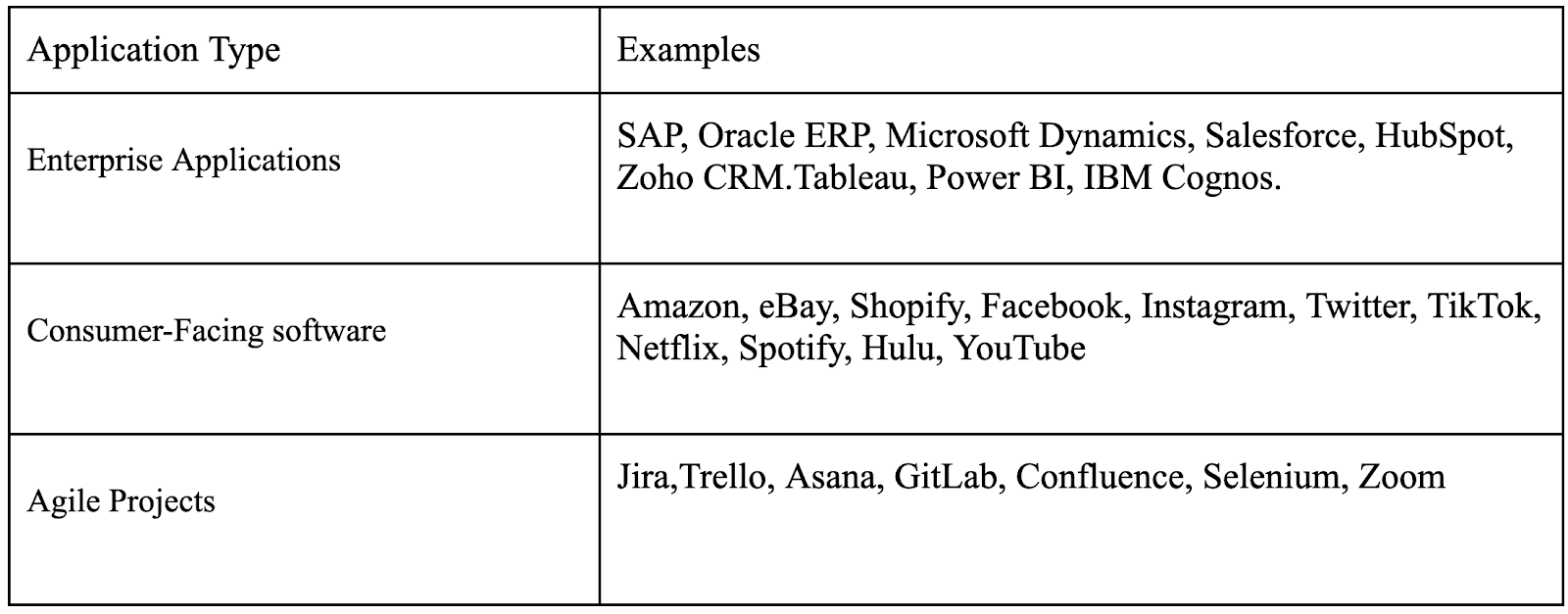

Not all metrics work for every project. When selecting quality metrics in software engineering, it’s important to choose measures that align with the type of software and the specific goals of your project.

- Enterprise applications: For complex software used by large organizations, focusing on reliability, maintainability, and compliance is key.

- Consumer-facing software: Prioritize usability, performance efficiency, and customer satisfaction, as these impact the user experience.

- Agile projects: Agile projects benefit from metrics that support iterative improvements, such as code quality and defect density.

Consider your software’s purpose, industry, and audience when selecting metrics to ensure they provide relevant insights.

Tools for Tracking Software Quality Metrics

Below is a quick tour of popular platforms that help automate measurement and keep quality front-of-mind. Each solves a different slice of the problem; many teams combine several.

- SonarQube / SonarCloud: Widely used static-analysis tool that analyzes pull requests, flags code smells, and tracks technical-debt trends.

- New Relic: Cloud-based APM with live response-time, throughput, and error-rate views; strong distributed-tracing support.

- Datadog: Unified metrics, logs, traces, and synthetic monitoring in one SaaS platform; great for alerting on service-level objectives.

- Grafana + Prometheus: Open-source combo. Prometheus scrapes metrics, which Grafana then visualizes with flexible dashboards and alert rules.

- Sentry: Real-time error-tracking and performance-monitoring tool focused on client and server events; excellent for measuring release health.

Conclusion

Tracking the right metrics for software quality is essential for creating reliable, efficient, and user-friendly software. By focusing on areas like code quality, user satisfaction, performance, and security, teams gain clear insights to guide improvements and deliver high-quality products.

Making sure these metrics match your project’s goals helps keep your efforts focused and efficient, improving the overall quality strategy. For instance, consumer apps with large user bases may prioritize usability and performance, while enterprise software may focus more on compliance and maintainability.

Incorporating these metrics into the software development lifecycle enables continuous improvement. Regular evaluation provides data that inform iterative updates and proactive solutions, helping your team address issues early and adapt to evolving user needs. This approach not only meets user expectations but can exceed them, creating software that stands out for its quality and dependability.