AI tools today can write tests, explain code, draft functions, and even scaffold whole projects. They don’t replace disciplined engineering practices, but they are changing how developers work every day.

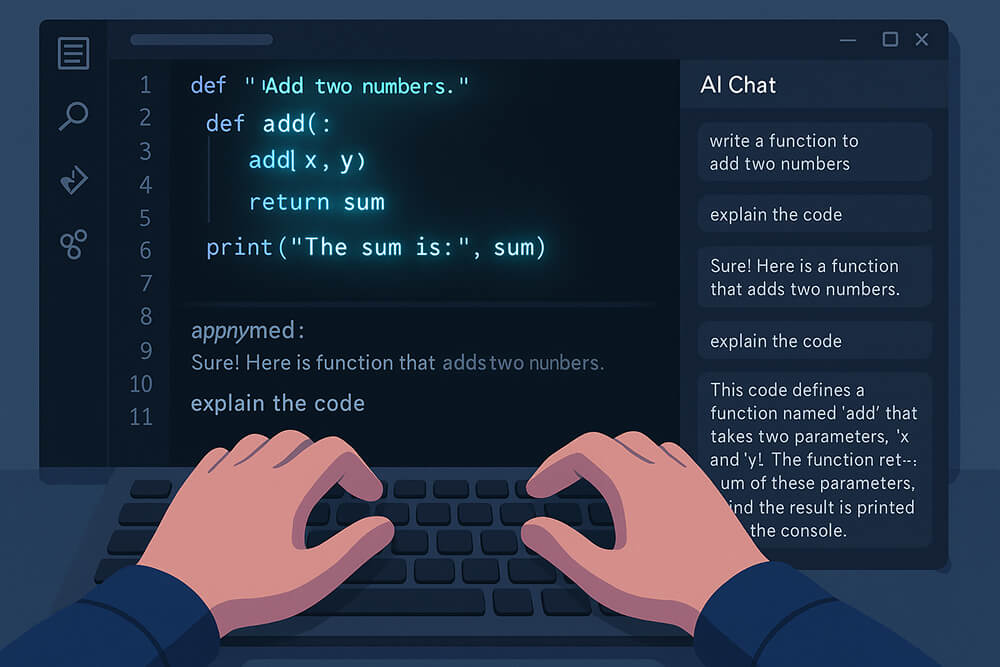

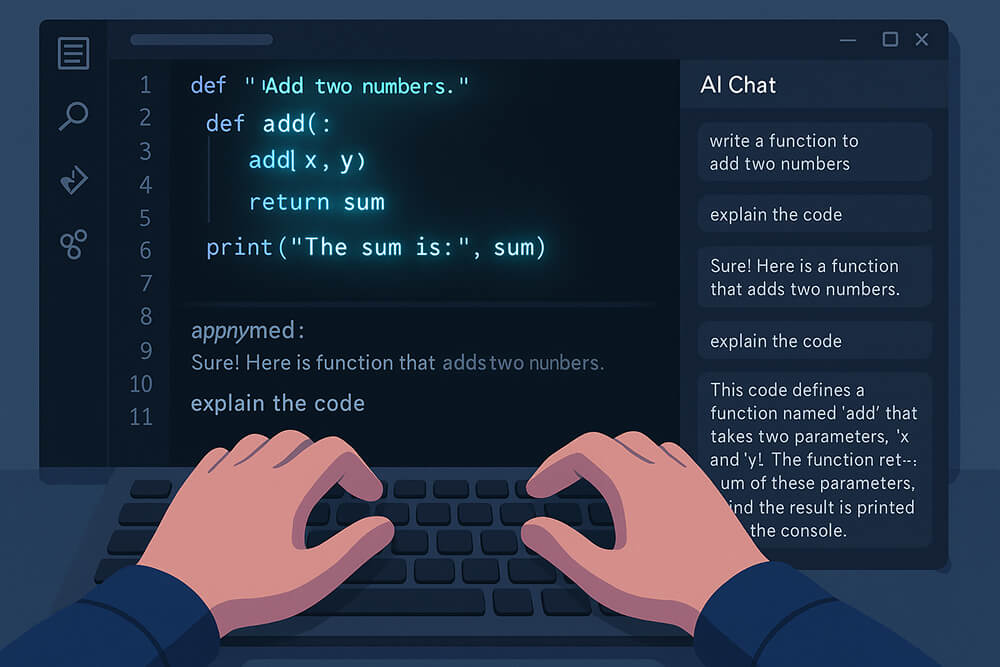

1. Inline Coding Assistants

These tools sit inside editors such as VS Code and the JetBrains IDEs, or in your terminal, and suggest code as you type. They’re the closest thing to real-time AI coding.

- GitHub Copilot: Strong autocomplete, chat, and refactor help across many languages.

- Amazon CodeWhisperer (now part of Amazon Q Developer): Good for AWS workflows. Suggests code tied to AWS services and offers security scans on suggestions.

- JetBrains AI Assistant: Built into IntelliJ, PyCharm, and others; adds refactors, doc blocks, and issue explanations.

- Tabnine and Codeium: Developer-friendly, fast autocomplete. On-prem options for teams with strict data controls.

- Cursor, Replit AI: Editor + assistant hybrids that emphasize chat-driven changes across a project.

Where they shine:

- Boilerplate helpers and utility functions

- Regexes, docstrings, and quick patterns

- Writing or updating unit tests

Treat these suggestions as you would a junior pair programmer’s work: review them, run the tests, and check for subtle logic defects.
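As a minimal sketch of what that review can look like, assume an assistant drafted a hypothetical `chunk` helper. The function name, the first-draft bug described in the comment, and the checks are illustrative assumptions, not output from any particular tool.

```python
def chunk(items, size):
    """Split `items` into consecutive lists of at most `size` elements."""
    if size <= 0:
        raise ValueError("size must be positive")
    # A plausible first draft loops over range(len(items) // size), which
    # silently drops a trailing partial chunk. Stepping by `size` keeps it.
    return [items[i:i + size] for i in range(0, len(items), size)]


def run_checks():
    """Edge-case checks a reviewer might add before accepting the helper."""
    assert chunk([1, 2, 3, 4], 2) == [[1, 2], [3, 4]]
    assert chunk([1, 2, 3, 4, 5], 2) == [[1, 2], [3, 4], [5]]  # partial tail
    assert chunk([], 3) == []
    try:
        chunk([1], 0)
    except ValueError:
        pass
    else:
        raise AssertionError("expected ValueError for size=0")


run_checks()
```

The happy-path check alone would pass with the buggy draft; it is the uneven-length and empty-input cases that expose the defect, which is why edge cases deserve the reviewer's attention.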

2. Chat Copilots for Repositories and Docs

These assistants index your entire repository (plus any linked docs) so you can talk to the codebase itself. Ask, “Why does this call time out in prod?” and they jump to the files, trace dependencies, and draft a patch.

- Sourcegraph Cody: Good at reasoning across multiple files and at code search.

- Codeium Chat, Copilot Chat, Cursor: Project-aware chat tools that let you make changes directly from the chat and prepare them for a PR.

Where they shine:

- Mapping unfamiliar code paths and data flows

- Refactors that span many files

- Migration guides and design-level summaries for the team

However, good answers depend on what fits in the assistant’s context window and index. Make sure it can read the right folders, dependency files, and docs.
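One way to reason about this is a rough budget check. The sketch below assumes a heuristic of about four characters per token and a hypothetical set of files listed in priority order. Real tokenizers and indexing strategies differ by tool, so treat the numbers as illustrative only.

```python
CHARS_PER_TOKEN = 4  # assumption: rough heuristic, not a real tokenizer


def estimate_tokens(text):
    """Approximate the token count of `text`, rounding up."""
    return (len(text) + CHARS_PER_TOKEN - 1) // CHARS_PER_TOKEN


def pack_context(files, budget_tokens):
    """Greedily include (path, text) pairs, in priority order, within budget."""
    chosen, skipped, used = [], [], 0
    for path, text in files:
        cost = estimate_tokens(text)
        if used + cost <= budget_tokens:
            chosen.append(path)
            used += cost
        else:
            skipped.append(path)
    return chosen, skipped, used


# Hypothetical files; the placeholder strings only stand in for file sizes.
files = [
    ("package.json", "x" * 400),
    ("src/client.py", "x" * 2000),
    ("docs/timeouts.md", "x" * 1200),
]
chosen, skipped, used = pack_context(files, budget_tokens=700)
print(chosen, skipped, used)
```

If the docs that explain the timeout end up in the skipped list, the assistant will answer without them, which is a common cause of confident but incomplete answers.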

3. Quality, Security, and Test Generation Tools

These tools blend AI code generation with static analysis, so you get code that meets quality gates and security policies, not just code that compiles.

- Sonar (SonarQube/SonarCloud + AI): Automatically explains issues and suggests changes that satisfy your quality gates.

- Snyk (AI Fix), Semgrep Pro, GitGuardian: Security-focused suggestions for vulnerable patterns, secrets, and misconfigurations.

- Diffblue Cover (Java): Creates high-coverage unit tests from existing code.

Where they shine:

- Lifting baseline code quality across the repo

- Catching insecure patterns early in the PR flow

- Seeding unit or property-based tests that you can extend

Always review the AI’s security fixes, especially around input sanitization, auth flows, and cloud IAM, before merging.
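For input sanitization, one concrete review step is to confirm that a suggested fix actually binds user input as data. The sketch below uses Python's standard `sqlite3` module with a hypothetical `users` table and `find_user` helper; it illustrates the kind of check a reviewer might run and is not the output of any specific tool.

```python
import sqlite3


def find_user(conn, username):
    """Look up users by exact name using a parameterized query."""
    # The `?` placeholder makes the driver bind `username` as a value, so
    # quotes in the input cannot change the structure of the SQL statement.
    cur = conn.execute("SELECT id, name FROM users WHERE name = ?", (username,))
    return cur.fetchall()


def make_db():
    """Build a throwaway in-memory database for the check."""
    conn = sqlite3.connect(":memory:")
    conn.execute("CREATE TABLE users (id INTEGER PRIMARY KEY, name TEXT)")
    conn.executemany("INSERT INTO users (name) VALUES (?)", [("alice",), ("bob",)])
    return conn


conn = make_db()
normal = find_user(conn, "alice")
# A classic injection payload should match no rows rather than every row.
injected = find_user(conn, "' OR '1'='1")
print(normal, injected)
```

A fix that still builds the query with string concatenation would return both rows for the second call, so a test like this makes the difference visible before merge.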

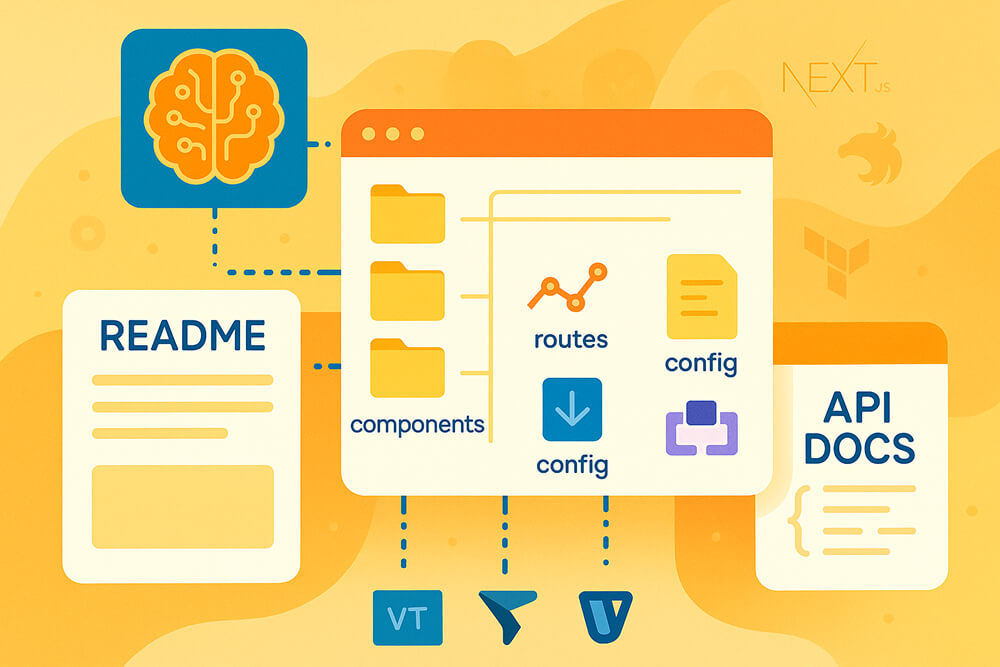

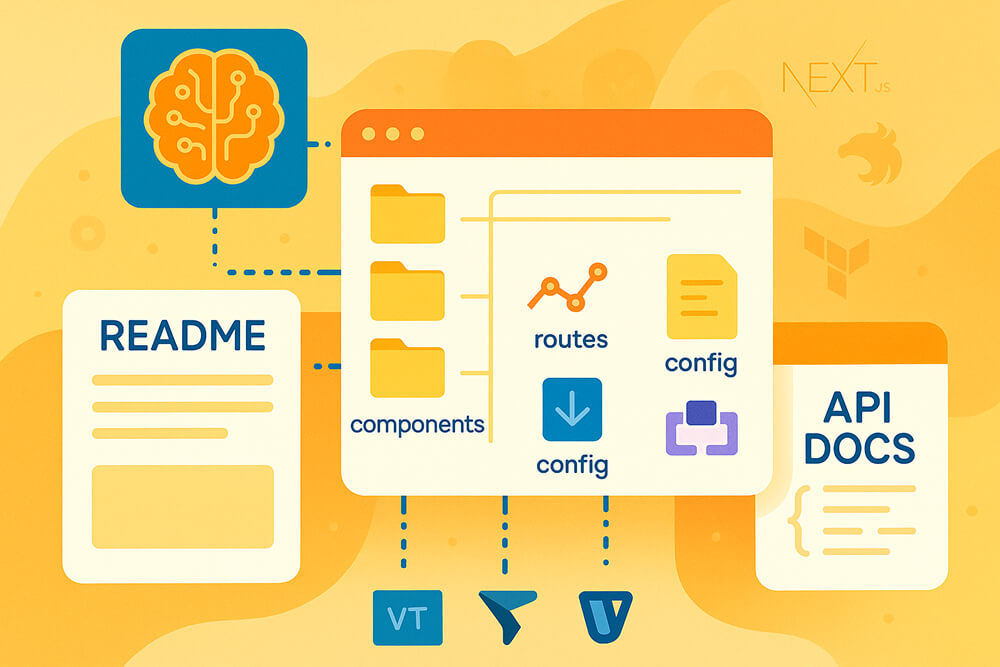

4. Framework and Project Scaffolding Tools

These tools generate ready-to-run projects with routes, models, configuration, and infrastructure templates, so you don’t have to start from scratch.

- Framework CLIs + AI prompts (Next.js, Remix, NestJS, Spring Initializr): Answer a few questions and get a repo with linting, testing, and build scripts wired up.

- Terraform/cloud assistants (AWS, Azure, GCP): Draft infrastructure modules, policies, and pipelines in HCL or YAML.

- IBM watsonx Code Assistant: Aimed at enterprises; strong at generating Ansible playbooks and modernizing legacy workloads.

Where they shine:

- Greenfield prototypes, hackathons, and internal tools

- Standardized templates that match your org’s conventions

Decide on lint rules, test harnesses, and folder structure first. If you leave them undefined, the AI will invent its own, and you’ll pay for the cleanup later.
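Once those conventions are written down, a small check can confirm that a generated project follows them. This is a minimal sketch: the required paths and the `missing_paths` helper are hypothetical, and the demo runs against a temporary directory standing in for a scaffolded repo.

```python
import tempfile
from pathlib import Path

# Hypothetical conventions; replace with whatever your team agrees on.
REQUIRED_PATHS = ("src", "tests", "README.md", ".editorconfig")


def missing_paths(root, required=REQUIRED_PATHS):
    """Return the required paths that do not exist under `root`."""
    root = Path(root)
    return [name for name in required if not (root / name).exists()]


# Demo against a throwaway directory standing in for a generated project.
with tempfile.TemporaryDirectory() as tmp:
    (Path(tmp) / "src").mkdir()
    (Path(tmp) / "README.md").write_text("# demo\n")
    missing = missing_paths(tmp)

print(missing)
```

Running a check like this in CI right after scaffolding surfaces drift from the agreed structure while it is still cheap to fix.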

5. Documentation and Learning Companions

While not strictly code generators, these tools save hours of work by generating READMEs, ADRs, code comments, and “how it works” walkthroughs for new teammates.

- Copilot/Codeium/Cody: Convert code into summaries and usage notes.

- Mintlify AI: Generates structured project docs and API references.

- Notebook assistants (Jupyter, Colab): Explain cells, add narrative, and suggest next steps for data science work.

Where they shine:

- Explaining legacy areas in plain language

- Turning one-off Slack answers into durable docs

- Keeping READMEs and ADRs synced with code changes

Always verify technical accuracy and remove any secrets or private data before publishing.
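As a rough illustration of the secret-removal step, the sketch below redacts two kinds of strings from generated docs: values that look like AWS access key IDs and simple `name=value` credentials. The patterns and the `redact` helper are assumptions chosen for the example and are far from exhaustive; a dedicated secret scanner is the safer choice for real pipelines.

```python
import re

# Assumption: AWS access key IDs of the form AKIA + 16 uppercase letters/digits.
AWS_KEY_ID = re.compile(r"\bAKIA[0-9A-Z]{16}\b")
# Assumption: credentials written as name=value or name: value.
ASSIGNMENT = re.compile(
    r"(?i)\b(password|secret|token|api[_-]?key)(\s*[:=]\s*)(\S+)"
)


def redact(text):
    """Replace likely secrets in `text` with a placeholder."""
    text = AWS_KEY_ID.sub("[REDACTED]", text)
    # Keep the variable name and separator so the doc still reads naturally.
    return ASSIGNMENT.sub(lambda m: m.group(1) + m.group(2) + "[REDACTED]", text)


print(redact("export API_KEY=abc123"))
print(redact("key id AKIAIOSFODNN7EXAMPLE in logs"))
```

Pattern-based redaction misses anything that does not match a known shape, so a human read-through before publishing is still needed.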

How to Choose the Best AI Code Generation Tool

Run through this short checklist before you decide:

- Languages & frameworks: Confirm the tool supports your runtime, build system, and test runner.

- Context depth: For inline tools, check the token window size; for repo copilots, see how many files they index, how often they refresh, and whether they handle monorepos or linked docs.

- Privacy & compliance: Review training limits, PII handling procedures, on-premises or private cloud options, and audit logs.

- Security posture: Look for secret scanning, license checks, dependency insight, and policy enforcement.

- Integrations: IDE plug-ins, CI/CD hooks, ticketing (Jira), Git providers, and SSO.

- Latency & reliability: Fast completions matter; slow tools get disabled.

- Cost & licensing: Seat versus usage pricing, plus extra fees for long context windows, RAG, or custom models.

- Admin controls: Model selection, domain knowledge bases, prompt/response logging, redaction, and fallbacks.
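For the cost item in the checklist, a quick break-even calculation can show when seat pricing beats usage pricing. The prices and request volumes below are made-up placeholders, not any vendor's actual rates.

```python
def seat_cost(seats, price_per_seat):
    """Monthly cost under per-seat pricing."""
    return seats * price_per_seat


def usage_cost(requests, price_per_1k_requests):
    """Monthly cost under per-request pricing."""
    return requests / 1000 * price_per_1k_requests


def break_even_requests(price_per_seat, price_per_1k_requests):
    """Requests per developer per month at which both models cost the same."""
    return price_per_seat / price_per_1k_requests * 1000


# Hypothetical numbers: 20 per seat, 4 per 1,000 requests, 10 developers.
threshold = break_even_requests(price_per_seat=20, price_per_1k_requests=4)
light_team = usage_cost(requests=10 * 2000, price_per_1k_requests=4)
flat_team = seat_cost(seats=10, price_per_seat=20)
print(threshold, light_team, flat_team)
```

In this made-up scenario, developers who send fewer requests than the threshold are cheaper on usage pricing, while heavy users favor seats. Surcharges for long context windows or custom models would shift the threshold.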

Bottom line

There’s no single “best” tool for everything. Instead, map tools to tasks: inline assistants for rapid snippets, repo-aware copilots for cross-file refactors, quality-and-security analyzers to keep standards high, and scaffolding or doc generators to speed setup and knowledge-sharing.

Begin with a focused pilot: one team and a few well-defined use cases. Track metrics such as review time saved and defect reduction, refine your guardrails, and then roll out the winners more broadly.
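Tracking those metrics can be as simple as comparing a baseline period with the pilot period. The sketch below uses invented sample numbers and a hypothetical `percent_change` helper; in practice the inputs would come from your review tool and issue tracker.

```python
from statistics import median


def percent_change(before, after):
    """Relative change from `before` to `after`, in percent."""
    if before == 0:
        raise ValueError("baseline must be non-zero")
    return (after - before) / before * 100


# Invented sample data: hours from PR opened to approved, per pull request.
baseline_review_hours = [6, 8, 10, 7, 9]
pilot_review_hours = [5, 6, 7, 6, 8]

# Invented sample data: defects reported per release.
baseline_defects = 12
pilot_defects = 9

review_delta = percent_change(
    median(baseline_review_hours), median(pilot_review_hours)
)
defect_delta = percent_change(baseline_defects, pilot_defects)
print(review_delta, defect_delta)
```

Medians are used for review time so that one unusually slow pull request does not dominate the comparison. A small pilot produces noisy numbers, so read the results as a direction rather than a precise measurement.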