Artificial intelligence (AI) is no longer an experiment sitting at the side of engineering. It is now part of everyday work: writing code, assisting with reviews, running tests, and even shaping planning. As more teams adopt these tools, the question is not just how we use AI, but how we measure whether it is truly making things better.

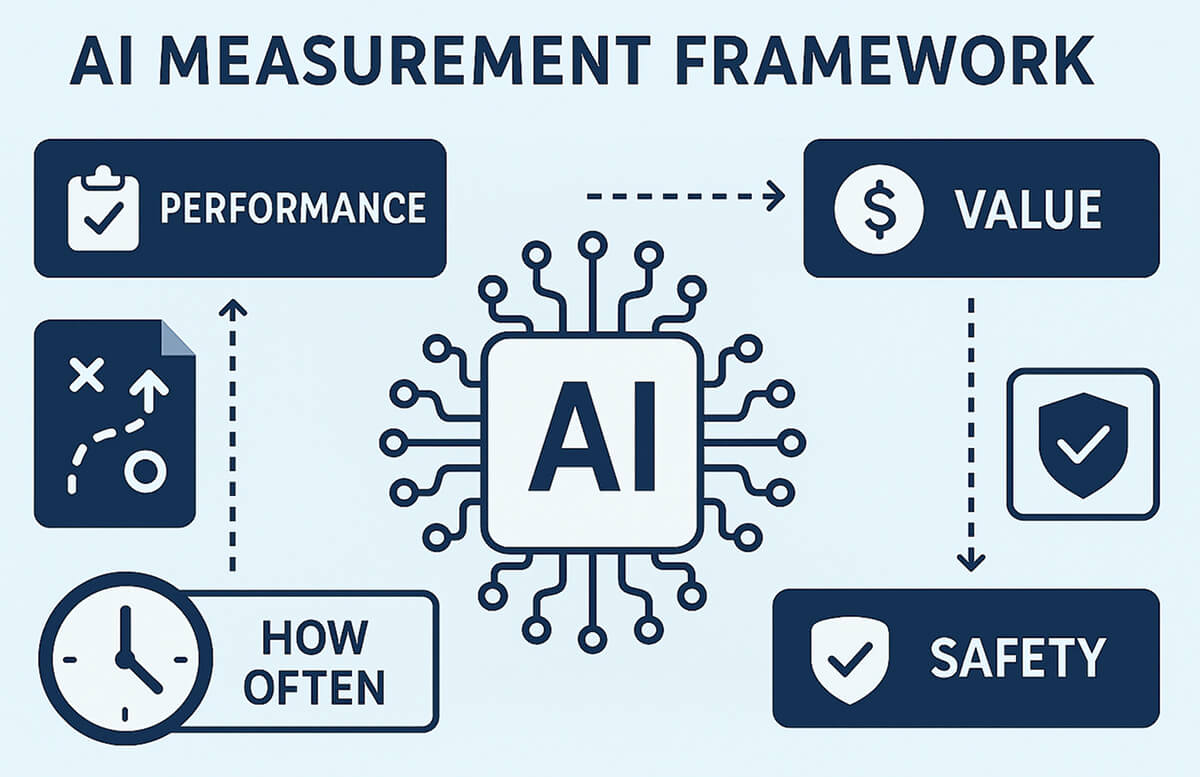

An AI measurement framework provides teams with a structured approach to answering this question. It helps check whether AI tools are creating real value, highlights risks that may be hidden, and ensures improvements are sustainable.

What is an AI Measurement Framework?

An AI measurement framework is a structured approach to evaluating how AI tools or models perform, the value they bring, and their safety. It provides a clear map of what to measure, how often, and why.

Core components

- Metrics: indicators like accuracy, latency, fairness, or cost that show how the system is performing.

- Benchmarks: standard reference points, such as public datasets or industry norms, used for comparison.

- Evaluation methods: experiments, monitoring systems, or controlled rollouts.

Types of metrics

- Performance metrics: accuracy, recall, latency, precision, throughput.

- Fairness metrics: demographic parity, equalized odds, subgroup bias.

- Robustness metrics: resilience against adversarial inputs or noisy data.

- Explainability metrics: clarity on why a model made its decision.

- Efficiency metrics: memory, runtime cost, or scaling ability.
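
To make two of these categories concrete, here is a minimal sketch of one performance metric pair (precision and recall) and one fairness metric (demographic parity difference) written in plain Python. The function names and the toy data are assumptions made for illustration. A toolkit such as Fairlearn offers maintained implementations, and this sketch does not reproduce its API.

```python
from collections import defaultdict


def precision_recall(y_true, y_pred):
    """Return (precision, recall) for binary labels where 1 is the positive class."""
    tp = sum(1 for t, p in zip(y_true, y_pred) if t == 1 and p == 1)
    fp = sum(1 for t, p in zip(y_true, y_pred) if t == 0 and p == 1)
    fn = sum(1 for t, p in zip(y_true, y_pred) if t == 1 and p == 0)
    precision = tp / (tp + fp) if (tp + fp) else 0.0
    recall = tp / (tp + fn) if (tp + fn) else 0.0
    return precision, recall


def demographic_parity_difference(y_pred, groups):
    """Largest gap in positive-prediction rate between any two groups."""
    totals = defaultdict(int)
    positives = defaultdict(int)
    for pred, group in zip(y_pred, groups):
        totals[group] += 1
        positives[group] += pred
    rates = [positives[g] / totals[g] for g in totals]
    return max(rates) - min(rates)


# Toy data, invented for illustration only.
y_true = [1, 0, 1, 1, 0, 0, 1, 0]
y_pred = [1, 0, 0, 1, 1, 0, 1, 1]
groups = ["a", "a", "a", "a", "b", "b", "b", "b"]

precision, recall = precision_recall(y_true, y_pred)
parity_gap = demographic_parity_difference(y_pred, groups)
print(f"precision={precision:.2f} recall={recall:.2f} parity_gap={parity_gap:.2f}")
```

A parity gap of zero means every group receives positive predictions at the same rate. How large a gap is acceptable is a product and policy decision, not something the code can decide.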

Examples in practice

- MLPerf: industry-standard benchmarks for AI performance.

- Fairlearn: Microsoft’s toolkit for checking fairness across groups.

- Explainable AI tools: SHAP or LIME.

- DX framework: measures AI adoption and impact through utilization, impact, and cost.

- LinearB approach: ties AI measurement directly to throughput and quality outcomes.

Best Practices for Using an AI Measurement Framework

1. Define clear objectives

Measurement without direction often creates noise. Before choosing any metrics, define what the organization really wants from AI.

- Identify KPIs early: for example, reducing the rework rate by 15% or shortening the PR merge time.

- Link to business goals: a medical AI may aim for safety and recall, while an e-commerce recommendation system might optimize for click-through.

- Align with compliance: many industries now require explainability or audit trails.

A common mistake is starting with metrics before setting goals. Teams sometimes measure everything, from latency to storage usage, but when asked, “Which of these actually matters for the product?” they struggle to provide an answer.
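
One way to keep goals ahead of metrics is to write each objective down as data before any dashboard exists. The sketch below assumes a hypothetical `Objective` class and an invented baseline rework rate of 20%. Only the 15% reduction target comes from the example above.

```python
from dataclasses import dataclass


@dataclass
class Objective:
    """A goal stated as a relative reduction from a measured baseline."""
    name: str
    baseline: float          # value measured before the AI tool was enabled
    target_reduction: float  # e.g. 0.15 for a 15% reduction

    def target(self):
        # The value the metric must reach (or go below) for the goal to count as met.
        return self.baseline * (1 - self.target_reduction)

    def is_met(self, current):
        return current <= self.target()


# Assumed baseline: 20% of merged changes are reworked within a sprint.
rework = Objective("rework_rate", baseline=0.20, target_reduction=0.15)

print(f"target rework rate: {rework.target():.3f}")
print("met at 0.16:", rework.is_met(0.16))
print("met at 0.19:", rework.is_met(0.19))
```

Stating the target this way makes it obvious which metrics matter: anything that does not feed an objective is a candidate for removal.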

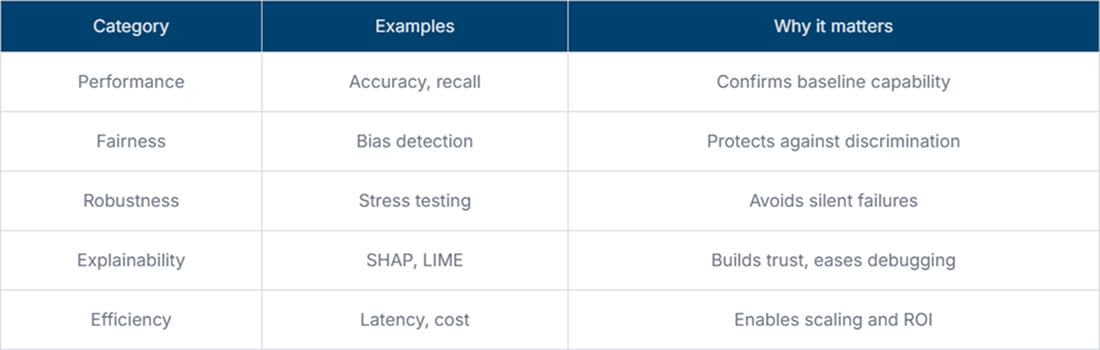

2. Use multi-dimensional metrics

Over-optimizing a single metric, such as accuracy, can backfire. AI that looks perfect in one dimension may fail in another.

- Balance accuracy with fairness: don’t accept a boost in accuracy if it harms minority groups.

- Add interpretability: use SHAP or LIME to expose model reasoning.

- Track robustness: simulate noisy inputs to test resilience.

Some teams measure too many dimensions without weighting them. This dilutes focus. The trick is to balance breadth with relevance: 4-6 strong, well-defined metrics are better than 20 half-baked ones.
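
A weighted scorecard is one possible way to combine a handful of metrics without letting any single one dominate. The sketch below is an assumption about how a team might do this: the `Metric` fields, the weights, and the floors are all invented, and every value is assumed to be normalized so that higher is better.

```python
from dataclasses import dataclass


@dataclass
class Metric:
    name: str
    value: float   # normalized so that 1.0 is best and 0.0 is worst
    weight: float  # relative importance, chosen by the team
    floor: float   # minimum acceptable value for this metric


def scorecard(metrics):
    """Return the weighted average score and the names of metrics below their floor."""
    total_weight = sum(m.weight for m in metrics)
    score = sum(m.value * m.weight for m in metrics) / total_weight
    violations = [m.name for m in metrics if m.value < m.floor]
    return score, violations


# Illustrative numbers only.
metrics = [
    Metric("accuracy", value=0.90, weight=0.4, floor=0.85),
    Metric("fairness", value=0.70, weight=0.3, floor=0.80),
    Metric("robustness", value=0.80, weight=0.2, floor=0.70),
    Metric("interpretability", value=0.60, weight=0.1, floor=0.50),
]

score, violations = scorecard(metrics)
print(f"score={score:.2f} violations={violations}")
```

The per-metric floor matters as much as the weighted score: a strong overall number should not hide a fairness result that is below what the team agreed to accept.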

3. Ensure transparency and documentation

Engineers must document not just outcomes but also assumptions, data sources, and limitations. Without this, reproducibility decreases, and teams lose trust.

- Data logs: track how datasets are collected.

- Config files: store parameter settings for future replay.

- Caveats: openly note what the system cannot handle.

Transparency is also important for regulatory and customer trust. Try explaining to a customer why their loan was turned down by an AI system. If there’s no documentation, you can’t prove whether the decision was fair or if bias slipped in.
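
The three items above (data logs, config files, caveats) can live in a single record that is saved next to every evaluation run. The following is a minimal sketch using only the standard library. The `EvaluationRecord` fields, the model name, and the sample rows are hypothetical.

```python
import hashlib
import json
from dataclasses import asdict, dataclass, field


@dataclass
class EvaluationRecord:
    model_name: str
    dataset_source: str   # where the data came from and how it was collected
    dataset_sha256: str   # fingerprint of the exact rows that were evaluated
    parameters: dict      # settings needed to replay the run
    metrics: dict
    caveats: list = field(default_factory=list)


def fingerprint(rows):
    """Hash the dataset contents so a later run can confirm it used the same data."""
    digest = hashlib.sha256()
    for row in rows:
        digest.update(json.dumps(row, sort_keys=True).encode("utf-8"))
    return digest.hexdigest()


# Invented sample rows standing in for a real evaluation set.
rows = [
    {"applicant_id": 1, "income": 52000, "approved": True},
    {"applicant_id": 2, "income": 31000, "approved": False},
]

record = EvaluationRecord(
    model_name="loan-scoring-demo",
    dataset_source="internal export of loan applications (hypothetical)",
    dataset_sha256=fingerprint(rows),
    parameters={"threshold": 0.5, "random_seed": 7},
    metrics={"recall": 0.81},
    caveats=["Not validated for applicants with no credit history."],
)

serialized = json.dumps(asdict(record), indent=2, sort_keys=True)
print(serialized)
```

Storing the output in version control alongside the model gives reviewers and auditors one place to check what was measured, on which data, and with which known limits.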

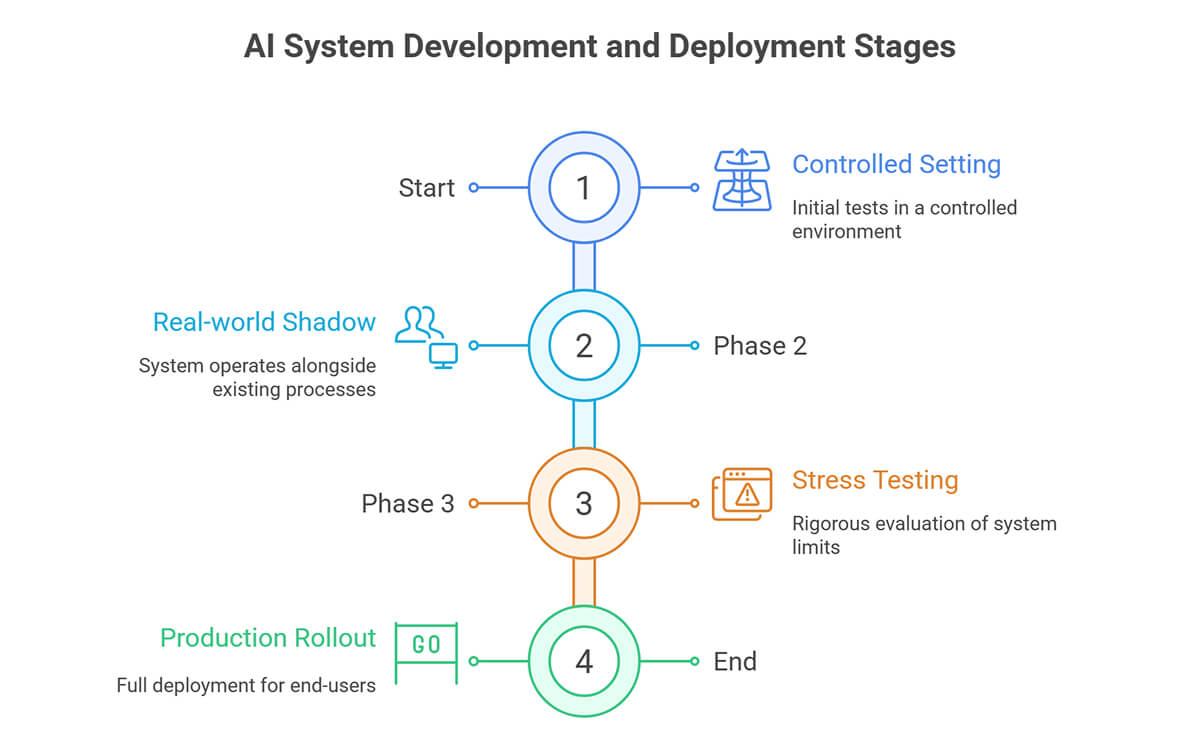

4. Test across diverse scenarios

Models tested only in clean lab settings rarely survive real-world use.

- Real-world simulation: shadow deployments or canary releases.

- Edge cases: rare user behaviors or unexpected data formats.

- Stress testing: simulate sudden load or corrupted inputs.

Extra tip: Some teams run “red-team” tests, intentionally feeding malicious or nonsensical prompts to see how the AI breaks. This type of testing has uncovered vulnerabilities in large models that traditional QA never detected.
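
A simple version of stress testing with corrupted inputs is to add increasing amounts of random noise and watch how accuracy changes. The sketch below uses a stand-in `toy_model` and synthetic data, both invented for illustration. A real test would swap in the production model and a held-out dataset.

```python
import random


def toy_model(features):
    """Stand-in classifier: predicts 1 when the mean feature value exceeds 0.5."""
    return 1 if sum(features) / len(features) > 0.5 else 0


def accuracy(model, inputs, labels):
    correct = sum(1 for x, y in zip(inputs, labels) if model(x) == y)
    return correct / len(labels)


def add_noise(inputs, scale, rng):
    """Return a copy of the inputs with Gaussian noise of the given scale added."""
    return [[v + rng.gauss(0, scale) for v in x] for x in inputs]


def robustness_report(model, inputs, labels, scales, seed=0):
    """Map each noise scale to the accuracy measured on the noisy inputs."""
    rng = random.Random(seed)
    report = {}
    for scale in scales:
        noisy = add_noise(inputs, scale, rng)
        report[scale] = accuracy(model, noisy, labels)
    return report


# Synthetic data: labels come from the model itself, so clean accuracy is 1.0.
data_rng = random.Random(42)
inputs = [[data_rng.random() for _ in range(4)] for _ in range(200)]
labels = [toy_model(x) for x in inputs]

report = robustness_report(toy_model, inputs, labels, scales=[0.0, 0.1, 0.5])
for scale, acc in report.items():
    print(f"noise scale {scale}: accuracy {acc:.2f}")
```

The useful signal is the shape of the curve: a model whose accuracy collapses at small noise levels deserves attention before it meets real traffic.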

5. Monitor continuously

AI is not static. Data drift and model decay are common. Continuous monitoring is just as critical as initial testing.

- Build pipelines: integrate checks into CI/CD.

- Alerts: trigger notifications on metric violations.

- Feedback loops: retrain models automatically with new data.
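
As one illustration of a check that could run inside a pipeline, the sketch below computes the population stability index (PSI) between a baseline sample and a recent sample of one feature, then raises an alert flag above a threshold. PSI is a technique chosen here for the example, not one named by any particular framework, and the 0.2 threshold is a common rule of thumb that each team should tune.

```python
import math


def psi(expected, actual, bins=10):
    """Population stability index between a baseline sample and a recent sample."""
    lo, hi = min(expected), max(expected)
    width = (hi - lo) / bins or 1.0

    def proportions(values):
        counts = [0] * bins
        for v in values:
            idx = int((v - lo) / width)
            idx = min(max(idx, 0), bins - 1)  # clamp values outside the baseline range
            counts[idx] += 1
        # A small floor avoids log(0) when a bin is empty.
        return [max(c / len(values), 1e-6) for c in counts]

    e, a = proportions(expected), proportions(actual)
    return sum((ai - ei) * math.log(ai / ei) for ei, ai in zip(e, a))


def check_drift(expected, actual, threshold=0.2):
    """Return the PSI score and whether it crosses the alert threshold."""
    score = psi(expected, actual)
    return {"psi": score, "alert": score > threshold}


# Synthetic samples: the recent data is shifted toward higher values.
baseline = [i / 100 for i in range(100)]
shifted = [0.5 + i / 200 for i in range(100)]

print(check_drift(baseline, baseline))
print(check_drift(baseline, shifted))
```

In a CI/CD job, the `alert` flag could fail the build or post a notification. Whether it should also trigger automatic retraining depends on how much human review the team wants in the loop.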

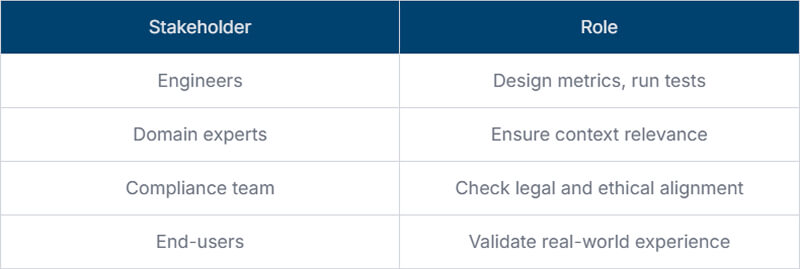

6. Engage stakeholders

Effective measurement is not only for engineers. A diverse set of voices ensures balanced outcomes.

- Technical teams: implement metrics.

- Domain experts: validate correctness in context.

- Compliance officers: ensure regulations are met.

- End-users: provide ground truth on usability and fairness.

7. Update frameworks regularly

AI risks and tools evolve quickly. A framework designed two years ago may miss new challenges.

- Track emerging risks: like LLM hallucinations or prompt injection.

- Adapt to regulations and standards: such as the EU AI Act and the NIST AI RMF.

- Evolve benchmarks: replace outdated datasets with newer, more representative ones.

Teams sometimes stick with old benchmarks simply because they’re familiar. The problem is that outdated benchmarks can create an overly positive picture of a model, giving a false sense of confidence.

Implementation Lessons from Industry

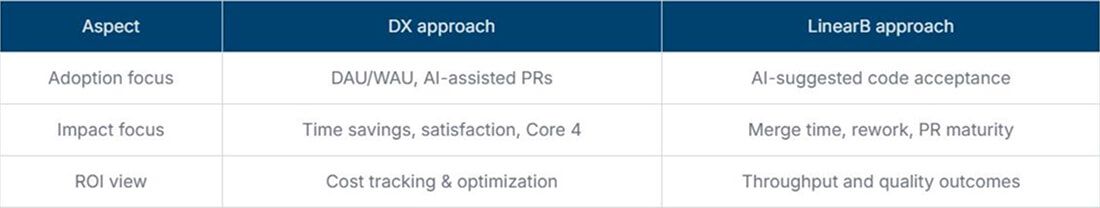

Many organizations have already built working measurement frameworks. Two leading approaches stand out: DX and LinearB.

- DX focuses on three dimensions: utilization, impact, and cost.

- LinearB emphasizes throughput and quality.

Practical steps for teams

- Record a “before” snapshot: log key KPIs (cycle time, defect rate, etc.) before any AI tool is enabled.

- Mark AI-assisted commits: add a tag (e.g., AI-Generated: true) to every Git commit or pull request touched by AI.

- Mix hard data with feedback: pair telemetry (speed, quality, cost) with short developer surveys.

- Review in normal ceremonies: surface AI metrics during retros, QBRs, and planning sessions.

- Grow in stages: start with adoption stats, then correlate to output, and only later build full ROI models.
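
The tagging and comparison steps above can be sketched in a few lines. The example assumes the `AI-Generated: true` tag is written as its own line in the commit message, and that each change already has a cycle time attached. The `changes` records and their field names are hypothetical, and a real pipeline would read them from the Git host or an analytics tool.

```python
from statistics import median


def is_ai_assisted(commit_message, trailer="AI-Generated"):
    """Scan every line of the message for a tag such as 'AI-Generated: true'."""
    for line in commit_message.strip().splitlines():
        key, sep, value = line.partition(":")
        if sep and key.strip().lower() == trailer.lower():
            return value.strip().lower() == "true"
    return False


def compare_cycle_time(changes):
    """Return the median cycle time in hours for AI-assisted and other changes."""
    groups = {"ai_assisted": [], "other": []}
    for change in changes:
        key = "ai_assisted" if is_ai_assisted(change["message"]) else "other"
        groups[key].append(change["cycle_time_hours"])
    return {k: (median(v) if v else None) for k, v in groups.items()}


# Invented sample records for illustration.
changes = [
    {"message": "Add parser\n\nAI-Generated: true", "cycle_time_hours": 10},
    {"message": "Refactor cache\n\nAI-Generated: true", "cycle_time_hours": 14},
    {"message": "Fix flaky test: retry network call", "cycle_time_hours": 20},
    {"message": "Update docs\n\nAI-Generated: false", "cycle_time_hours": 30},
]

print(compare_cycle_time(changes))
```

A difference between the two medians is a starting point for a conversation, not proof of causation: AI-assisted changes may simply be smaller or lower risk, which is why the "before" snapshot and developer surveys still matter.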

Common Challenges in Building Measurement Frameworks

- Data drift: changing inputs can quietly degrade model accuracy.

- Tool sprawl: too many AI tools create scattered, hard-to-compare metrics.

- Cultural pushback: some engineers view measurement as micromanagement.

- Hidden overhead: logging and storage costs rise if the framework isn’t kept lean.

Overcoming these requires both technical and organizational discipline.

Future Outlook

AI measurement will not stop at simple metrics. Next-generation frameworks will likely include:

- Predictive monitoring: spotting issues before they occur.

- Integration with DevOps: AI quality metrics inside CI/CD pipelines.

- Cross-tool attribution: unified visibility across IDEs, CLI agents, and autonomous systems.

- Ethical scoring: frameworks that not only measure technical performance but also track trustworthiness and societal impact.

- Self-healing models: systems that detect when they’re drifting and trigger retraining automatically.

Just as DevOps pipelines became standard for software delivery, these frameworks may become a baseline expectation for any engineering team deploying AI at scale.

FAQ

What metrics should an AI measurement framework track beyond accuracy?

It should track fairness, robustness, interpretability, efficiency, and business impact. Accuracy alone rarely reflects overall reliability.

How often should organizations review or update their AI measurement framework?

Review the framework quarterly to catch changing trends and ensure metrics remain aligned with business goals. Run an ad-hoc review sooner if a new regulation, major risk, or new AI tool appears. Do a deeper, full-scale assessment once a year to refresh benchmarks and governance.

Can a measurement framework be applied across various AI use cases, such as performance and data quality?

Yes. A single framework can cover multiple AI use cases, such as model performance, data quality, fairness, and cost. Because the structure (goals → metrics → monitor → feedback) stays the same, you simply plug in different metric sets: accuracy and latency for performance; completeness and freshness for data quality. Using a single framework avoids tool sprawl, maintains consistent dashboards, and enables teams to see trade-offs in one place.
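
The idea of one structure with interchangeable metric sets can be sketched as a single `evaluate` function that accepts any dictionary of metric functions. All names, rows, and thresholds below are assumptions for illustration, and only higher-is-better metrics are used to keep the threshold check simple.

```python
def evaluate(metric_set, observations, thresholds):
    """Compute each metric, then list the ones that fall below their threshold."""
    results = {name: fn(observations) for name, fn in metric_set.items()}
    failing = sorted(n for n, v in results.items() if v < thresholds[n])
    return results, failing


def accuracy(rows):
    """Share of rows where the prediction matches the actual label."""
    return sum(1 for r in rows if r["predicted"] == r["actual"]) / len(rows)


def completeness(rows):
    """Share of rows in which no field is missing."""
    filled = sum(1 for r in rows if all(v is not None for v in r.values()))
    return filled / len(rows)


# Invented observations for two different use cases.
performance_rows = [
    {"predicted": 1, "actual": 1},
    {"predicted": 0, "actual": 1},
    {"predicted": 1, "actual": 1},
    {"predicted": 0, "actual": 0},
]
data_quality_rows = [
    {"id": 1, "email": "a@example.com"},
    {"id": 2, "email": None},
]

perf_results, perf_failing = evaluate(
    {"accuracy": accuracy}, performance_rows, {"accuracy": 0.8}
)
dq_results, dq_failing = evaluate(
    {"completeness": completeness}, data_quality_rows, {"completeness": 0.4}
)
print(perf_results, perf_failing)
print(dq_results, dq_failing)
```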

What are common pitfalls to avoid?

- Over-optimizing one metric

- Ignoring edge cases

- Forgetting documentation

- Excluding stakeholders

How do you balance automation versus human oversight?

Automated monitoring handles routine checks. Humans step in for complex cases, ethical trade-offs, or unusual anomalies.

Does measurement slow down engineering?

If done poorly, yes. But frameworks designed with automation in mind actually save time by catching issues earlier and preventing costly rework.

What role do regulators play in measurement?

Regulators set the rules for AI fairness, safety, and explainability. A measurement framework provides the audit trails, metrics, logs, and documentation that demonstrate your models comply with those rules. This helps avoid fines and deployment delays.

What tools can engineers use to start quickly?

Popular options include MLflow for experiment tracking, Evidently AI for monitoring, Fairlearn for fairness metrics, and open benchmarks like MLPerf.

Conclusion

The role of a measurement framework in AI engineering is straightforward but critical. It sets the foundation for building systems that are reliable, explainable, and ethical.

Good practice makes a difference. Clear objectives, a mix of metrics, proper documentation, testing in different scenarios, ongoing monitoring, stakeholder input, and regular updates reduce risk and bring out the best in AI.

The stakes are high. AI decisions are no longer abstract; they affect products, services, and people. That is why engineers at every level need to view measurement frameworks not as add-ons, but as a core part of engineering practice.