Copilot adoption usually starts the way adoption of any other tool does. A few engineers try it, then it rolls out to the entire team, and within weeks, you hear, “Development got faster than ever before.” That’s a reasonable first signal, but it’s not a measurement strategy.

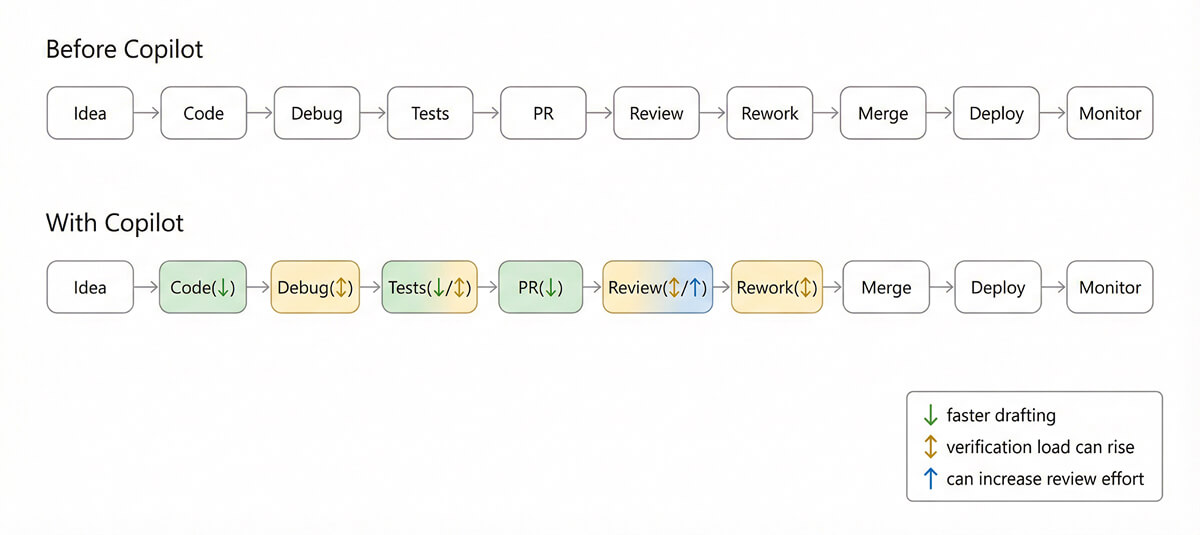

The tricky part is that AI-assisted development can boost speed in one place while quietly adding drag elsewhere. Faster scaffolding might be offset by more review time: it’s not uncommon these days to see AI-assisted PRs that touch many files at once. More code shipped may come with more rework. Or the opposite can happen: you ship the same amount, but quality increases because engineers spend more time improving tests and observability.

This is why teams should measure Copilot’s impact as a system change, not a personal preference. You’re not just asking, “Do developers like it?” You’re asking what the AI impact on software development looks like in your environment across delivery flow, quality, and outcomes. GitHub has also started adding first-party visibility here, including a Copilot usage metrics dashboard and API for enterprises (currently in public preview).

Redefining productivity in the AI era

If your definition of productivity is “more lines of code,” AI will fool you immediately. Copilot can generate syntactically valid code quickly, but raw output is a weak proxy for value.

A better definition is: How effectively a team turns intent into reliable change. That includes three layers:

- Flow: How smoothly work moves from “planned” to “running in production.”

- Quality: Whether those changes behave correctly, stay maintainable, and don’t raise operational risk.

- Outcomes: Whether the work actually advances product goals (customer value, revenue, cost reduction, compliance, etc.).

This matters because Copilot can improve flow by removing friction (e.g., boilerplate and repetitive refactorings), but it can also increase the review and verification load if suggestions are accepted too quickly. Research shows meaningful speedups for certain tasks, but in real-world settings, progress may slow down if review and correction overhead dominate.

That’s why the goal isn’t to prove that Copilot works in general, but to measure AI coding tool productivity in your environment as a system outcome: how work moves from intent to production, how stable it stays after release, and whether quality and operational load improve or degrade over time.

Where Copilot changes the development workflow

Copilot’s effects usually show up in a few predictable places:

- Coding and scaffolding: Generating functions, tests, adapters, data models, regex, and quick refactors.

- Context switching: Fewer trips to docs/Stack Overflow for routine patterns, more time staying “in flow.”

- PR preparation: Clearer summaries, faster cleanup, more consistent formatting.

- Review and safety work: More time verifying logic, edge cases, security, and consistency with system architecture.

- Learning curve (especially for juniors): Quicker ramps into unfamiliar libraries when guardrails are in place.

The point of measuring is to find which of these areas changed for your teams, and whether the overall system improved.

Metrics that capture Copilot’s true impact

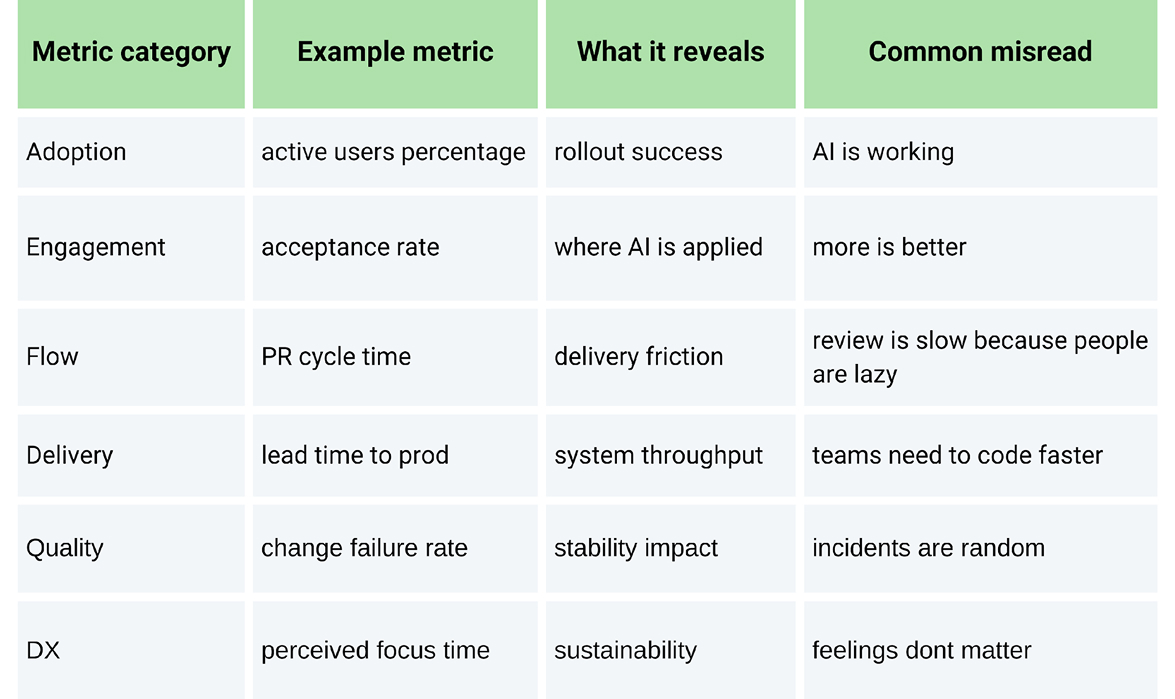

Think in four buckets: adoption, delivery, quality, and developer experience. The best programs track all four, because any one bucket can lie on its own.

1. Adoption (useful, but not sufficient)

These tell you whether Copilot is being used, where it’s used, and how consistently. GitHub’s Copilot metrics endpoints (and the enterprise dashboard) report usage trends, including active and engaged users and breakdowns by editors, languages, and features.

Helpful engagement signals:

- Active users vs. engaged users (adoption vs. meaningful use)

- Suggestion acceptance and “lines accepted” (directional, not a KPI)

- Copilot Chat usage and insertion/copy events (helps detect “ask vs. apply” patterns)

Watch out: More acceptance is not automatically “better.” In some teams, higher acceptance can correlate with lower review discipline.
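To keep acceptance in perspective, it helps to treat it as a ratio per team rather than a raw count. The following is a minimal sketch, assuming you already have suggestion and acceptance counts per team; the `TeamUsage` fields and the numbers are hypothetical and do not mirror the GitHub API schema.

```python
from dataclasses import dataclass


@dataclass
class TeamUsage:
    """Hypothetical per-team usage counts for one reporting period."""
    team: str
    suggestions_shown: int
    suggestions_accepted: int


def acceptance_rate(usage: TeamUsage) -> float:
    """Return accepted / shown, or 0.0 when nothing was shown."""
    if usage.suggestions_shown == 0:
        return 0.0
    return usage.suggestions_accepted / usage.suggestions_shown


# Made-up sample data for illustration only.
teams = [
    TeamUsage("payments", suggestions_shown=1200, suggestions_accepted=300),
    TeamUsage("platform", suggestions_shown=800, suggestions_accepted=480),
]

for t in teams:
    print(f"{t.team}: {acceptance_rate(t):.0%}")
```

A high rate on its own says nothing about whether the accepted code was reviewed carefully, so read it next to review and quality signals rather than as a target.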

2. Delivery and flow metrics (the real heartbeat)

This is where your “system” metrics live: what executives care about and what engineers feel day to day. Use proven delivery performance metrics as anchors. DORA’s metrics are widely used because they correlate with broader organizational outcomes, and DORA has evolved its guidance over time (including a shift toward a five-metric model).

Core flow metrics to track:

- Lead time for changes (commit → production).

- Deployment frequency.

- Change failure rate.

- Failed deployment recovery time (formerly time to restore service).
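As a rough illustration, three of these metrics can be derived from a simple list of deployment records. This is a sketch under stated assumptions: the `Deployment` fields are hypothetical and would need to be mapped to whatever your CI/CD system exports, and recovery time is left out for brevity.

```python
from dataclasses import dataclass
from datetime import datetime
from statistics import median


@dataclass
class Deployment:
    """Hypothetical deployment record; map the fields to your CI/CD export."""
    committed_at: datetime
    deployed_at: datetime
    failed: bool  # True if the deployment needed a rollback or hotfix


def dora_summary(deploys: list[Deployment], period_days: int) -> dict[str, float]:
    """Summarize lead time, deployment frequency, and change failure rate."""
    if not deploys:
        raise ValueError("need at least one deployment")
    lead_hours = [
        (d.deployed_at - d.committed_at).total_seconds() / 3600 for d in deploys
    ]
    return {
        "median_lead_time_hours": median(lead_hours),
        "deploys_per_week": len(deploys) / (period_days / 7),
        "change_failure_rate": sum(d.failed for d in deploys) / len(deploys),
    }


# Made-up sample: four deployments over a 14-day window.
deploys = [
    Deployment(datetime(2024, 1, 1, 9), datetime(2024, 1, 1, 15), False),
    Deployment(datetime(2024, 1, 3, 10), datetime(2024, 1, 4, 10), True),
    Deployment(datetime(2024, 1, 8, 8), datetime(2024, 1, 8, 20), False),
    Deployment(datetime(2024, 1, 10, 9), datetime(2024, 1, 10, 11), False),
]
summary = dora_summary(deploys, period_days=14)
print(summary)
```

Running the same summary over a pre-rollout window and a post-rollout window gives you a like-for-like comparison.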

Then add team-level flow detail:

- PR cycle time (open → merge).

- Review pickup time (PR opened → first review).

- Rework rate (new commits after review requested).

- Work-in-progress (WIP) and queue time (where work waits).

This is where developer productivity metrics stop being vanity metrics and become decision-grade. They show whether Copilot improves throughput, reduces wait time, or simply shifts effort from coding to review.
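The team-level flow metrics above can be computed from PR timestamps. The sketch below assumes a hypothetical export with `opened`, `first_review`, and `merged` timestamps plus a count of commits pushed after review was requested. It treats rework rate as the share of PRs with at least one such commit, which is one possible definition among several.

```python
from datetime import datetime
from statistics import median

# Hypothetical PR export; field names are assumptions, not a GitHub schema.
prs = [
    {"opened": "2024-03-04T09:00:00", "first_review": "2024-03-04T13:00:00",
     "merged": "2024-03-05T09:00:00", "commits_after_review": 0},
    {"opened": "2024-03-05T10:00:00", "first_review": "2024-03-05T12:00:00",
     "merged": "2024-03-05T18:00:00", "commits_after_review": 2},
    {"opened": "2024-03-06T09:00:00", "first_review": "2024-03-07T09:00:00",
     "merged": "2024-03-08T09:00:00", "commits_after_review": 1},
]


def hours_between(start: str, end: str) -> float:
    """Elapsed hours between two ISO 8601 timestamps."""
    delta = datetime.fromisoformat(end) - datetime.fromisoformat(start)
    return delta.total_seconds() / 3600


def flow_metrics(pull_requests: list[dict]) -> dict[str, float]:
    """Median cycle and pickup time, plus share of PRs reworked after review."""
    cycle = [hours_between(p["opened"], p["merged"]) for p in pull_requests]
    pickup = [hours_between(p["opened"], p["first_review"]) for p in pull_requests]
    reworked = sum(1 for p in pull_requests if p["commits_after_review"] > 0)
    return {
        "median_cycle_hours": median(cycle),
        "median_pickup_hours": median(pickup),
        "rework_rate": reworked / len(pull_requests),
    }


flow = flow_metrics(prs)
print(flow)
```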

3. Quality metrics (guardrails against “fast wrong”)

Quality metrics should primarily answer the following two questions:

- Are we creating more defects?

- Are we creating more future work?

To answer them, track metrics such as defects escaped to production (by severity), incident frequency tied to recent changes, post-merge revert and hotfix rates, and test coverage movement (if meaningful in your context). Also track security findings and dependency risks (especially if Copilot accelerates library adoption).

4. Developer experience (what humans feel, without trusting vibes alone)

Developer experience matters because “felt productivity” affects morale and retention, but it needs structure. A lightweight pulse check (monthly or quarterly) can ask:

- Can you get into deep work without interruption?

- Does code review feel manageable?

- Do you trust the codebase’s direction and standards?

- Does Copilot help you learn faster and reduce grunt work?

GitHub and others have published research linking Copilot to perceived productivity and developer happiness, but again, your environment is what matters.

Designing a measurement framework (that doesn’t collapse in weeks)

A measurement framework should support a wide range of teams with different repositories, stacks, seasonal work, and shifting priorities.

Step 1: Establish a baseline (without waiting months)

Pick a baseline window that matches your delivery rhythm:

- 4-8 weeks pre-rollout for fast-moving teams.

- 8-12 weeks for slower-release trains.

Capture baseline medians (not just averages) for flow and quality metrics. You want to compare distributions and not just a single number.
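To see why a single number can mislead, it can help to summarize each window with a median and a tail percentile. The following sketch uses made-up lead-time samples; `statistics.quantiles` is from the Python standard library, and the choice of the 90th percentile is an assumption rather than a recommendation.

```python
from statistics import median, quantiles


def summarize(hours: list[float]) -> dict[str, float]:
    """Median and 90th percentile for a list of lead times (in hours)."""
    # quantiles(..., n=10) returns nine cut points; the last one is p90.
    return {"median": median(hours), "p90": quantiles(hours, n=10)[-1]}


# Made-up lead times (hours) before and after rollout.
baseline_hours = [20, 22, 25, 26, 30, 31, 35, 40, 48, 90]
post_hours = [12, 14, 15, 18, 20, 22, 24, 30, 60, 120]

base = summarize(baseline_hours)
post = summarize(post_hours)
print("baseline:", base)
print("post-rollout:", post)
```

In this invented sample, the median drops while the 90th percentile rises: the typical change ships faster, but the slowest changes got slower. That is the kind of shift a single average would hide.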

Step 2: Segment before you compare

Avoid treating Copilot vs. no Copilot as a single big bucket for evaluation. You can segment by:

- Team (domain context).

- Repository maturity (greenfield vs. legacy).

- Work type (new features vs. bugfixing vs. refactoring).

- Experience level (junior vs. senior).

- Programming languages and toolchains.
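Segmenting can be as simple as grouping records by one of the dimensions above before comparing periods. This is a minimal sketch with hypothetical records and field names (`work_type`, `period`, `cycle_hours`); a real analysis would use more data per segment.

```python
from collections import defaultdict
from statistics import median

# Hypothetical PR records tagged with work type and measurement period.
records = [
    {"work_type": "feature", "period": "baseline", "cycle_hours": 30},
    {"work_type": "feature", "period": "baseline", "cycle_hours": 34},
    {"work_type": "feature", "period": "copilot", "cycle_hours": 20},
    {"work_type": "feature", "period": "copilot", "cycle_hours": 24},
    {"work_type": "bugfix", "period": "baseline", "cycle_hours": 10},
    {"work_type": "bugfix", "period": "baseline", "cycle_hours": 12},
    {"work_type": "bugfix", "period": "copilot", "cycle_hours": 12},
    {"work_type": "bugfix", "period": "copilot", "cycle_hours": 14},
]


def median_by_segment(rows: list[dict], segment_key: str) -> dict[tuple, float]:
    """Median cycle time keyed by (segment value, period)."""
    groups: dict[tuple, list[float]] = defaultdict(list)
    for row in rows:
        groups[(row[segment_key], row["period"])].append(row["cycle_hours"])
    return {key: median(values) for key, values in groups.items()}


by_work_type = median_by_segment(records, "work_type")
for (segment, period), value in sorted(by_work_type.items()):
    print(f"{segment:8s} {period:9s} median cycle: {value} h")
```

In this invented data, feature work speeds up while bugfixing slows down, a difference that a single pooled comparison would average away.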

Step 3: Combine telemetry, delivery data, and human signals

You’ll typically pull from:

- GitHub Copilot usage metrics.

- Git hosting: PRs, merges, and review timelines.

- Issue trackers: cycle time from “in progress” → “done”.

- CI/CD: build times and failed deployments.

- Incident tooling: MTTR and on-call load.

Step 4: Define “guardrails” up front

Decide what must not get worse while chasing speed:

- Change failure rate.

- P0/P1 incidents.

- Security findings.

- Review overload (e.g., sustained review pickup time regression).

If a “productivity gain” violates guardrails, it’s not a gain.
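One way to make guardrails enforceable is to encode them as explicit tolerances against the baseline. The sketch below assumes every guardrail metric is "lower is better" and that a relative tolerance is acceptable; the metric names, values, and the 10% threshold are all hypothetical.

```python
def check_guardrails(
    baseline: dict[str, float],
    current: dict[str, float],
    tolerances: dict[str, float],
) -> list[str]:
    """Return the guardrail metrics that regressed beyond their tolerance.

    Each tolerance is the allowed relative increase over baseline
    (0.10 means "up to 10% worse is acceptable").
    """
    violations = []
    for metric, allowed_increase in tolerances.items():
        limit = baseline[metric] * (1 + allowed_increase)
        if current[metric] > limit:
            violations.append(metric)
    return violations


# Made-up numbers for illustration only.
baseline = {"change_failure_rate": 0.10, "p0_p1_incidents": 2,
            "security_findings": 5, "review_pickup_hours": 6.0}
current = {"change_failure_rate": 0.10, "p0_p1_incidents": 2,
           "security_findings": 7, "review_pickup_hours": 9.0}
tolerances = {metric: 0.10 for metric in baseline}

violated = check_guardrails(baseline, current, tolerances)
print("guardrail violations:", violated)
```

Agreeing on the tolerances before rollout keeps the later discussion about whether a speedup counts from turning into a negotiation.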

Example: Pull Copilot org metrics

    # Example: GitHub REST API call (org-level Copilot metrics)
    # Docs: GitHub "Copilot metrics" endpoints (preview availability varies by plan/policy).
    # Requires an access token with appropriate permissions.
    curl -L \
      -H "Accept: application/vnd.github+json" \
      -H "Authorization: Bearer $GITHUB_TOKEN" \
      -H "X-GitHub-Api-Version: 2022-11-28" \
      https://api.github.com/orgs/YOUR_ORG/copilot/metrics

The response can include breakdowns for IDE completions and chat usage, which you can aggregate into weekly trends and correlate with delivery and quality outcomes.
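Aggregating the daily entries into weekly trends might look like the sketch below. It assumes the response is a JSON array of daily objects with `date`, `total_active_users`, and `total_engaged_users` fields; verify the field names against the current API documentation for your plan. The payload shown is made up.

```python
import json
from collections import defaultdict
from datetime import date

# Made-up sample payload; the field names are assumptions to verify.
sample_response = json.loads("""
[
  {"date": "2024-06-24", "total_active_users": 40, "total_engaged_users": 20},
  {"date": "2024-06-25", "total_active_users": 50, "total_engaged_users": 30},
  {"date": "2024-07-01", "total_active_users": 60, "total_engaged_users": 45}
]
""")


def weekly_engagement(days: list[dict]) -> dict[str, dict[str, float]]:
    """Group daily entries by ISO week and summarize active/engaged users."""
    weeks: dict[str, list[dict]] = defaultdict(list)
    for day in days:
        iso = date.fromisoformat(day["date"]).isocalendar()
        weeks[f"{iso[0]}-W{iso[1]:02d}"].append(day)

    summary = {}
    for week, entries in sorted(weeks.items()):
        active = sum(e["total_active_users"] for e in entries)
        engaged = sum(e["total_engaged_users"] for e in entries)
        summary[week] = {
            "avg_active_users": active / len(entries),
            # Share of active user-days that were also engaged user-days.
            "engaged_share": engaged / active if active else 0.0,
        }
    return summary


weekly = weekly_engagement(sample_response)
for week, stats in weekly.items():
    print(week, stats)
```

The weekly keys can then be joined against delivery and quality metrics computed over the same weeks.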

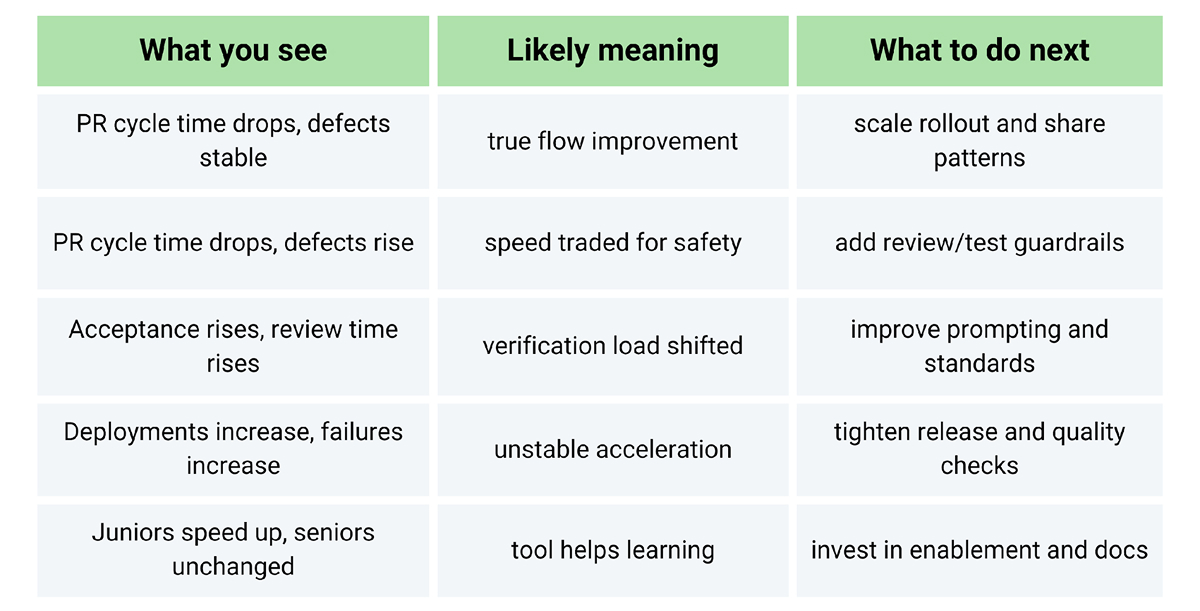

Interpreting productivity gains (so you don’t reward the wrong behavior)

Once you have metrics in place, the next challenge is interpretation. There are some important practices you should follow.

Treat speed as a hypothesis, not a conclusion

Credible studies show large improvements in task speed in controlled settings (for example, one controlled experiment reported faster completion of a specific task with Copilot access).

But real-world experiments have also shown the opposite for experienced developers working on complex, familiar repositories, where time shifts into prompting, waiting, reviewing, and correcting.

So instead of declaring “Copilot improves productivity,” evaluate it like this:

- Where did time move?

- Did lead time improve without raising the failure rate?

- Did review load spike?

- Did the incident load change?

- Did juniors improve faster without creating maintainability debt?

Conclusion

Copilot isn’t just “a faster keyboard.” It’s a workflow change that can improve drafting speed, reduce context switching, and help developers stay in motion, but only if the people using it and the process around them absorb the change responsibly.

If you measure only usage, you’ll mistake adoption for impact. If you measure only speed, you’ll miss quality debt. The teams that win treat Copilot like any other engineering investment: set baselines, track flow and stability, segment results, and enforce guardrails. Done well, you end up with a clear, defensible story about what changed and why, so you can scale what works and fix what doesn’t, instead of arguing about vibes.

FAQ

1. Can Copilot improve productivity without improving code quality?

Yes. It can speed up drafting and reduce time spent staring at a blank page, but it may also increase bugs or architectural inconsistencies if teams accept suggestions without checking them. The solution isn’t to restrict usage; it’s to put guardrails in place: tests, review standards, and clear ownership of system design.

2. What metrics best capture Copilot’s real impact on teams?

Use a balanced set of metrics: Copilot adoption and engagement metrics, delivery flow metrics (lead time and PR cycle time), quality metrics (change failure rate and incidents), and developer experience (surveys and focus time). GitHub’s Copilot usage metrics dashboard and API cover adoption, but to provide evidence of impact, you still need delivery and quality metrics.

3. How long does it take to see measurable productivity gains from Copilot?

Changes can show up in engagement metrics within weeks, but delivery and quality metrics usually take 4 to 8 weeks. This is because the work needs time to move through a full delivery cycle: coding, review, deployment, and operations.

4. Does Copilot benefit senior and junior engineers equally?

Not always. Juniors often benefit most from the support with scaffolding and learning, while seniors may see less value on context-heavy, deep work. Real-world outcomes vary enough that segmentation by team and work type is necessary.

5. How should engineering leaders report Copilot ROI to executives?

Lead with business-relevant outcomes, backed by delivery and quality metrics:

- Faster lead time (with stable failure rate)

- More frequent, safer deployments

- Reduced incident load or recovery time

- Improved throughput on priority work

Use Copilot adoption/usage metrics as supporting context, not as the headline.