Modern businesses capture large volumes of data from many different sources and rely on a range of business intelligence tools to make sense of it. Collecting the data is fairly straightforward; transforming it into meaningful information is the hard part.

Data platform engineering addresses this problem. It is the practice of designing and managing the systems that collect, store, and organize different kinds of data. These systems let stakeholders access data when they need it. Whatever the data is used for, platform engineers make sure it is accurate, accessible, and secure.

Core Responsibilities of Data Platform Engineers

A data platform engineer wears many hats, often blending the responsibilities of infrastructure, software, and data engineering into one role. Here’s a breakdown of their day-to-day work:

- Designing data architecture: They decide how data moves through the system, from collection to storage and analysis. This includes choosing tools, defining schemas, and setting the rules (governance) that keep everything consistent.

- Building and maintaining pipelines: Engineers create batch or real-time pipelines that bring data in from various sources. These pipelines handle the transformation and ensure the data ends up in the right place, in the right format.

- Security and compliance: Protecting sensitive information and meeting legal standards (like GDPR) is a non-negotiable part of the job. This involves access controls, encryption, and regular audits.

- Storage optimization: Storing data is expensive and complex. Platform engineers pick the right mix of technologies and optimize how the data is indexed or partitioned to keep things running smoothly without overspending.

The job combines planning, building, monitoring, and constant improvement, and this work often forms the foundation of a successful data management platform.
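To make the pipeline and storage responsibilities above a little more concrete, here is a minimal sketch of a batch pipeline in plain Python. Everything in it is an assumption made for illustration: the sample records, the field names (`user_id`, `amount`, `ts`), and the choice to partition by event date. A real platform would read from actual source systems and write to a warehouse or object store rather than an in-memory dictionary.

```python
from collections import defaultdict
from datetime import datetime


def extract():
    """Stand-in for reading from a source system (hypothetical records)."""
    return [
        {"user_id": "1", "amount": "19.99", "ts": "2024-05-01T09:15:00"},
        {"user_id": "2", "amount": "5.00", "ts": "2024-05-01T17:40:00"},
        {"user_id": "1", "amount": "42.50", "ts": "2024-05-02T08:05:00"},
    ]


def transform(raw_rows):
    """Cast string fields to proper types and derive a partition key."""
    rows = []
    for row in raw_rows:
        ts = datetime.fromisoformat(row["ts"])
        rows.append({
            "user_id": int(row["user_id"]),
            "amount": float(row["amount"]),
            "ts": ts,
            "event_date": ts.date().isoformat(),  # assumed partition key
        })
    return rows


def load(rows):
    """Group rows by event_date, mimicking date-partitioned storage."""
    partitions = defaultdict(list)
    for row in rows:
        partitions[row["event_date"]].append(row)
    return dict(partitions)


def run_pipeline():
    """Run the three stages in order: extract, transform, load."""
    return load(transform(extract()))


partitions = run_pipeline()
for event_date, rows in sorted(partitions.items()):
    print(event_date, len(rows), "rows")
```

Partitioning by date is only one option. The point of the sketch is that a query for a single day would touch one partition instead of scanning everything, which is the kind of storage trade-off described above.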

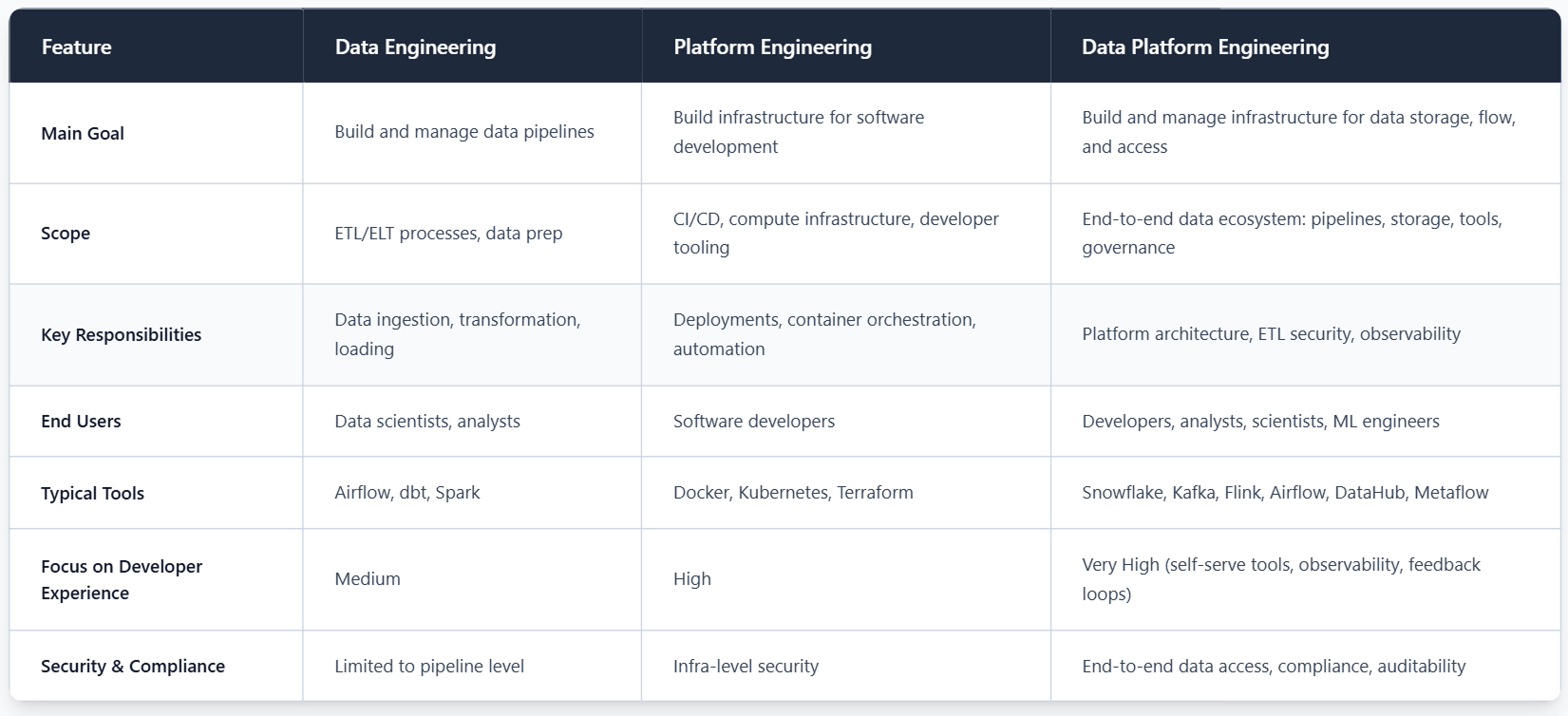

How Is This Different from Other Engineering Roles?

There’s a lot of overlap between data engineering, platform engineering, and data platform engineering, but they each focus on different slices of the pie.

Agile Data Engineering Platforms

As companies use more and more data, they need systems that can keep up. Agile data platforms are built to be flexible so they can grow quickly, handle changes easily, and keep working smoothly without causing problems.

Some of the common characteristics include:

- Modular architecture: Instead of one big system, agile data platforms are made of smaller, replaceable parts.

- Cloud-based architecture: Many teams use services like AWS, GCP, or Azure for flexibility and scale.

- Support for real-time and batch data: Depending on the use case, some data comes in as events happen, while other jobs run on a schedule.

- Built-in monitoring and observability: Engineers need visibility into how data flows, where it fails, and how to fix it quickly.

A well-designed platform doesn’t just move data; it helps people trust and use it.

Making Developer Experience a Priority

If the data platform is hard to use or doesn’t work well, people won’t use it. That’s why skilled data platform engineers focus on making it user-friendly. They treat it like a product and ask:

- Is it easy to get the data you need?

- Are the tools simple to use?

- Can teams run queries without everything slowing down?

They build dashboards to monitor usage, track slow queries, and collect feedback. They create internal tools and documentation that make sense. It’s not about flashy UIs; it’s about making things work without friction.
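Tracking slow queries does not have to start with a full dashboard. The sketch below shows one possible approach, assuming a hypothetical `track_query` helper and an arbitrary 50 ms threshold: time each query and record the ones that exceed the limit. The "queries" here are stand-ins (a quick sum and a sleep), not real database calls.

```python
import time
from contextlib import contextmanager

SLOW_QUERY_THRESHOLD_S = 0.05  # assumed threshold; tune per platform
slow_queries = []  # in a real system this would feed a dashboard or log


@contextmanager
def track_query(name, threshold_s=SLOW_QUERY_THRESHOLD_S):
    """Time the wrapped block and record it if it runs past the threshold."""
    start = time.perf_counter()
    try:
        yield
    finally:
        elapsed = time.perf_counter() - start
        if elapsed > threshold_s:
            slow_queries.append({"query": name, "seconds": elapsed})


# A fast operation: finishes well under the threshold, so it is not recorded.
with track_query("fast_lookup"):
    sum(range(10))

# A simulated slow query: the sleep pushes it past the threshold.
with track_query("daily_revenue_report"):
    time.sleep(0.1)

for entry in slow_queries:
    print(f"slow query: {entry['query']} took {entry['seconds']:.3f}s")
```

Collecting these records over time gives the team a list of the queries worth optimizing first, which is usually more useful than a generic performance chart.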

Best Practices

There’s no perfect blueprint, but some habits make a difference:

- Keep things simple and modular: Don’t build massive monoliths. Break things into small parts that can evolve.

- Automate the boring stuff: Set up automatic schema checks, data validations, and alerts that fire when something goes wrong.

- Write things down: Good internal documents can save hours and prevent a lot of back-and-forth messages.

- Prioritize data quality: If people can’t trust the data, they won’t use it, or they’ll use it and get the wrong answers.
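The automated schema checks, validations, and alerts mentioned above can be sketched in a few lines. This is an illustration under stated assumptions, not a recommended framework: the `EXPECTED_SCHEMA`, the sample rows, the non-negative `amount` rule, and the `alert` function (which only prints) are all hypothetical.

```python
EXPECTED_SCHEMA = {"order_id": int, "amount": float, "currency": str}  # assumed


def check_schema(row, schema=EXPECTED_SCHEMA):
    """Return a list of schema problems: missing, mistyped, or extra fields."""
    problems = []
    for field, expected_type in schema.items():
        if field not in row:
            problems.append(f"missing field: {field}")
        elif not isinstance(row[field], expected_type):
            actual = type(row[field]).__name__
            problems.append(
                f"{field}: expected {expected_type.__name__}, got {actual}"
            )
    for field in row:
        if field not in schema:
            problems.append(f"unexpected field: {field}")
    return problems


def validate(row):
    """Run the schema check, then a simple data-quality rule."""
    problems = check_schema(row)
    if not problems and row["amount"] < 0:
        problems.append("amount must be non-negative")
    return problems


def alert(message):
    """Placeholder alert; a real platform might page someone or post to chat."""
    print(f"ALERT: {message}")


rows = [
    {"order_id": 1, "amount": 25.0, "currency": "EUR"},   # valid
    {"order_id": 2, "amount": "25", "currency": "EUR"},   # wrong type
    {"order_id": 3, "amount": -4.0, "currency": "USD"},   # fails the rule
]

failures = {}  # row index -> list of problems
for index, row in enumerate(rows):
    problems = validate(row)
    if problems:
        failures[index] = problems
        alert(f"row {index}: {'; '.join(problems)}")
```

Running a check like this at the start of every pipeline run catches bad records before they reach the people who depend on the data.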

What’s Next for Data Platform Engineering?

- Smarter tooling: Machine learning is starting to help find problems in data pipelines or warn when something might go wrong. It’s not perfect, but it makes things easier.

- Decentralized data ownership: The idea of a data mesh is growing. Instead of one big team owning all the data, each team takes care of its own data like a product.

- Low-code for data: More tools are being built so that even people who don’t code can create data workflows. These tools are getting easier to use and more powerful.

- Streaming as the norm: Waiting hours for reports is becoming outdated. Teams want live data and instant insights, and streaming data is becoming the new standard.

Final Thoughts

Data platform engineering isn’t about using the trendiest tools. It’s about making sure people across the company can actually use the data in their day-to-day work. It’s the behind-the-scenes work that keeps everything running and often only gets noticed when something goes wrong.

If you’re building a data team or updating your systems, putting effort into data platform engineering is a smart choice. It’s not just about tech; it’s about making your data truly useful for your business.